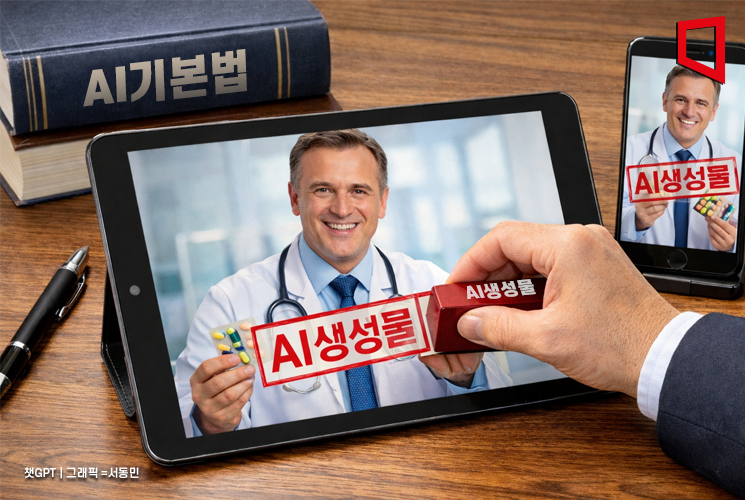

Introduction of AI-Generated Content Labeling to Prevent "Fake Doctor" Advertisements

Amendment to the Information and Communications Network Act Faces Slow Progress in the National Assembly

Intense Debate Expected Over Scope of Regulation and P

With only two days left before the Artificial Intelligence (AI) Basic Act takes effect, confusion is expected in the field because discussions on a labeling system for AI-generated content, intended to prevent so-called 'fake expert advertisements,' have yet to begin.

The AI Basic Act, set to take effect on January 22 as the world’s first such law, includes a provision requiring businesses to indicate when their products or services are generated by generative AI. However, there are no measures in place to address cases where these labels are damaged or tampered with during online distribution, making it difficult for the law to fulfill its intended purpose.

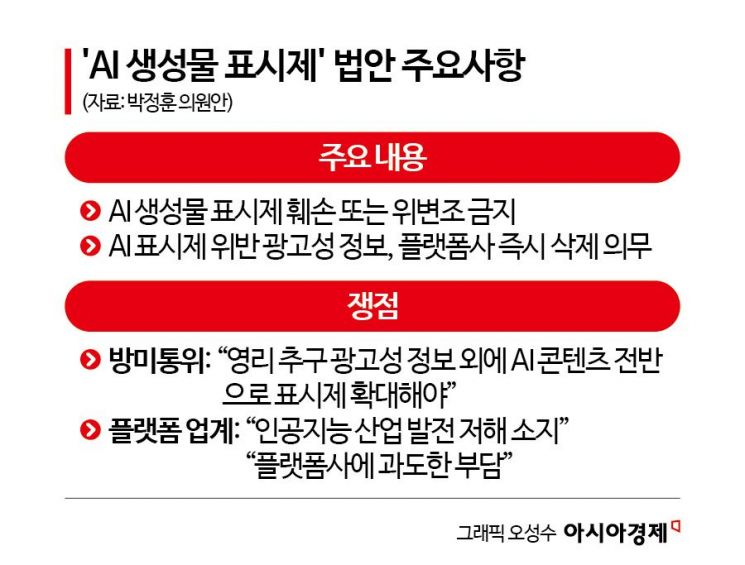

To supplement the 'transparency obligation' specified in the AI Basic Act, an amendment to the Act on Promotion of Information and Communications Network Utilization and Information Protection (hereinafter the Information and Communications Network Act) that includes rules on labeling AI-generated content has been proposed, mainly by ruling and opposition lawmakers on the Science, ICT, Broadcasting, and Communications Committee. However, full-scale discussions have yet to begin. The bill includes measures against false or exaggerated AI advertisements on online platforms such as portal sites and social networking services (SNS), which can mislead consumers or destabilize the market.

The Broadcasting, Media, and Communications Commission, the regulator, aims to have the Information and Communications Network Act amendment, which includes the AI-generated content labeling system, introduced within the first quarter of this year. A commission official stated, "Concerns about false or exaggerated advertisements using AI are shared by both ruling and opposition parties," adding, "The government also intends to provide maximum support."

The problem is that the legislative process is sluggish. An official in the office of a lawmaker on the Science, ICT, Broadcasting, and Communications Committee said, "We have requested prompt deliberation of the bill, but there is still no timeline," adding, "Since the items placed on the subcommittee agenda are decided by agreement between the ruling and opposition secretaries, we have to wait and see."

Even if discussions proceed, disagreements are expected over details such as the scope of the labeling system, as the government, industry, and the ruling and opposition parties hold differing views. For example, platform companies argue that the law imposes excessive obligations on the private sector, while the regulator, the Broadcasting, Media, and Communications Commission, insists that regulation should extend beyond advertisements to cover all AI-generated content.

The bills themselves also differ. The bill proposed in November 2024 by Jeon Yonggi, a lawmaker from the Democratic Party of Korea, sets a relatively broad scope for the labeling system, covering 'content generated using AI technology' in order to address issues such as deepfakes. In contrast, the bill proposed in October last year by Park Junghoon, a lawmaker from the People Power Party, limits the scope to 'advertising information' only.

In a phone interview with The Asia Business Daily, Jeong Junhwa, a legislative researcher at the National Assembly Research Service, said, "There are pros and cons, so it is a matter to be considered as a legislative decision," adding, "It is possible to first address the most urgent issues and gradually expand the scope, or, since regulation is inevitable, to implement a broad scope from the outset."

Platform companies oppose the provision in Park’s bill that requires them to 'immediately' delete advertising information whose label has been damaged or tampered with, arguing that it places an excessive burden on platform operators. They also say it is not easy to determine in real time whether a given piece of information is AI-generated advertising or has been tampered with. The Korea Internet Corporations Association, whose members include Naver, Kakao, Google, and Meta, said, "Administrative authorities such as the Broadcasting, Media, and Communications Commission are shifting responsibilities that should be theirs onto the platforms."

Researcher Jeong stated, "A clear definition of what constitutes damage or tampering is necessary," adding, "A compromise is needed that reduces the burden on private businesses while filling regulatory gaps and protecting consumers." A committee official added, "The obligations of platform companies will need to be adjusted during the legislative process."

© The Asia Business Daily(www.asiae.co.kr). All rights reserved.
