Enabling robots to handle deformable objects such as wires, clothing, or rubber bands is a core challenge in automating manufacturing and service industries. Because these objects have irregular shapes and move unpredictably, robots have so far struggled to recognize and manipulate them accurately.

To overcome these limitations, a Korean research team has developed a robotic technology that enables precise identification and skillful manipulation of deformable objects, even with incomplete visual information.

This technology is expected to contribute to intelligent automation across various industrial and service sectors, including cable and wire assembly, manufacturing processes involving soft components, and clothing sorting and packaging.

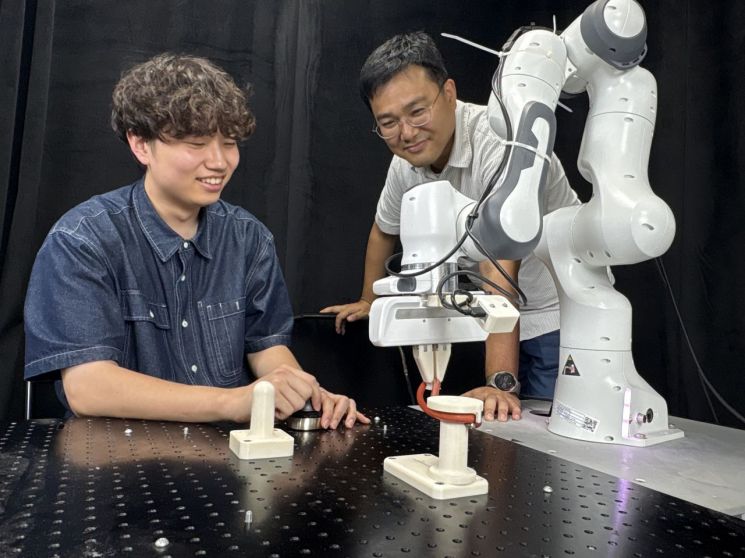

On August 21, KAIST announced that a research team led by Professor Daehyung Park of the School of Computing has developed an artificial intelligence (AI) technology called 'INR-DOM (Implicit Neural-Representation for Deformable Object Manipulation).' It enables robots to skillfully manipulate objects, such as elastic bands, whose shapes change continuously and whose parts are visually difficult to distinguish.

INR-DOM allows robots to reconstruct the complete shape of a deformable object from only the partial 3D information they observe, and to learn manipulation strategies based on this reconstruction.
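The article does not describe INR-DOM's network. As a rough illustration of what an implicit neural representation is, the sketch below assumes a PointNet-style encoder that max-pools per-point features of a partial point cloud into a latent code, plus a decoder that maps any 3D query point and that code to an occupancy probability. All names (`ToyImplicitShapeModel`, `encode`, `occupancy`) and layer sizes are hypothetical, and the weights are random rather than trained, so the output only demonstrates the interface: the shape can be queried at points that were never observed.

```python
import math
import random

# Hypothetical toy sizes; the real INR-DOM architecture is not given in the article.
LATENT_DIM = 8
HIDDEN_DIM = 16


def make_layer(n_in, n_out, rng):
    """Random weight matrix (list of rows) and zero bias vector for one dense layer."""
    weights = [[rng.gauss(0.0, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def dense(layer, x, activation=None):
    """Apply y = W x + b, optionally followed by an element-wise activation."""
    weights, biases = layer
    out = [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, biases)]
    return [activation(v) for v in out] if activation else out


def relu(v):
    return max(0.0, v)


def sigmoid(v):
    # Numerically stable logistic function.
    if v >= 0:
        return 1.0 / (1.0 + math.exp(-v))
    e = math.exp(v)
    return e / (1.0 + e)


class ToyImplicitShapeModel:
    """Hypothetical model.
    encode:    partial point cloud -> latent code (max-pooled, so point order is irrelevant)
    occupancy: (query point, latent code) -> probability that the point lies inside the object
    """

    def __init__(self, seed=0):
        rng = random.Random(seed)
        self.point_layer = make_layer(3, LATENT_DIM, rng)
        self.dec_hidden = make_layer(3 + LATENT_DIM, HIDDEN_DIM, rng)
        self.dec_out = make_layer(HIDDEN_DIM, 1, rng)

    def encode(self, points):
        features = [dense(self.point_layer, p, relu) for p in points]
        # Symmetric max-pooling over points (PointNet-style).
        return [max(f[i] for f in features) for i in range(LATENT_DIM)]

    def occupancy(self, query, latent):
        h = dense(self.dec_hidden, list(query) + latent, relu)
        return sigmoid(dense(self.dec_out, h)[0])


# Only an arc of a ring (t in [0, 2.9] rad) is "observed"; the rest is occluded.
partial = [(math.cos(0.1 * k), math.sin(0.1 * k), 0.0) for k in range(30)]
model = ToyImplicitShapeModel()
z = model.encode(partial)
# Query a point on the unobserved side of the ring.
p = model.occupancy((0.0, -1.0, 0.0), z)
print(f"occupancy at unobserved point: {p:.3f}")
```

Because the shape is stored as a function rather than a fixed set of points, a trained model of this kind could be queried anywhere on the hidden side of the band, and the max-pooling makes the latent code independent of the order in which points arrive.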

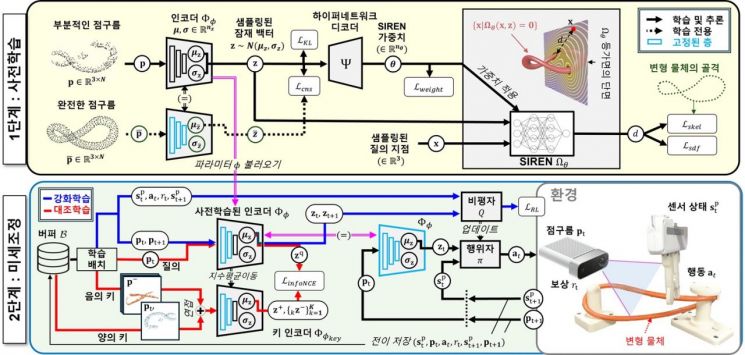

The research team also introduced a novel two-stage training framework that combines 'reinforcement learning' and 'contrastive learning' to enable INR-DOM to efficiently learn specific tasks.

In the first stage, pre-training, the model learns to reconstruct complete shapes from incomplete point clouds (a way of representing a 3D object as a set of points on its surface). This yields a state representation module that is robust to occlusions and effectively describes the surfaces of elastic objects.
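To make the pre-training idea concrete, the sketch below simulates an occluded observation by hiding half of a ring-shaped point cloud (a stand-in for a rubber band) and scores candidate reconstructions with the symmetric Chamfer distance, a common point-cloud reconstruction metric. This is an illustrative assumption: the article does not state which loss INR-DOM uses, and `ring_point_cloud`, `occlude`, and `chamfer_distance` are hypothetical helpers.

```python
import math


def ring_point_cloud(n=64, radius=1.0):
    """Points sampled on a circular band, a stand-in for a rubber band's surface."""
    return [(radius * math.cos(2 * math.pi * k / n),
             radius * math.sin(2 * math.pi * k / n), 0.0) for k in range(n)]


def occlude(points, camera_axis=0):
    """Toy self-occlusion: keep only points on the camera-facing side (coordinate >= 0)."""
    return [p for p in points if p[camera_axis] >= 0.0]


def sq_dist(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def chamfer_distance(pred, target):
    """Symmetric Chamfer distance: mean nearest-neighbour squared distance, both directions."""
    pred_to_target = sum(min(sq_dist(p, t) for t in target) for p in pred) / len(pred)
    target_to_pred = sum(min(sq_dist(t, p) for p in pred) for t in target) / len(target)
    return pred_to_target + target_to_pred


complete = ring_point_cloud()
partial = occlude(complete)

# Treating the partial cloud itself as the "reconstruction" leaves the hidden half
# unexplained, so the target-to-prediction term is large; a perfect one scores zero.
loss_partial = chamfer_distance(partial, complete)
loss_perfect = chamfer_distance(complete, complete)
print(len(partial), len(complete), round(loss_partial, 3), loss_perfect)
```

A reconstruction module trained to drive such a loss toward zero must learn to fill in the occluded part of the object, which is the property the pre-training stage is described as providing.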

In the second stage, fine-tuning, reinforcement learning and contrastive learning are applied together. This enables the robot to clearly distinguish subtle differences between the current and target states and to efficiently identify the optimal actions needed to complete a given task.
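The article does not specify which contrastive objective INR-DOM uses or how positive and negative pairs are chosen. As one common example, the sketch below implements an InfoNCE loss, assuming that two views of the same object state (for instance, under different occlusions) form a positive pair while the other states in the batch act as negatives. The embeddings are made-up numbers; `info_nce`, `view_a`, and `view_b` are hypothetical names.

```python
import math


def normalize(v):
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def cosine(a, b):
    return sum(x * y for x, y in zip(normalize(a), normalize(b)))


def info_nce(anchors, positives, temperature=0.1):
    """InfoNCE: anchor i should be most similar to positive i and less similar to
    every other positive j != i in the batch (the in-batch negatives)."""
    total = 0.0
    for i, a in enumerate(anchors):
        logits = [cosine(a, p) / temperature for p in positives]
        m = max(logits)  # subtract the max before exp for numerical stability
        log_sum_exp = m + math.log(sum(math.exp(l - m) for l in logits))
        total += log_sum_exp - logits[i]  # equals -log softmax(logits)[i]
    return total / len(anchors)


# Made-up embeddings of 3 distinct object states, each observed twice.
view_a = [[1.0, 0.1, 0.0], [0.0, 1.0, 0.1], [0.1, 0.0, 1.0]]
view_b = [[0.9, 0.2, 0.0], [0.1, 0.9, 0.0], [0.0, 0.1, 0.95]]
loss_matched = info_nce(view_a, view_b)
# Misaligned pairing: each anchor's "positive" is actually a different state.
loss_shuffled = info_nce(view_a, view_b[1:] + view_b[:1])
print(round(loss_matched, 4), round(loss_shuffled, 4))
```

Minimizing this loss pulls embeddings of the same state together and pushes different states apart, which is one way a representation can be made sensitive to small differences between a current and a target configuration before a reinforcement learning policy acts on it.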

In simulation, a robot using INR-DOM achieved higher success rates than existing methods across three tasks: inserting a rubber ring into a groove (sealing), installing an O-ring onto a component (installation), and untangling a twisted rubber band (disentanglement).

Notably, in the most challenging task, disentanglement, the success rate reached 75%, about 49 percentage points higher than that of the previous best technology (ACID, 26%).
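Because both figures are success rates, the gap between them can be stated either as an absolute difference in percentage points or as a relative improvement over ACID, and the two numbers differ considerably. A minimal arithmetic check using only the two rates reported above:

```python
inr_dom_rate = 75.0  # % success on disentanglement in simulation (from the article)
acid_rate = 26.0     # % success of the ACID baseline (from the article)

absolute_gain_pp = inr_dom_rate - acid_rate               # difference in percentage points
relative_gain_pct = 100.0 * absolute_gain_pp / acid_rate  # improvement relative to ACID, in %

print(f"{absolute_gain_pp:.0f} percentage points")  # prints "49 percentage points"
print(f"{relative_gain_pct:.0f}% relative gain")     # prints "188% relative gain"
```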

The research team also tested whether INR-DOM could be applied in real-world environments. In physical settings, the robot achieved success rates exceeding 90% in the insertion, installation, and disentanglement tasks.

Most notably, in the visually ambiguous bidirectional disentanglement task, the robot recorded a success rate 25% higher than conventional image-based reinforcement learning methods, demonstrating that it can overcome visual ambiguity and manipulate objects efficiently.

Researcher Minseok Song stated, "This study demonstrates the potential for robots to understand the complete shape of deformable objects using only incomplete information and to perform complex manipulation processes based on this understanding." He added, "We expect that the results of this research will lead to robotic technologies capable of collaborating with or substituting for humans in manufacturing, logistics, healthcare, and other industrial and service sectors."

This research was conducted with Minseok Song, a Master's student at the KAIST School of Computing, as the first author. The results were presented at the international robotics conference 'Robotics: Science and Systems (RSS) 2025,' held at USC in Los Angeles from June 21 to 25.

© The Asia Business Daily(www.asiae.co.kr). All rights reserved.
