"MI325X Full-Scale Mass Production in Q4 This Year"

CEO Lisa Su: "AI Demand Exceeds Expectations"

No Plans to Use Companies Other Than TSMC

New Server CPU Targeting Intel Also Unveiled

U.S. semiconductor company AMD unveiled its new artificial intelligence (AI) chip 'MI325X' on the 10th (local time), challenging Nvidia's monopolistic position. With a release schedule similar to Nvidia's next-generation chip 'Blackwell,' a direct showdown between the two companies is anticipated.

On the same day, AMD held the 'Advancing AI 2024' event at the Moscone Center in San Francisco, introducing next-generation AI and high-performance computing solutions and showcasing the new product MI325X.

The MI325X is the successor to the 'MI300X' released last year. It keeps the existing architecture and, according to AMD, delivers 1.3 times the peak AI computing performance and 1.8 times the memory capacity of Nvidia's H200. Mass production of the MI325X will begin by the end of the year, with shipments to the global market starting in January next year. Nvidia's next-generation chip Blackwell is also expected to begin mass production soon.

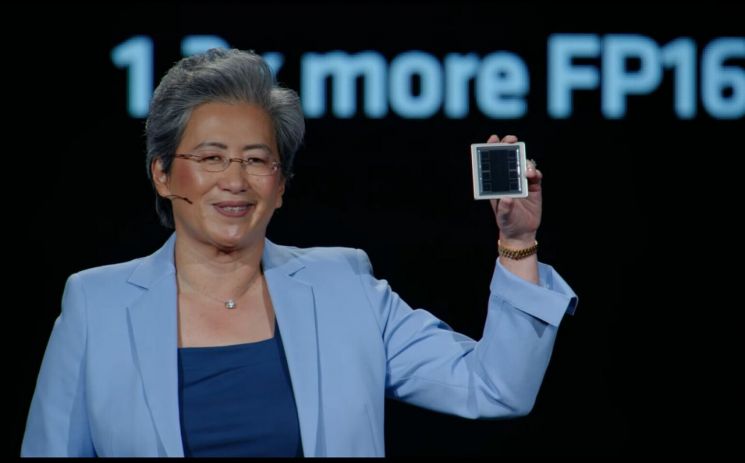

AMD CEO Lisa Su introduces the new artificial intelligence (AI) chip 'MI325X' at 'Advancing AI 2024' on the 10th (local time). [Image source=AMD]


Lisa Su, AMD's Chief Executive Officer (CEO), who marks her 10th year in the role this year, said, "The MI325X uses a new type of memory chip to deliver better performance running AI software than Nvidia's chips." The 'new type of memory chip' she mentioned is HBM3E, the latest version of High Bandwidth Memory (HBM). The MI325X offers up to 256GB of capacity and 6 terabytes per second (TB/s) of memory bandwidth. Compared with the MI300X, which carries 192GB of HBM3 memory, that is roughly 1.3 times the memory capacity.

At the event, AMD also revealed its next-generation AI chip roadmap. The company announced plans to release the MI350 next year and the MI400 in 2026, presenting a long-term strategy for the AI market. AMD also raised its AI chip-related sales target for this year from $4 billion to $4.5 billion, demonstrating strong determination to capture the market. CEO Su stated, "AI demand continues to grow and is exceeding expectations," adding, "It is clear that investments are increasing everywhere."

AMD also kept up its offensive against Intel in the server market. On the same day, it unveiled the 5th-generation 'EPYC' central processing unit (CPU) for servers, showcasing a broad product lineup ranging from low-cost 8-core chips to 192-core processors for supercomputers. AMD emphasized that its most expensive model, priced at $14,813, outperforms Intel's 5th-generation Xeon server chips.

AMD also announced the 'Ryzen AI PRO 300' processor for AI PCs aimed at enterprise customers. It highlighted that the highest-performance model in this series delivers 40% better performance than Intel's products.

CEO Su emphasized strengthening cooperation with TSMC, stating, "Currently, we have no plans to use any chip manufacturers other than Taiwan's TSMC for producing the latest AI chips." She added, "We want to utilize additional capacity outside Taiwan," expressing strong interest in TSMC's Arizona plant.

Despite the aggressive announcements, AMD's shares closed down 4% from the previous day in New York trading. The drop is seen as a sign of investor caution, given Nvidia's still-dominant share of the AI sector. Nvidia holds more than 80% of the global AI chip market, with AMD trailing behind.

© The Asia Business Daily(www.asiae.co.kr). All rights reserved.
