NVIDIA, the leading company in artificial intelligence (AI) chips, has unveiled its next-generation AI chip, the Blackwell 'B200'. The company says the chip delivers 30 times the inference performance of its predecessor, the H100. With this product, NVIDIA aims to further solidify its dominance in AI chips.

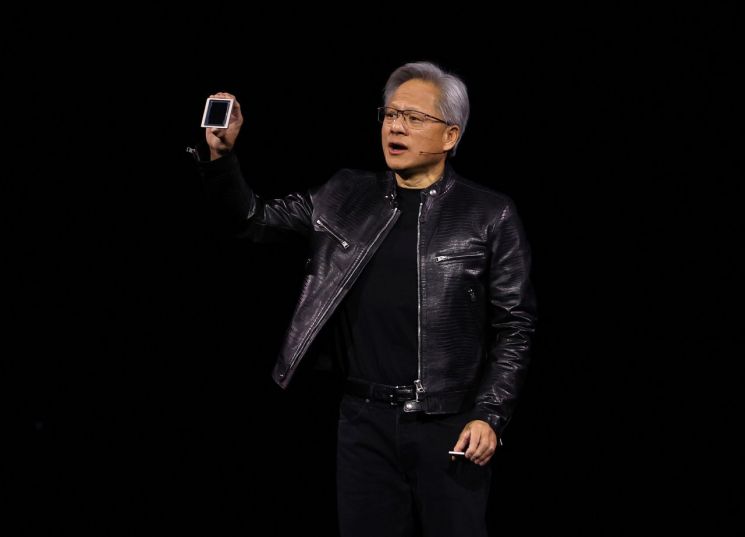

Jensen Huang, CEO of NVIDIA, introduced the next-generation AI chip on the 18th (local time) at the company's annual developer conference (GTC 2024), held at the SAP Center in San Jose, California, USA. Taking the stage in a black leather jacket, Huang said, "While Hopper is fantastic now, a larger graphics processing unit (GPU) is needed," adding, "Blackwell is the engine driving a new industrial revolution."

The B200, revealed that day, is built on the new Blackwell platform and joins two dies, each about the size of an existing NVIDIA chip, into a single chip. It packs 208 billion transistors, more than double the 80 billion of the previous generation. According to NVIDIA, its inference performance is over 30 times higher than that of the H100, while cost and energy consumption have been cut to one twenty-fifth.

CEO Huang emphasized, "NVIDIA has pursued accelerated computing for the past 30 years to realize innovations such as deep learning and AI," and added, "We will realize the potential of AI across all industries by collaborating with the world's most dynamic companies." Major customers announced at the event included Amazon Web Services, Dell, Google, Meta Platforms, Microsoft (MS), OpenAI, Oracle, Tesla, and xAI.

Blackwell, the successor to the existing Hopper architecture, is named after David Harold Blackwell, the first Black mathematician inducted into the U.S. National Academy of Sciences. It supports AI training and real-time inference for large language models (LLMs) that scale up to 10 trillion parameters. NVIDIA updates its GPU architecture every two years. CEO Huang stressed that "Blackwell is a platform, not just a chip," signaling that NVIDIA is moving beyond simply supplying GPU chips to become a platform company that also builds software.

The price of the B200, scheduled for release later this year, has not yet been disclosed. However, the price of the existing Hopper-based H100 is estimated at $25,000 to $40,000 per chip, with the entire system costing around $200,000. Wall Street expects the B200 to be priced around $50,000 per chip.

Meanwhile, NVIDIA's stock closed at $884.55 per share on the Nasdaq, up 0.7% from the previous session. The stock has risen nearly 80% so far this year.

© The Asia Business Daily(www.asiae.co.kr). All rights reserved.
