Safe Communication Through Context-Based eHMI

(From left) Professor Seungjun Kim of GIST, doctoral student Yumin Kang, master's student Jungju Park, doctoral student Seokhyun Hwang of University of Washington, doctoral students Minwoo Sung and Kwangbin Kim of GIST.

On December 15, the Gwangju Institute of Science and Technology (GIST) announced that the research team led by Professor Kim Seungjun from the Department of AI Convergence has developed a new "external human-machine interface (eHMI) technology" to help autonomous vehicles communicate more safely and clearly with road users.

This research is significant in that it goes beyond the limitations of most previous studies, which have only assumed "pedestrian-only" scenarios. Instead, it recreated real road environments in virtual reality (VR), where pedestrians, cyclists, and drivers coexist, to verify the effectiveness of eHMI.

With the full-scale introduction of autonomous vehicles, every road user outside the vehicle, including pedestrians, cyclists, and regular drivers, will face a changed environment. Traditionally, drivers and pedestrians could confirm each other's intentions through nonverbal cues such as eye contact or hand gestures. However, in the era of fully autonomous driving, these signals will no longer function. Therefore, technology that enables autonomous vehicles to clearly communicate their intentions and actions to people nearby (eHMI) is essential.

Until now, most research has focused on one-to-one situations between an autonomous vehicle and a single pedestrian. In environments where multiple users move simultaneously, as on real roads, researchers have consistently pointed out that it is unclear to whom (target), when (timing), and where (location) the autonomous vehicle should deliver its message. This ambiguity can lead to misunderstandings and risks.

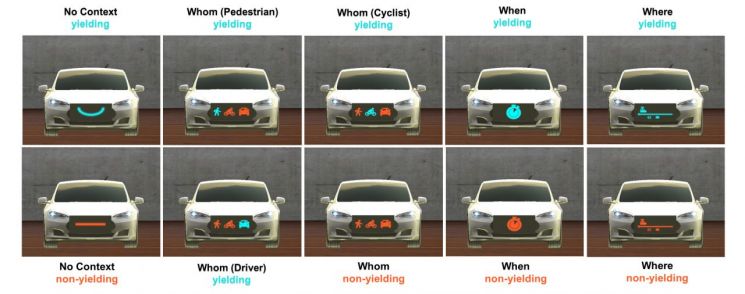

Visual representations of external human-machine interface (eHMI) types for autonomous vehicles. Provided by GIST

To address these real-world issues, the research team proposed a "context-based eHMI" design direction that enables clear and safe communication even in complex road situations.

The team classified the external signals (eHMI) of autonomous vehicles into five types: "No eHMI" (no signal); "No Context" (a basic signal indicating a simple intention to yield, using a mouth-shaped symbol); "Whom" (target information indicating to whom the vehicle is yielding); "When" (timing information indicating when the vehicle will stop); and "Where" (location information indicating where the vehicle will stop). They then conducted experiments to compare the effectiveness of each type.
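For readers who think in data structures, the five signal types can be sketched as a small Python model. This is only an illustration of the classification described above, not the research team's implementation: the names `Context`, `EhmiCondition`, `CONDITIONS`, and `contextual` are hypothetical and chosen for this example.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Context(Enum):
    """Kind of contextual information a signal can carry (hypothetical names)."""
    WHOM = "whom"    # target: to whom the vehicle is yielding
    WHEN = "when"    # timing: when the vehicle will stop
    WHERE = "where"  # location: where the vehicle will stop


@dataclass(frozen=True)
class EhmiCondition:
    """One of the five experimental signal types described in the article."""
    name: str
    shows_signal: bool          # False only for the "No eHMI" baseline
    context: Optional[Context]  # None when no contextual information is shown


# The five types as listed in the article, in the same order.
CONDITIONS = [
    EhmiCondition("No eHMI", shows_signal=False, context=None),
    EhmiCondition("No Context", shows_signal=True, context=None),
    EhmiCondition("Whom", shows_signal=True, context=Context.WHOM),
    EhmiCondition("When", shows_signal=True, context=Context.WHEN),
    EhmiCondition("Where", shows_signal=True, context=Context.WHERE),
]


def contextual(conditions):
    """Return the names of the conditions that carry contextual information."""
    return [c.name for c in conditions if c.context is not None]
```

Calling `contextual(CONDITIONS)` separates the three context-based signals from the two baselines, which mirrors the comparison the experiment was built around.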

All signals were designed with a unique color and symbol system to avoid confusion with international standard traffic signals and were displayed identically on the front, sides, and rear of the vehicle.

A total of 42 participants, including pedestrians, cyclists, and regular drivers, took part in the experiment. The research team used VR head-mounted displays (HMDs) for pedestrians, indoor stationary bicycle trainers that replicated actual pedaling and speed changes for cyclists, and vehicle simulators that mimicked real driving environments for drivers. This setup allowed all participants to interact with autonomous vehicles simultaneously in the same virtual road environment.

The results showed that the "Whom" signal led to the fastest and most stable decision-making, demonstrating the best performance across all indicators.

When it was clearly indicated to whom the vehicle was yielding, pedestrians, cyclists, and drivers were all able to make decisions and take action in a shorter amount of time. Subjective evaluations such as sense of safety, trust, and clarity were also highest for this signal.

In contrast, the "No eHMI" (no signal) condition showed the lowest performance across all indicators, and the "No Context" (basic signal indicating only the intention to yield) was limited in its ability to reduce confusion.

The "When" and "Where" signals also demonstrated higher reliability and safety than the no-signal condition ("No eHMI"). Notably, both signals resulted in zero behavioral errors caused by misinterpretation, clearly showing that contextual information directly impacts decision-making stability.

Furthermore, analysis of electrodermal activity (EDA), a biometric signal used to assess tension and anxiety, revealed that participants' psychological tension tended to decrease when the "Whom" signal was provided.

Interviews with participants also showed a clear preference for the "Whom" signal. Many commented that "it was the easiest to understand and trust because the signal's target was clear," while some found the symbol-based "No Context" signal confusing due to its difference from existing traffic signal systems.

Some participants suggested that methods to enhance the intuitiveness of visual information, such as "color coding," which conveys meaning through color alone, would be helpful.

Lead author Yumin Kang, a PhD candidate, stated, "On real roads, not only pedestrians but also cyclists and drivers are present. This study is meaningful in that it presents a new direction for interface design that can reduce misunderstandings and risks between autonomous vehicles and road users in such realistic environments."

Professor Kim Seungjun, who led the research, emphasized, "It is rare worldwide to implement a real road environment with multiple traffic participants in VR and verify the effectiveness of eHMI. In the future, it will be essential for autonomous vehicles to communicate not only the fact that they are yielding, but also to whom, when, and where they are yielding, as this will be the key to traffic safety."

The research team plans to expand the scope of their work based on the multi-user VR traffic simulation platform developed in this study, including advancing AI-based autonomous driving technologies, designing smart intersections, and developing safety systems to protect vulnerable road users.

© The Asia Business Daily (www.asiae.co.kr). All rights reserved.
