Motif 12.7B Released on Hugging Face

Enhanced Cost Efficiency in Development and Operations

On November 5, Motif Technologies, an artificial intelligence (AI) startup, announced that it has released its in-house large language model (LLM), "Motif 12.7B," as open source on Hugging Face.

This 12.7-billion-parameter model was developed and trained entirely by Motif "from scratch." Motif 12.7B is a high-performance LLM and a significant advance over the company's previously released lightweight model (Motif 2.6B), with improvements in both reasoning capability and training efficiency.

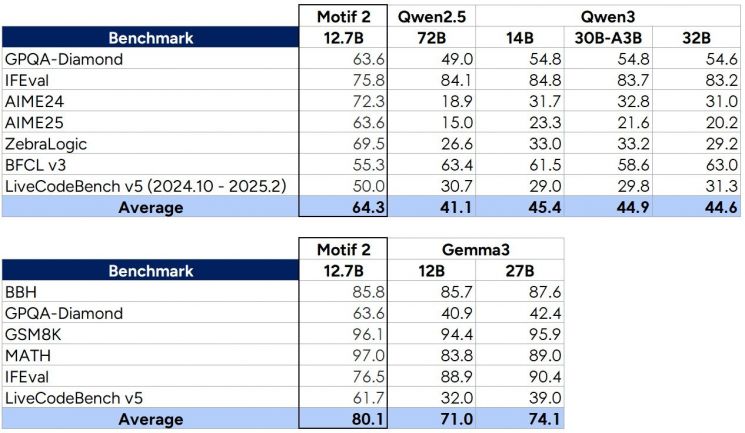

According to benchmark results, Motif 12.7B outperformed Alibaba's 72-billion-parameter Qwen 2.5 (72B) on the math, science, and logic tasks used to assess reasoning capability. It also scored higher on key reasoning-related evaluation metrics than Google's Gemma 3 models of a similar size class.

Motif also improved cost efficiency in both development and operation. During development, omitting the reinforcement learning stage reduced the burden of costly training. In operation, the model automatically skips unnecessary reasoning computations, which reduces GPU usage, simplifies model management, and lowers response latency.

Lim Junghwan, CEO of Motif, stated, "Motif 12.7B is not merely a performance upgrade; it is also a case study in the structural evolution of AI models. At the same time, it offers an exemplary solution for companies seeking high-performance, cost-efficient LLMs."

© The Asia Business Daily(www.asiae.co.kr). All rights reserved.

![Clutching a Stolen Dior Bag, Saying "I Hate Being Poor but Real"... The Grotesque Con of a "Human Knockoff" [Slate]](https://cwcontent.asiae.co.kr/asiaresize/183/2026021902243444107_1771435474.jpg)