The Electronics and Telecommunications Research Institute (ETRI) announced on August 19 that it has proposed two standards to the International Organization for Standardization and the International Electrotechnical Commission (ISO/IEC). The first, "AI Red Team Testing," proactively identifies risks in artificial intelligence (AI) systems. The second, the "Trustworthiness Fact Label (TFL)," lets consumers easily understand how trustworthy an AI system is.

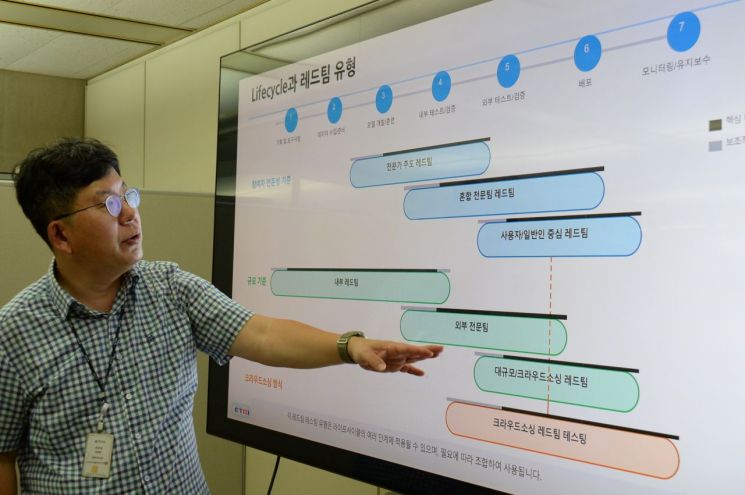

ETRI researchers explain the types of red teams and the criteria for applying them across the entire AI system development life cycle. Provided by Electronics and Telecommunications Research Institute (ETRI)

The "AI Red Team Testing" standard that ETRI proposed to ISO/IEC is a method for probing the safety of AI systems through deliberate, adversarial testing. For example, it can identify in advance situations in which a generative AI model provides incorrect information or is misused after its user safeguards have been bypassed.

ETRI is serving as editor of ISO/IEC 42119-7, the international standard in this area, and is developing internationally standardized test procedures and methods that can be applied in sectors such as healthcare, finance, and defense.

ETRI is also working with Asan Medical Center in Seoul on three fronts: developing a red team evaluation methodology specific to healthcare, building a red team testing framework for digital medical products that use advanced AI, and carrying out empirical testing. In addition, ETRI has formed a consortium with major companies including STA, Naver, Upstage, SelectStar, KT, and LG AI Research to strengthen international standardization cooperation on AI red teaming.

ETRI is also leading the development of the ISO/IEC 42117 series of standards for the Trustworthiness Fact Label. The standard can be implemented in several ways: companies can disclose the information themselves, or third-party organizations can verify and certify it.

Its core function is to show at a glance how trustworthy an AI system is, giving consumers transparent information much like a nutrition label on food. ETRI also plans to consider adding environmental, social, and governance (ESG) factors in the future, such as the AI system's carbon emissions (carbon footprint).

The label can also be linked to the AI management system standard (ISO/IEC 42001), an international certification standard for organizations that use AI, and can serve as a framework for demonstrating the trustworthiness of the products and services they develop.

The "AI Red Team Testing" and "Trustworthiness Fact Label" standards are also aligned with government-led strategies such as "Sovereign AI" and the "AI G3 Leap" initiative. Beyond building technological capability, these efforts could therefore contribute substantially to the global competition to lead the setting of AI rules.

Lee Seungyun, Director of the Standards Research Division at ETRI, said, "AI red team testing and trustworthiness labels are core technical elements of AI regulatory policies in the United States, the European Union (EU), and other countries. Once established, the international standards for AI red team testing and trustworthiness labels will serve as common criteria for evaluating the safety and trustworthiness of AI systems worldwide."

© The Asia Business Daily(www.asiae.co.kr). All rights reserved.