Autotuning Up to 2.5 Times Faster with Code Similarity-Based "Diffusion" Method

Selected for OSDI, One of the Top Two Computer Systems Conferences

Only Two Papers Accepted from Korea This Year

A new technology has been developed that cuts the time required to convert deep learning AI models into executable programs by more than half.

On August 12, the team led by Professor Seulgi Lee of the Department of Computer Science and Engineering at UNIST announced that it had developed a method that speeds up the autotuning process by up to 2.5 times. The work was accepted at OSDI, a prestigious conference in the field of computer systems.

In OSDI's more than 20-year history, only 12 papers with a Korean first author have been accepted.

For an AI model to function in practice, it must undergo a "compilation" process, which transforms the high-level program written by humans into a form that computer processors can understand.

For example, even a command like "distinguish cat photos" must be converted into thousands of lines of complex calculation code for processors such as GPUs or CPUs to actually execute it.
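To make "many possible code combinations" concrete, the hypothetical Python sketch below writes one high-level operation, a matrix multiplication, as two different loop structures. It is only an illustration (real compilers such as the one Ansor builds on emit optimized machine code, not Python): both functions compute the same result, and the `tile` parameter in the second is the kind of knob an autotuner chooses.

```python
def matmul_naive(a, b):
    """Straightforward triple loop: C[i][j] = sum over k of A[i][k] * B[k][j]."""
    n, m, p = len(a), len(b), len(b[0])
    c = [[0.0] * p for _ in range(n)]
    for i in range(n):
        for j in range(p):
            for k in range(m):
                c[i][j] += a[i][k] * b[k][j]
    return c


def matmul_tiled(a, b, tile=2):
    """Same computation with every loop split into blocks of size `tile`.
    The tile size changes memory-access patterns (and thus speed on real
    hardware) but not the result."""
    n, m, p = len(a), len(b), len(b[0])
    c = [[0.0] * p for _ in range(n)]
    for i0 in range(0, n, tile):
        for j0 in range(0, p, tile):
            for k0 in range(0, m, tile):
                # Work on one tile x tile block at a time.
                for i in range(i0, min(i0 + tile, n)):
                    for j in range(j0, min(j0 + tile, p)):
                        for k in range(k0, min(k0 + tile, m)):
                            c[i][j] += a[i][k] * b[k][j]
    return c


A = [[1, 2, 3], [4, 5, 6]]
B = [[7, 8], [9, 10], [11, 12]]
print(matmul_naive(A, B))          # [[58.0, 64.0], [139.0, 154.0]]
print(matmul_tiled(A, B, tile=2))  # same values, different loop structure
```

Every valid tile size (and, in practice, loop order, unrolling, vectorization and more) yields another correct program, which is why the number of candidates grows so quickly.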

Autotuning is the step in this process that automatically searches hundreds of thousands of possible code combinations for the configuration that runs fastest and most efficiently on a given processor. Depending on the model, however, tuning can take anywhere from several minutes to several hours, tying up significant computing resources and consuming a lot of power.
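The basic loop of autotuning can be sketched as "pick a configuration, measure it, keep the best". The Python below is a minimal sketch under stated assumptions: the knob names, their value lists, and `toy_cost` are hypothetical stand-ins for compiling and timing each candidate on real hardware, and plain random search is used for simplicity (Ansor itself relies on a learned cost model and evolutionary search).

```python
import random

# Hypothetical search space for ONE operator; each key is a scheduling "knob".
SEARCH_SPACE = {
    "tile_i": [1, 2, 4, 8, 16, 32, 64],
    "tile_j": [1, 2, 4, 8, 16, 32, 64],
    "tile_k": [1, 2, 4, 8, 16, 32, 64],
    "unroll": [0, 16, 64, 512],
    "vectorize": [False, True],
    "parallel": [False, True],
}


def space_size(space):
    """Number of distinct configurations = product of the choices per knob."""
    size = 1
    for choices in space.values():
        size *= len(choices)
    return size


def toy_cost(cfg):
    """Hypothetical stand-in for compiling a candidate and timing it.
    Purely illustrative numbers: lower means faster."""
    cost = sum(abs(cfg[k] - 16) for k in ("tile_i", "tile_j", "tile_k"))
    cost += 0 if cfg["vectorize"] else 20
    cost += 0 if cfg["parallel"] else 30
    cost += abs(cfg["unroll"] - 64) / 64
    return cost


def autotune(space, cost_fn, budget, seed=0):
    """Random search: measure `budget` random configurations, keep the cheapest."""
    rng = random.Random(seed)
    best_cfg, best_cost = None, float("inf")
    for _ in range(budget):
        cfg = {knob: rng.choice(choices) for knob, choices in space.items()}
        cost = cost_fn(cfg)
        if cost < best_cost:
            best_cfg, best_cost = cfg, cost
    return best_cfg, best_cost


print(space_size(SEARCH_SPACE))  # 5488
best_cfg, best_cost = autotune(SEARCH_SPACE, toy_cost, budget=200)
print(best_cfg, best_cost)
```

Even this toy space has several thousand candidates for a single operator, and a model contains many operators, each needing its own measurements; that per-trial cost is what makes tuning take minutes to hours.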

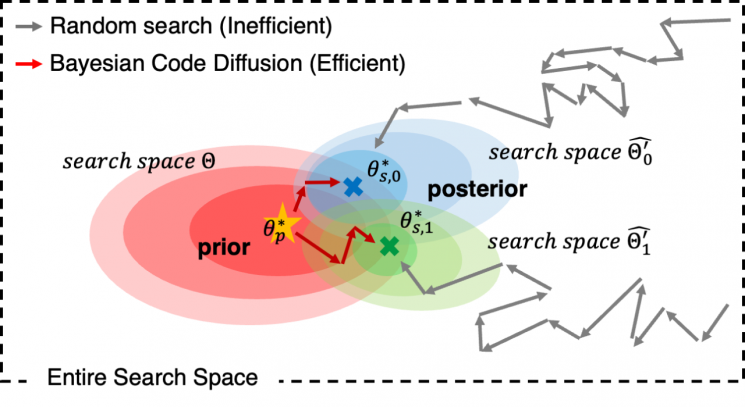

The research team focused on the fact that deep learning models contain many repetitive computational structures, and reduced the search space by allowing similar operators to share information.

Instead of searching for every code combination from scratch, they increased autotuning speed by reusing existing results.
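The article does not describe how the team measures similarity or how its "diffusion" step passes results between operators, so the Python below is only a hypothetical sketch of the general idea of reusing earlier results. The similarity score (product of per-dimension size ratios), the 0.5 threshold, and the trial budgets are all assumptions: identical operators reuse a cached result outright, similar ones get a short refinement starting from a neighbor's result, and only dissimilar ones get a full search.

```python
from math import prod

# Hypothetical trial budgets per operator.
FULL_BUDGET = 1000    # search from scratch
REFINE_BUDGET = 100   # short search seeded with a similar operator's result


def similarity(shape_a, shape_b):
    """Toy similarity: product of per-dimension min/max ratios (1.0 = identical)."""
    if len(shape_a) != len(shape_b):
        return 0.0
    return prod(min(x, y) / max(x, y) for x, y in zip(shape_a, shape_b))


def plan_tuning(shapes, threshold=0.5):
    """Return the total number of tuning trials for a list of operator shapes:
    - identical shape already tuned -> reuse the cached result (0 trials)
    - similar shape already tuned   -> short refinement from its result
    - nothing similar               -> full search from scratch"""
    tuned = []  # shapes whose results sit in the (hypothetical) cache
    total = 0
    for shape in shapes:
        best = max((similarity(shape, t) for t in tuned), default=0.0)
        if best == 1.0:
            continue
        total += REFINE_BUDGET if best >= threshold else FULL_BUDGET
        tuned.append(shape)
    return total


# Hypothetical model: 12 identical layers, each with four matrix multiplications.
layer = [(128, 768, 2304), (128, 768, 768), (128, 768, 3072), (128, 3072, 768)]
shapes = layer * 12

print(plan_tuning(shapes))                  # 3100 trials with similarity sharing
print(plan_tuning(shapes, threshold=1.01))  # 4000 trials with exact reuse only
```

In this toy setup the repeated layers cost nothing after the first, and the one shape that closely resembles an earlier one needs only a tenth of the usual trials; the real savings depend on how similar a model's operators actually are.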

When the method was applied to Ansor, an existing autotuning framework, the time needed to generate executable code with the same performance was shortened by an average factor of 2.5 on CPUs and 2 on GPUs.
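A speedup factor translates into time saved as follows, where $T_{\text{Ansor}}$ is the tuning time with the original framework, $T_{\text{new}}$ the time with the new method, and $S$ the speedup:

$$
S = \frac{T_{\text{Ansor}}}{T_{\text{new}}}, \qquad
\text{share of time saved} = 1 - \frac{T_{\text{new}}}{T_{\text{Ansor}}} = 1 - \frac{1}{S}
$$

With $S = 2.5$ the saving is $1 - 0.4 = 0.6$ (60% less tuning time); with $S = 2$ it is $1 - 0.5 = 0.5$ (50%). For a hypothetical tuning run that takes 60 minutes with Ansor, that would mean 24 minutes at a 2.5x speedup and 30 minutes at 2x.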

Professor Seulgi Lee said, "By reducing compilation time, we can not only use limited computational resources more efficiently by decreasing the number of times GPUs or CPUs are used in experiments, but also reduce power consumption."

This research was led by Isu Jung, a researcher at UNIST, as the first author. The research was supported by the Institute of Information & Communications Technology Planning & Evaluation under the Ministry of Science and ICT.

OSDI (Operating Systems Design and Implementation) is considered, along with SOSP (Symposium on Operating Systems Principles), one of the two premier conferences in the field of computer systems. AI technologies such as Google's TensorFlow have also been introduced at this conference.

This year, 338 papers were submitted to OSDI, and only 48 were accepted. In Korea, in addition to Professor Seulgi Lee's team, Professor Jaewook Lee's team from Seoul National University was also selected. The conference was held in Boston, USA, for three days starting July 7.

© The Asia Business Daily(www.asiae.co.kr). All rights reserved.
