'2024 Intelligent Information Society User Panel Survey' Released

24% of Respondents Have Used Generative AI

A recent survey found that roughly one in four Koreans has used a generative artificial intelligence (AI) service such as OpenAI's ChatGPT. Respondents also voiced concerns about exposure to illegal information and leaks of personal information through the algorithm-based recommendation services of portal sites and YouTube.

On May 29, the Korea Communications Commission and the Korea Information Society Development Institute released the results of the "2024 Intelligent Information Society User Panel Survey," which examined user perceptions, attitudes, and acceptance of intelligent information technologies and services such as AI.

The survey, first conducted in 2018, is intended to support user-centered broadcasting and telecommunications policies as intelligent information technologies and services spread. This year it covered 4,420 people aged 15 to 69 in 17 regions nationwide who use a smartphone and access the internet at least once a day.

The main findings show that both the share of users who have tried generative AI and the share who have paid for a subscription rose sharply from the previous year.

Specifically, 24% of all respondents said they had used generative AI, nearly double the previous year's figure (an increase of 11.7 percentage points). The share who had paid for a subscription rose to 7.0%, more than seven times the previous year's 0.9%.

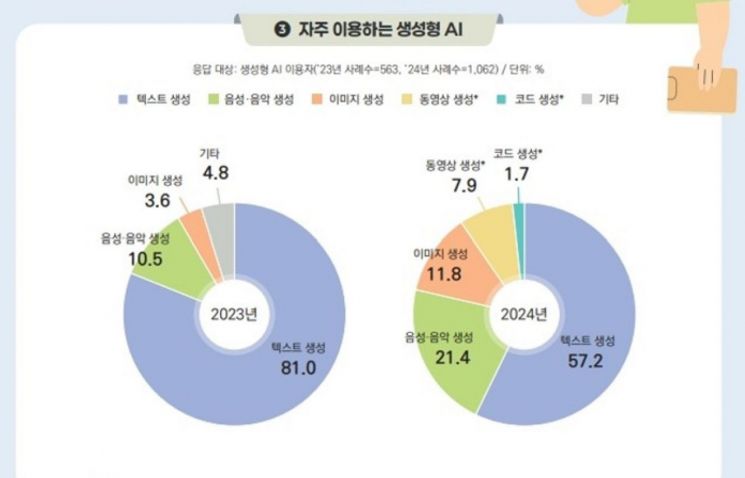

By type of use, text generation accounted for 57.2%, voice and music generation for 21.4%, and image generation for 11.8%. In the previous year, text generation made up the vast majority of use (81%), so this year's more even spread suggests that generative AI is now being applied to a wider variety of tasks.

The main motivations for using generative AI were "efficiency in searching for information" (87.9%), "helpful for daily tasks" (70%), and "to have someone to talk to" (69.5%). All of these figures rose compared to the previous year.

The main reasons for not using generative AI were "concern that it requires a high level of knowledge to use" (65.2%), "concern about personal information leaks" (58.9%), and "concern that it would be complicated to use" (57.3%).

Regarding potential negative effects, respondents expressed high levels of concern about job replacement (60.9%), decline in creativity (60.4%), copyright infringement (58.8%), and the potential for criminal misuse (58.7%).

As for concerns about the algorithm-based recommendation services provided by portals and YouTube, last year the top concern for both was "value bias" (49.9% for portals, 51.0% for YouTube). This year, however, the top concern for portals was "exposure to illegal information" (47.4%), while for YouTube it was "leakage of personal information" (48.2%).

Asked which ethical responsibilities they expect from AI recommendation service providers, respondents most often cited "disclosure of the criteria used to select algorithmic content" (69.8%). This figure was up 16 percentage points from 2022 and 7 percentage points from 2023, indicating that users increasingly want to know how recommendation algorithms operate.

The Korea Communications Commission plans to review the user behaviors, perceptions, and concerns about intelligent information technologies and services identified in this survey and to use the findings in developing future user protection policies.

© The Asia Business Daily(www.asiae.co.kr). All rights reserved.
