Simultaneous Understanding and Processing of Text, Speech, and Images

Efficient Development in a Short Period Through Model Merging Technology

Competitiveness Comparable to Global Models

On May 1, Kakao announced the performance and development stories of its integrated multimodal language model "Kanana-o" and its audio language model "Kanana-a" through its official tech blog.

The integrated multimodal language model "Kanana-o" is the first in Korea capable of simultaneously understanding and processing various types of information, including text, speech, and images. The model is designed to process any combination of text, speech, or images as input and to respond with context-appropriate text or natural speech.

Kakao efficiently developed "Kanana-o" in a short period by merging models specialized in different modalities: "Kanana-v," which is optimized for image processing, and "Kanana-a," which specializes in audio understanding and generation, utilizing its "model merging" technology.

Kanana-o leverages speech emotion recognition technology to accurately interpret user intent and provide appropriate responses and answers for each situation. It analyzes nonverbal cues such as intonation, speech patterns, and voice tremors, and generates emotionally resonant and natural-sounding speech responses that fit the conversational context.

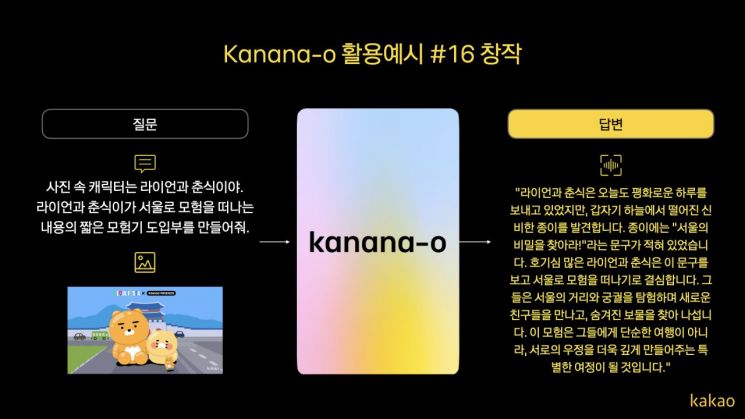

The model also features streaming-based speech synthesis technology, enabling responses without long wait times. For example, if a user inputs "Create a fairy tale that matches this picture" along with an image, Kanana-o can understand the speech, analyze the user's intonation and emotions, and generate and narrate a creative story in real time.

Kanana-o achieved performance levels comparable to leading global models in both Korean and English benchmarks, and demonstrated a clear advantage in Korean benchmarks. In particular, the model showed a significant gap in emotion recognition capabilities in both Korean and English, proving the potential of AI models that can understand and communicate emotions.

Kim Byunghak, Kanana Performance Leader at Kakao, stated, "The Kanana model is evolving beyond traditional text-centric AI by integratively processing complex forms of information, enabling AI to see, listen, speak, and empathize like a human. Based on our proprietary multimodal technology, we will continue to strengthen our AI technology competitiveness and contribute to the advancement of the domestic AI ecosystem by consistently sharing our research results."

Last year, Kakao unveiled its lineup of proprietary AI models under the "Kanana" brand, and has since shared performance results and development stories for its language models, multimodal language models, and visual generation models through its official tech blog.

© The Asia Business Daily(www.asiae.co.kr). All rights reserved.

![Clutching a Stolen Dior Bag, Saying "I Hate Being Poor but Real"... The Grotesque Con of a "Human Knockoff" [Slate]](https://cwcontent.asiae.co.kr/asiaresize/183/2026021902243444107_1771435474.jpg)