(From left) Professor Kyubin Lee of the Department of AI Convergence, PhD candidate Sangjun Noh, integrated master's and doctoral candidates Jongwon Kim, Raeyoung Kang, Dongwoo Nam, Senior Researcher Seunghyuk Baek of the Korea Institute of Machinery and Materials.

The Gwangju Institute of Science and Technology (GIST) has developed an AI robot grasping model designed to work efficiently alongside human workers.

On April 29, GIST announced that the research team led by Professor Kyubin Lee of the Department of AI Convergence has developed "GraspSAM," an innovative robot grasping model with world-leading performance, designed specifically for collaboration with human operators.

GraspSAM accepts several types of prompt input, including points, boxes, and text, and is designed to accurately predict grasp points on objects in a single inference pass. This overcomes the limitations of existing models and enables stable grasping of even previously unseen objects in complex environments.

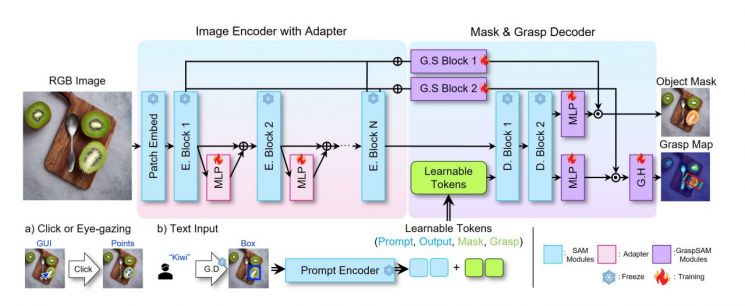

Conventional deep learning-based grasping models required a separate AI model to be trained for each environment and situation. To address this, the research team became the first to apply SAM (Segment Anything Model), a general-purpose image segmentation model developed by Meta (Facebook's parent company), to robotic grasp prediction.

GraspSAM uses SAM's powerful object segmentation capabilities to predict grasp points on objects with minimal fine-tuning. To achieve this, the team applied adapter techniques and learnable tokens to adapt SAM for grasp point inference.
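To illustrate what an "adapter technique" generally means, the following is a minimal sketch of a generic residual bottleneck adapter in plain Python. It is not GraspSAM's actual architecture, which the announcement does not describe; the class name `BottleneckAdapter`, the dimensions, and the initialization values are all assumptions made for illustration. The idea is that the large pretrained model stays frozen while a small trainable module adds a correction to its features.

```python
import random


def matvec(matrix, vector):
    """Multiply a matrix (given as a list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def relu(vector):
    """Element-wise ReLU."""
    return [max(0.0, v) for v in vector]


class BottleneckAdapter:
    """Generic residual adapter (hypothetical): x + up(relu(down(x)))."""

    def __init__(self, dim, bottleneck, seed=0):
        rng = random.Random(seed)
        # Down-projection (dim -> bottleneck): small random values, trainable.
        self.down = [[rng.gauss(0.0, 0.02) for _ in range(dim)]
                     for _ in range(bottleneck)]
        # Up-projection (bottleneck -> dim): zeros, so the adapter starts
        # as an identity mapping and leaves the frozen features unchanged.
        self.up = [[0.0] * bottleneck for _ in range(dim)]

    def __call__(self, features):
        hidden = relu(matvec(self.down, features))
        delta = matvec(self.up, hidden)
        return [x + d for x, d in zip(features, delta)]

    def num_trainable(self):
        """Count the adapter's trainable parameters."""
        return sum(len(r) for r in self.down) + sum(len(r) for r in self.up)


# Stand-in for features coming out of a frozen backbone layer.
features = [0.5] * 8
adapter = BottleneckAdapter(dim=8, bottleneck=2)
adapted = adapter(features)
```

Because the up-projection starts at zero, the adapter initially passes features through unchanged, and only its small set of parameters would be updated during fine-tuning.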

GraspSAM is designed to adapt instantly to different environments, objects, and situations through a simple point, box, or text prompt provided by the user. This allows robots to grasp a wider variety of objects, and because grasp points are predicted in a single computation, the model dramatically expands the applicability of robots in industrial settings.
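The three prompt types mentioned above could be represented roughly as follows. This is only a sketch: the announcement does not describe GraspSAM's programming interface, so the type names (`PointPrompt`, `BoxPrompt`, `TextPrompt`) and the `validate_prompt` helper are hypothetical and simply show how a caller might check a prompt against an image size before passing it to a model.

```python
from dataclasses import dataclass
from typing import Union


@dataclass(frozen=True)
class PointPrompt:
    """A single pixel the user clicks on (hypothetical type)."""
    x: int
    y: int


@dataclass(frozen=True)
class BoxPrompt:
    """An axis-aligned box around the target object (hypothetical type)."""
    x_min: int
    y_min: int
    x_max: int
    y_max: int


@dataclass(frozen=True)
class TextPrompt:
    """A free-form description of the target object (hypothetical type)."""
    text: str


Prompt = Union[PointPrompt, BoxPrompt, TextPrompt]


def validate_prompt(prompt: Prompt, width: int, height: int) -> bool:
    """Return True if the prompt is usable for an image of the given size."""
    if isinstance(prompt, PointPrompt):
        # The point must fall inside the image.
        return 0 <= prompt.x < width and 0 <= prompt.y < height
    if isinstance(prompt, BoxPrompt):
        # The box must have positive area and stay within the image.
        return (0 <= prompt.x_min < prompt.x_max <= width
                and 0 <= prompt.y_min < prompt.y_max <= height)
    if isinstance(prompt, TextPrompt):
        # The text must contain at least one non-whitespace character.
        return bool(prompt.text.strip())
    raise TypeError(f"unsupported prompt type: {type(prompt).__name__}")


# Example prompts for a 640x480 camera image.
prompts = [PointPrompt(320, 240), BoxPrompt(100, 80, 300, 260), TextPrompt("red mug")]
usable = [validate_prompt(p, width=640, height=480) for p in prompts]
```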

GraspSAM achieved state-of-the-art (SOTA) performance on prominent grasping benchmark datasets such as "Grasp-anything" and "Jacquard." In addition, experimental results confirmed that robots can perform stable grasping tasks even in complex real-world environments.

SOTA is a term frequently used in the fields of artificial intelligence (AI) and machine learning (ML). A SOTA model generally refers to one that records the highest performance on benchmark datasets or delivers the most efficient and accurate results for a specific task.

Professor Kyubin Lee stated, "The GraspSAM model enables intuitive interaction between robots and users, and demonstrates outstanding grasping capabilities even in complex environments. We expect it to be widely utilized not only in industrial sites but also in various fields such as home robots and service robots."

© The Asia Business Daily (www.asiae.co.kr). All rights reserved.
