Ultra-Lightweight Federated Learning AI Model 'PRISM' Developed by Yoo Jaejun's Team

Reduces Communication Costs and Boosts Performance

Accepted at ICLR 2025, with Potential Applications in Healthcare, Finance, and Personal Mobile AI

An ultra-lightweight artificial intelligence model has been developed to help generate high-quality images without directly sending sensitive data to a server.

This opens up the possibility of safely using high-performance generative AI in settings where protecting personal information is crucial, such as the analysis of patients' MRI and CT scans.

The team led by Professor Yoo Jaejun at the Graduate School of Artificial Intelligence at UNIST has developed a federated learning AI model called PRISM (PRivacy-preserving Improved Stochastic Masking).

Federated learning is a technique in which 'local AIs' on individual devices train on their own data without uploading that sensitive data to a server. The server then aggregates only the training results to build a single 'global AI'.
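The aggregation step described above can be illustrated with a toy version of federated averaging (FedAvg), the classic baseline for this setup. This is a minimal sketch, not PRISM: the one-parameter model, the learning rate, the number of local steps, and the helper names `local_update` and `fedavg_round` are all hypothetical, chosen only to show that the server receives trained parameters but never the raw data.

```python
from typing import List


def local_update(w: float, data: List[float], lr: float = 0.1, steps: int = 5) -> float:
    """Client side (hypothetical toy model): gradient descent on the mean
    squared error (w - x)^2. The raw data never leaves this function;
    only the updated parameter w is returned to the server."""
    mean_x = sum(data) / len(data)
    for _ in range(steps):
        grad = 2.0 * (w - mean_x)
        w -= lr * grad
    return w


def fedavg_round(w_global: float, clients: List[List[float]]) -> float:
    """One FedAvg round: every client trains from the current global model,
    then the server averages the returned parameters, weighted by dataset size."""
    updates = [local_update(w_global, data) for data in clients]
    sizes = [len(data) for data in clients]
    total = sum(sizes)
    return sum(n * w for n, w in zip(sizes, updates)) / total


if __name__ == "__main__":
    # Each inner list is one device's private data.
    clients = [[1.0, 2.0, 3.0], [10.0, 12.0], [5.0]]
    w = 0.0
    for _ in range(50):
        w = fedavg_round(w, clients)
    print(round(w, 3))  # 5.5
```

With this sample data the global parameter converges to 5.5, the mean of all six data points, even though no device ever shared its values with the server.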

PRISM acts as an AI model that mediates the learning process between the local AIs and the global AI in federated learning. Compared with existing models, it cuts communication costs by an average of 38% and, by representing the model at an ultra-lightweight 1-bit level, reduces model size by 48%. This allows it to run smoothly on the limited CPUs and memory of small devices such as smartphones and tablet PCs.

Even when local AIs differ significantly in their data and performance, PRISM can accurately determine which local AI's information to trust and incorporate, producing high-quality final outputs.

For example, turning a 'selfie' into a Studio Ghibli-style image previously required uploading the photo to a server, which raised privacy concerns. With PRISM, all processing happens on the smartphone itself, preventing privacy invasion and delivering results quickly. However, a separate local AI model that can generate images directly on the smartphone still has to be developed.

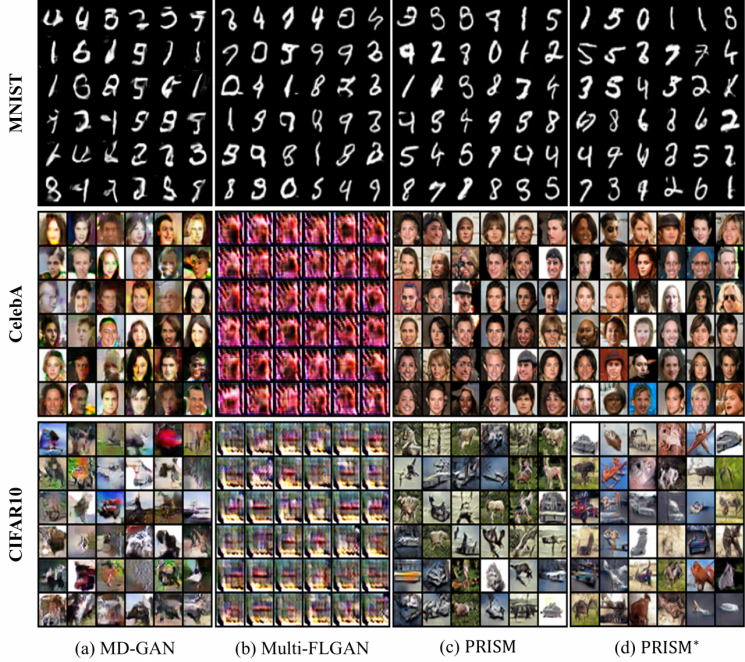

Experiments using datasets commonly used for AI performance validation (MNIST, FMNIST, CelebA, and CIFAR10) showed that PRISM required less communication than existing methods while achieving higher image generation quality. In particular, additional experiments with the MNIST dataset confirmed compatibility with diffusion models, which are mainly used to generate Studio Ghibli-style images.

The research team improved communication efficiency by applying a binary mask method that selectively shares only important information, instead of transmitting all information as large-scale parameters.
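The article does not spell out PRISM's masking procedure, so the sketch below only illustrates the general idea behind mask-based federated learning, under stated assumptions: every device holds the same frozen, randomly initialized weights (for example, generated from a shared seed), learns a per-weight keep probability, and uploads a sampled binary mask (1 bit per weight) instead of 32-bit floating-point parameters. All function names are hypothetical, and `client_weights` is merely a placeholder for a non-uniform aggregation rule, not the formula the researchers use.

```python
import random
from typing import List, Optional


def sample_mask(probs: List[float], rng: random.Random) -> List[int]:
    """Client side: draw a stochastic binary mask, keeping weight i with probability probs[i]."""
    return [1 if rng.random() < p else 0 for p in probs]


def apply_mask(weights: List[float], mask: List[int]) -> List[float]:
    """Effective model (assumed setup): frozen random weights gated on/off by the mask."""
    return [w * m for w, m in zip(weights, mask)]


def pack_bits(mask: List[int]) -> bytes:
    """Pack a 0/1 mask into bytes, 8 entries per byte, so each weight costs 1 bit to send."""
    out = bytearray((len(mask) + 7) // 8)
    for i, bit in enumerate(mask):
        if bit:
            out[i // 8] |= 1 << (i % 8)
    return bytes(out)


def unpack_bits(payload: bytes, n: int) -> List[int]:
    """Server side: recover the first n mask bits from a packed payload."""
    return [(payload[i // 8] >> (i % 8)) & 1 for i in range(n)]


def aggregate_masks(masks: List[List[int]],
                    client_weights: Optional[List[float]] = None) -> List[float]:
    """Average client masks into per-weight keep probabilities for the next round.
    client_weights is a hypothetical hook for non-uniform aggregation (uniform by default)."""
    if client_weights is None:
        client_weights = [1.0] * len(masks)
    total = sum(client_weights)
    n = len(masks[0])
    return [sum(cw * m[i] for cw, m in zip(client_weights, masks)) / total
            for i in range(n)]


if __name__ == "__main__":
    rng = random.Random(0)
    n = 1000
    # Assumption: all parties regenerate these weights from a shared seed, so they are never sent.
    frozen = [rng.gauss(0.0, 1.0) for _ in range(n)]
    probs = [0.5] * n
    client_masks = [sample_mask(probs, rng) for _ in range(3)]
    payloads = [pack_bits(m) for m in client_masks]
    print("float32 payload:", 4 * n, "bytes; packed mask:", len(payloads[0]), "bytes")
    received = [unpack_bits(p, n) for p in payloads]
    new_probs = aggregate_masks(received)
    effective = apply_mask(frozen, sample_mask(new_probs, rng))
    print("kept weights:", sum(1 for w in effective if w != 0.0))
```

With 1,000 weights, the packed mask is 125 bytes versus 4,000 bytes for float32 values, a 32-fold reduction per upload. This arithmetic illustrates 1-bit communication in general; it is not the source of the 38% and 48% figures reported for PRISM, which are measured against existing federated methods.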

They also tackled data heterogeneity and training instability with a Maximum Mean Discrepancy (MMD) loss function, which precisely evaluates generation quality, and a Mask-Aware Dynamic Aggregation (MADA) strategy, which weights each local AI's contribution differently during aggregation.
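MMD compares two sets of samples through a kernel function: it is zero when the two distributions match and grows as they drift apart, which is why it can serve as a training signal for a generator. The following is a minimal, generic estimator with a Gaussian kernel. The kernel choice, the bandwidth `sigma`, and the toy one-dimensional "real" and "generated" samples are assumptions for illustration, not the configuration used in PRISM.

```python
import math
from typing import List, Sequence

Sample = Sequence[float]


def gaussian_kernel(a: Sample, b: Sample, sigma: float = 1.0) -> float:
    """RBF kernel k(a, b) = exp(-||a - b||^2 / (2 * sigma^2))."""
    sq_dist = sum((ai - bi) ** 2 for ai, bi in zip(a, b))
    return math.exp(-sq_dist / (2.0 * sigma ** 2))


def mean_kernel(xs: List[Sample], ys: List[Sample], sigma: float) -> float:
    """Average kernel value over all pairs (x, y) with x from xs and y from ys."""
    return sum(gaussian_kernel(x, y, sigma) for x in xs for y in ys) / (len(xs) * len(ys))


def mmd2(xs: List[Sample], ys: List[Sample], sigma: float = 1.0) -> float:
    """Biased (V-statistic) estimate of squared MMD:
    E[k(x, x')] + E[k(y, y')] - 2 * E[k(x, y)]."""
    return (mean_kernel(xs, xs, sigma)
            + mean_kernel(ys, ys, sigma)
            - 2.0 * mean_kernel(xs, ys, sigma))


if __name__ == "__main__":
    real = [(0.0,), (1.0,)]
    close_fake = [(0.1,), (1.1,)]   # generated samples near the real data
    far_fake = [(5.0,), (6.0,)]     # generated samples far from the real data
    print(mmd2(real, close_fake) < mmd2(real, far_fake))  # True
```

A generator trained to minimize this quantity is pushed toward producing samples whose distribution matches the real data; in practice the estimate would be computed on mini-batches of features rather than on tiny hand-picked lists.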

Professor Yoo Jaejun said, "This can be applied not only to image generation, but also to various generative AI fields such as text generation, data simulation, and automated documentation," adding, "It will be an effective and safe solution for fields dealing with sensitive information, such as healthcare and finance."

This research was conducted in collaboration with Professor Han Dongjun of Yonsei University, with UNIST researcher Seo Kyungguk as first author.

The research results have been accepted for presentation at ICLR (The International Conference on Learning Representations) 2025, one of the world's top three AI conferences. ICLR 2025 will be held in Singapore for five days from April 24 to 28.

The research was supported by the National Research Foundation of Korea, the Institute of Information & Communications Technology Planning & Evaluation, and the UNIST Supercomputing Center under the Ministry of Science and ICT.

© The Asia Business Daily(www.asiae.co.kr). All rights reserved.
