ExaOne 3.5 Development Cost at 7 Billion KRW, Lower Than DeepSeek

Kakao: "DeepSeek's Approach Is Not a Difficult Issue"

Global Tech Giants Also Unveil 'Cost-Efficient' AI Models

Since the launch of China's DeepSeek, competition among 'low-cost, high-performance' artificial intelligence (AI) models has been intensifying. Following Google, the French AI company Mistral unveiled its own chatbot app, and in South Korea, Kakao, which has partnered with OpenAI, is understood to have begun drawing up plans to develop cost-efficient AI models. Startups that struggle to acquire expensive graphics processing units (GPUs) are instead maximizing performance through software technology.

The French startup Mistral, often called the European 'ChatGPT,' launched a mobile application (app) for its proprietary chatbot 'Le Chat' on the 6th (local time). Shortly after the launch, Mistral co-founder Arthur Mensch said in an interview with local media, "We can train models more efficiently than DeepSeek," signaling his determination to demonstrate technology that surpasses DeepSeek. On the 5th, Google unveiled its AI model lineup, describing 'Gemini 2.0 Flash-Lite' as its "most cost-efficient" model. OpenAI released its small reasoning model 'o3-mini' at the end of last month.

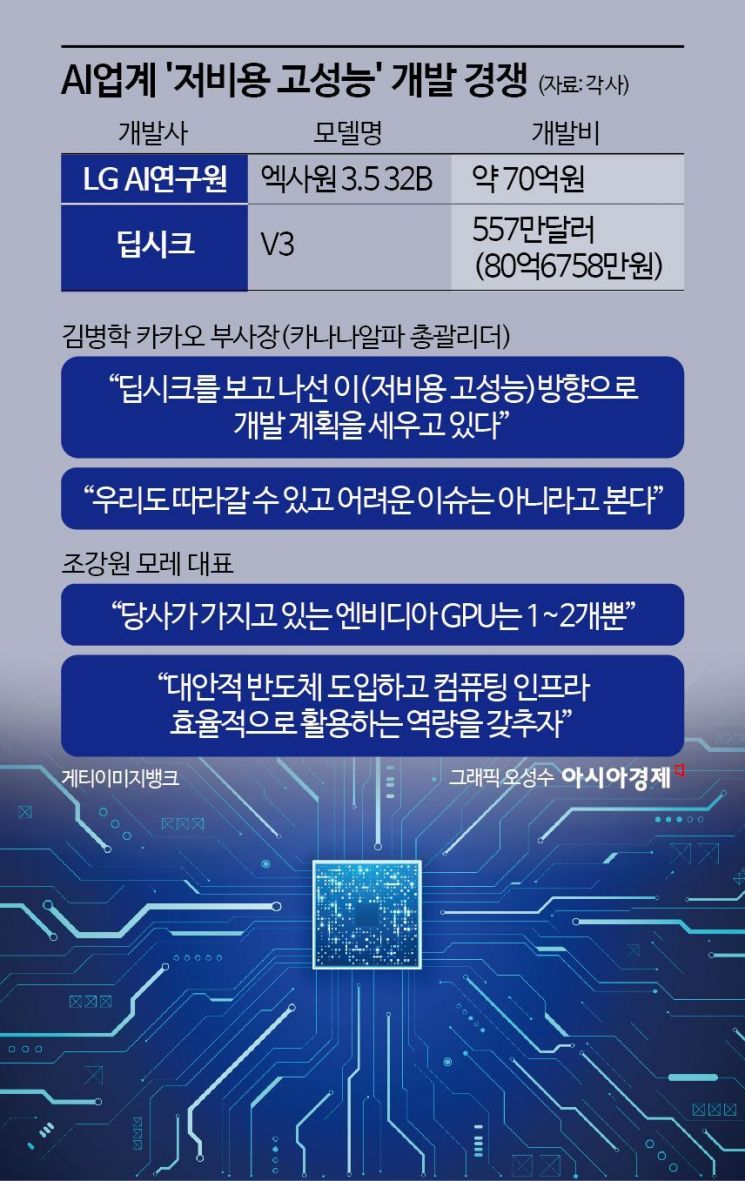

Cost-efficient AI models are also emerging in South Korea. LG AI Research, part of the LG Management Development Institute, revealed that developing the 32B (32-billion-parameter) version of 'ExaOne 3.5' cost approximately 7 billion KRW. ExaOne 3.5, an AI model built for all employees of LG affiliates, was launched in December last year.

That figure is lower than the development cost of DeepSeek, which is known for its cost-effectiveness (performance relative to price). According to a paper DeepSeek released in December last year, training its 'V3' model cost a total of $5.57 million (approximately 8.07 billion KRW). DeepSeek noted, however, that this figure excludes the costs of prior research and experiments related to data or algorithms.
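The cost comparison can be checked with simple arithmetic. The sketch below uses only the figures quoted in this article. The exchange rate is not stated, so it is back-derived from the article's own KRW conversion, and the two figures may not cover the same scope of costs (DeepSeek's excludes prior research and experiments).

```python
# Figures as reported in the article (KRW conversion is the article's own).
EXAONE_COST_KRW = 7_000_000_000       # ExaOne 3.5 32B, as disclosed by LG AI Research
DEEPSEEK_V3_COST_USD = 5_570_000      # DeepSeek-V3 training run, per DeepSeek's paper
DEEPSEEK_V3_COST_KRW = 8_067_580_000  # the article's conversion of the USD figure

# Exchange rate implied by the article's conversion (KRW per USD).
implied_rate = DEEPSEEK_V3_COST_KRW / DEEPSEEK_V3_COST_USD

# Absolute gap and relative cost of ExaOne 3.5 versus DeepSeek-V3.
gap_krw = DEEPSEEK_V3_COST_KRW - EXAONE_COST_KRW
ratio = EXAONE_COST_KRW / DEEPSEEK_V3_COST_KRW

print(f"Implied exchange rate: {implied_rate:,.1f} KRW/USD")
print(f"Gap: {gap_krw / 1e9:.2f} billion KRW")
print(f"ExaOne 3.5 cost as a share of DeepSeek-V3: {ratio:.1%}")
```

With these inputs the implied rate is about 1,448 KRW per dollar, and ExaOne 3.5's reported cost comes to roughly 87% of DeepSeek-V3's, a gap of about 1.07 billion KRW.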

ExaOne 3.5 is said to deliver performance comparable to DeepSeek's despite its lower development cost. In December last year, it ranked first on the 'Open LLM Leaderboard,' the large language model (LLM) evaluation run by the largest AI platform, Hugging Face. Designed specifically for the workplace, it can process long texts equivalent to 100 pages of A4 paper at once, and it offers features such as prompt recommendations tailored to each job category and complex data analysis.

On the 6th, Baek Gwang-hoon, head of LG AI Research, said, "We used NVIDIA's 'H100' GPUs for four months and applied the Mixture of Experts (MoE) technique, which is considered a key factor in DeepSeek's low-cost development." MoE activates only the specific expert sub-models best suited to a given input, reducing the computation required for training and inference. He added, "It is regrettable; the model would have been better known if it had been released globally rather than only within the (LG) group," and said, "We plan to soon unveil an AI model at the level of DeepSeek's 'R1' and release it as open source."
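The MoE idea Baek describes can be illustrated with a toy top-k router. This is a minimal, generic sketch in plain Python, not LG AI Research's or DeepSeek's implementation; the `ToyExpert` class, the router weights, and the choice of 8 experts with k = 2 are hypothetical assumptions for illustration. Every expert receives a router score, but only the k best-scoring experts are actually run.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]


class ToyExpert:
    """Hypothetical stand-in for one expert sub-network (here: a simple scaling)."""

    def __init__(self, scale: float) -> None:
        self.scale = scale
        self.calls = 0  # counts how often this expert is actually evaluated

    def __call__(self, x: Sequence[float]) -> Vector:
        self.calls += 1
        return [self.scale * v for v in x]


def softmax(xs: Sequence[float]) -> Vector:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(
    x: Sequence[float],
    gate_weights: Sequence[Sequence[float]],
    experts: Sequence[Callable[[Sequence[float]], Vector]],
    k: int = 2,
) -> Tuple[Vector, List[int]]:
    """Top-k MoE layer: score all experts, but run only the k highest-scoring ones."""
    # Router: one logit per expert, the dot product of the input with that expert's gate vector.
    logits = [sum(w * v for w, v in zip(row, x)) for row in gate_weights]
    chosen = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:k]
    # Renormalize the gate scores over the selected experts only.
    weights = softmax([logits[i] for i in chosen])
    out = [0.0] * len(x)
    for w, i in zip(weights, chosen):
        y = experts[i](x)  # unselected experts are never called
        out = [o + w * yi for o, yi in zip(out, y)]
    return out, chosen


# Usage: 8 toy experts, a 2-dimensional input, hypothetical router weights.
experts = [ToyExpert(float(s)) for s in range(1, 9)]
gate_weights = [[float(i - 4), 0.5] for i in range(8)]
out, chosen = moe_forward([1.0, 2.0], gate_weights, experts, k=2)
print("selected experts:", chosen)
print("expert evaluations:", sum(e.calls for e in experts), "of", len(experts))
```

In a real model each expert is a large feed-forward network, so running 2 of 8 experts per input cuts that layer's compute roughly in proportion while the total parameter count stays the same.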

Kakao, which is preparing to launch its AI brand 'Kanana,' is also expected to focus on cost efficiency. Kim Byung-hak, Vice President of Kakao and General Leader of Kanana Alpha, said at a briefing, "In building 'Kanana,' we divided our language models by size into Nano, Essence, and Flag, and after seeing DeepSeek, we are planning how to develop in this (low-cost, high-performance) direction." He added, "We believe we can catch up, and it is not a difficult issue."

Some startups are also cutting AI development costs by using lower-spec GPUs. AI infrastructure solutions company Moreh is developing high-performance AI models through software technology, without NVIDIA's high-end 'H100' GPU. CEO Jo Kang-won said, "We have only one or two NVIDIA GPUs," adding, "High-performance GPUs are necessary to some extent, but it is essential to adopt alternative semiconductors and to be able to use computing infrastructure efficiently."

An official at an AI startup predicted, "Competition over cost efficiency will accelerate," and forecast that "development costs will fall to around 1 billion KRW."

© The Asia Business Daily(www.asiae.co.kr). All rights reserved.
