Kakao announced on the 23rd that it has established a management system to proactively identify and manage risks that may arise during the development and operation of artificial intelligence (AI) technology.

The system, called the Kakao AI Safety Initiative (Kakao ASI), is a comprehensive set of guidelines aimed at minimizing risks in AI development and operation and at building safe, ethical AI systems. It was unveiled at Kakao's developer conference, 'if kakao 2024.'

Kakao ASI enables proactive responses to risks that may arise at any point in the lifecycle of an AI system: design, development, testing, deployment, monitoring, and updates. It covers risks caused by both AI and humans, and its scope explicitly includes risks that arise from negligence or human error.

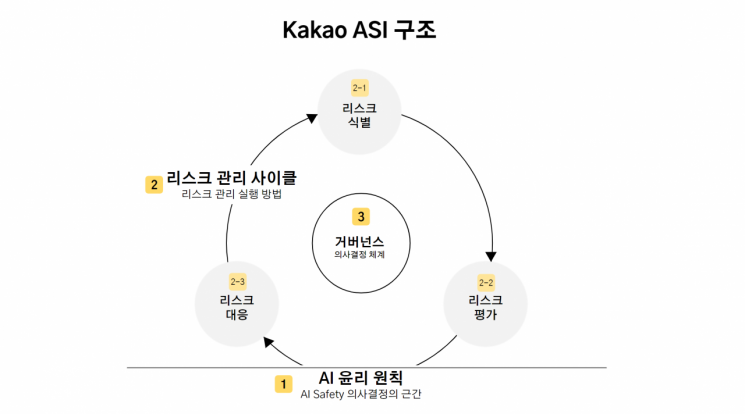

Kakao ASI consists of three core elements: ▲Kakao AI Ethical Principles ▲Risk Management Cycle ▲AI Risk Governance. The Kakao AI Ethical Principles build on the "Guidelines for Responsible AI of Kakao Group" announced in March 2023 and include principles such as social ethics, inclusiveness, transparency, privacy, and user protection. They apply to both developers and users.

The Risk Management Cycle consists of three iterative stages: identification, assessment, and response. It plays a key role in minimizing unethical or immature AI technology and in ensuring safety and reliability, and it is applied repeatedly across the entire lifecycle of an AI system.

AI Risk Governance is the decision-making system that manages and supervises the development, deployment, and use of AI. It covers internal policies, procedures, and accountability structures, as well as alignment with external regulations. Within this governance system, AI-related risks are reviewed from multiple perspectives. It has three tiers: AI Safety, the ERM Committee responsible for enterprise-wide risk management, and the company's highest decision-making body.

© The Asia Business Daily(www.asiae.co.kr). All rights reserved.

![Clutching a Stolen Dior Bag, Saying "I Hate Being Poor but Real"... The Grotesque Con of a "Human Knockoff" [Slate]](https://cwcontent.asiae.co.kr/asiaresize/183/2026021902243444107_1771435474.jpg)