Industry's Smallest Chip Spacing at 7㎛

50% Performance and Capacity Improvement Compared to HBM3

"Leading the High-Capacity HBM Market Development"

Samsung Electronics has developed a high-capacity product by stacking HBM3E, the fifth generation of high-bandwidth memory (HBM), 12 layers high for the first time in the industry. The new product offers more capacity than the 8-layer HBM3E product while keeping the same size. Its bandwidth is high enough to download 40 UHD movies in one second. On the same day, US-based Micron announced that it had begun mass production of a 24GB, 8-layer HBM3E product, marking the start of full-scale competition in the global memory market for fifth-generation HBM.

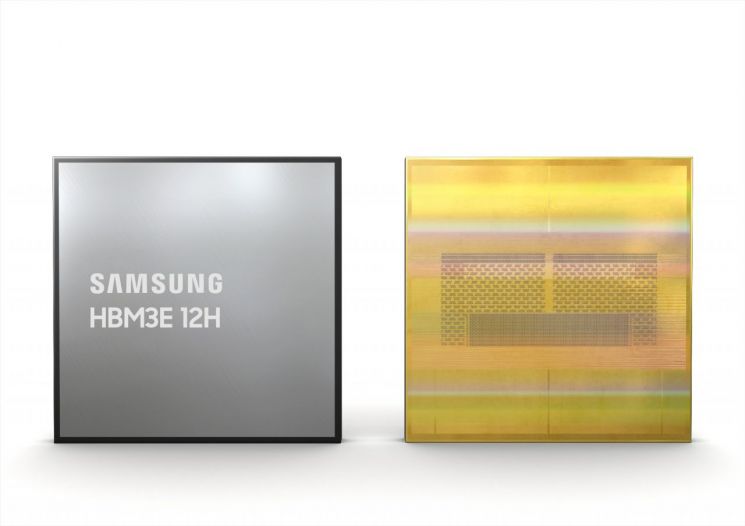

36GB capacity 12-stack HBM3E product developed by Samsung Electronics / Photo by Samsung Electronics

On the 27th, Samsung Electronics announced that it had developed the 36GB HBM3E 12H (12-layer stack), the industry's largest-capacity HBM, by stacking twelve 24Gb (3GB) DRAM chips.

HBM is a high-performance, high-capacity DRAM made by stacking multiple DRAM chips vertically, drilling microscopic holes through them, and connecting the stacked chips with electrodes. Because it is used alongside graphics processing units (GPUs), it is often called memory for artificial intelligence (AI).

Samsung Electronics achieved an industry-minimum chip spacing of 7㎛ (1㎛ = one-millionth of a meter) when stacking the DRAM chips, which raises integration density and keeps the 12H product the same height as the 8H product. The new product offers a maximum bandwidth of 1,280GB per second and a capacity of 36GB, the largest available to date, with both performance and capacity improved by more than 50% over the previous generation (HBM3 8H). At 1,280GB per second, it can download about 40 UHD movies of 30GB each in one second.
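The capacity and bandwidth figures quoted above follow from simple arithmetic. The short Python sketch below reproduces them for illustration. The function names are hypothetical, and it assumes decimal units (1GB = 8Gb) and that "downloading" means a raw transfer at peak bandwidth with no overhead.

```python
BITS_PER_BYTE = 8


def die_capacity_gb(die_gbit: float) -> float:
    """Convert a DRAM die density in gigabits (Gb) to gigabytes (GB)."""
    return die_gbit / BITS_PER_BYTE


def stack_capacity_gb(die_gbit: float, layers: int) -> float:
    """Total capacity of a stack built from identical dies."""
    return die_capacity_gb(die_gbit) * layers


def movies_per_second(bandwidth_gb_s: float, movie_gb: float) -> float:
    """Number of files of a given size that fit in one second of peak bandwidth."""
    return bandwidth_gb_s / movie_gb


if __name__ == "__main__":
    # Figures taken from the article: 24Gb dies, 12 layers, 1,280GB/s, 30GB movies.
    print(f"12H stack capacity: {stack_capacity_gb(24, 12):.0f} GB")
    print(f"8H stack capacity:  {stack_capacity_gb(24, 8):.0f} GB")
    print(f"30GB movies per second: {movies_per_second(1280, 30):.1f}")
```

Under these assumptions, twelve 24Gb dies give 36GB, eight give 24GB (matching the 8-layer product Micron announced), and 1,280GB per second corresponds to roughly 42 movies of 30GB each, which the article rounds to "about 40."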

Samsung Electronics expects the HBM3E 12H product to benefit a wide range of companies that use AI platforms. Applied to server systems, HBM3E 12H can speed up AI training by an average of 34% compared with HBM3 8H, and it is expected to support up to 11.5 times more AI user services.

With Samsung Electronics' success in developing HBM3E 12H, competition in the HBM3E market is expected to intensify. On the 26th (local time), Micron announced that it had begun mass production of its 8-layer HBM3E product and said it will be installed in Nvidia H200 GPUs in the second quarter. Samsung Electronics, for its part, recently provided 12-layer samples to customers and plans to mass-produce the product within the first half of the year.

Byoung-chul Bae, Vice President and Head of Product Planning at Samsung Electronics' Memory Business Division, said, "We are striving to develop innovative products that meet the high-capacity solution demands of customers providing AI services," adding, "We will lead and pioneer the high-capacity HBM market going forward."

© The Asia Business Daily(www.asiae.co.kr). All rights reserved.
