Girl Group Photos Available for Anyone to Download

Potential Misuse for Profit Generation and Pornographic Content Production

Agency "Considering Legal Action Against Unauthorized Use"

AI models trained on large numbers of photos of K-pop girl group members, used without permission, are being distributed online. Amid concerns that they could be exploited for profit or to produce obscene material, agencies are considering countermeasures.

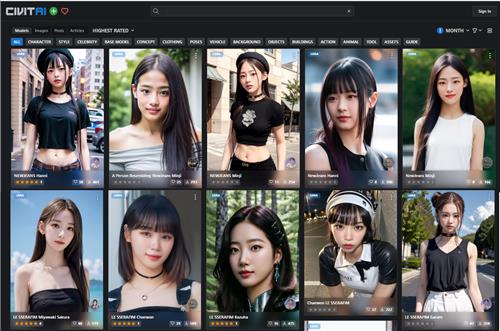

According to industry and legal sources on the 27th, the generative AI model sharing site CivitAI hosts numerous models trained on images of members of popular K-pop girl groups such as NewJeans, LE SSERAFIM, IVE, aespa, and TWICE.

AI model created using stolen images of K-pop girl group members. [Photo from AI model sharing site CivitAI]

The posted files are AI models that can generate deepfake images when applied to 'Stable Diffusion,' the image synthesis AI that Stability AI open-sourced last year.

Stable Diffusion's models are broadly divided into a core model called 'checkpoint,' which determines the overall artistic style, and an auxiliary model called 'LoRA' (Low-Rank Adaptation), which determines facial features and poses of characters.

Of the two, LoRA is the type mainly used to create deepfakes of real people. A LoRA file is only tens to hundreds of megabytes (MB) in size and relatively simple to produce, so it spreads readily through sites like CivitAI and online communities.

Unlike high-performance conversational AIs such as ChatGPT, Stable Diffusion can run offline on an ordinary personal computer.

Even people without programming knowledge can easily use and misuse it

Recently, an interface called 'WebUI' has emerged that lets even people without programming skills use Stable Diffusion easily. With a PC equipped with a budget GPU costing several hundred thousand won and some basic knowledge, anyone can download an AI model with a single click and create high-resolution composite images of idols at home within 1 to 2 minutes.

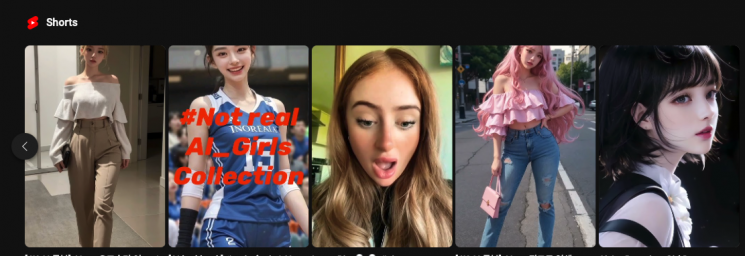

The problem is that such AI models can be exploited for profit or to produce obscene material. On YouTube, 'AI lookbook' content showcasing images of women generated by image synthesis AI is thriving, and some users are selling AI-generated pornography on the creator-sponsorship platform Patreon.

The K-pop industry is also considering countermeasures against the distribution of AI models using images of their affiliated artists.

A representative from a major domestic entertainment agency stated, "We are aware of the situation," adding, "If images produced by generative AI infringe on the artist's honor or portrait rights, we will request the deletion of the relevant works and consider legal action if necessary."

Meanwhile, creating works with AI models that infringe on the portrait rights of celebrities or influencers and profiting from them can result in civil and criminal liability.

According to Article 2 of the Unfair Competition Prevention Act, "Acts that infringe on another's economic interests by unauthorized use of another's name, portrait, voice, signature, or other identifiable marks widely recognized domestically and having economic value" are considered unfair competition acts.

Article 14-2, Paragraph 2 of the Sexual Violence Punishment Act also punishes those who distribute "photographs, videos, or audio recordings based on a person's face, body, or voice" and related "edited, synthesized, processed, or duplicated materials" against the person's will with imprisonment of up to five years or a fine of up to 50 million won.

© The Asia Business Daily(www.asiae.co.kr). All rights reserved.
