Significant Progress by Google, MS, Nvidia, etc.

Naver, Kakao, LG Join In

[Asia Economy Reporter Seungjin Lee] Global companies are fiercely competing to dominate the super-large artificial intelligence (AI) market.

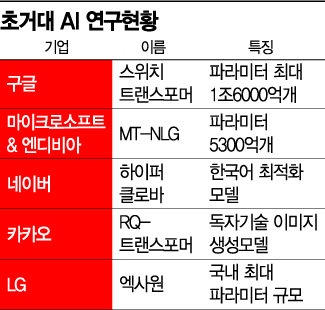

At the center of super-large AI development is Google. Having proven its AI capabilities with 'AlphaGo,' the Go-playing AI that defeated 9-dan professional Lee Sedol, Google introduced the 'Switch Transformer' in January last year, a model with one of the largest parameter counts in the world. Although it has been released only in source code form, it has 1.6 trillion parameters. Generally, the more parameters an AI model has, the more complex the patterns it can learn; by this measure, the model is considered thousands of times more advanced than AlphaGo.

Last month, at its annual developer conference, Google also unveiled 'LaMDA 2,' the next-generation version of its super-large AI language model 'LaMDA.' In a demonstration, when given the prompt "Imagine you are deep under the sea," LaMDA 2 described an ocean scene and carried on a natural conversation. Unlike previous models that only answered questions, LaMDA 2 can also ask users questions of its own and lead the dialogue naturally.

In October last year, Microsoft and NVIDIA unveiled the language model MT-NLG (Megatron-Turing Natural Language Generation), which has 530 billion parameters and can perform document summarization, automatic dialogue generation, translation, semantic search, and code auto-completion.

In South Korea, competition to develop super-large AI is just as intense. Naver's HyperCLOVA, optimized for the Korean language, has 204 billion parameters and gained global recognition when its research paper was accepted to the main track of EMNLP 2021, one of the most prestigious conferences in natural language processing.

Kakao, through its AI research subsidiary Kakao Brain, introduced a new super-large AI model called 'RQ-Transformer,' which takes English text as input and generates matching images. It is the largest publicly available image generation model in South Korea.

LG has partnered with Google to broaden the use of its super-large AI, 'ExaOne.' LG has formed an 'Expert AI Alliance' with partners from various fields, including LG AI Research Institute, LG Electronics, and Google. ExaOne currently has 300 billion parameters, and LG plans to increase this to 600 billion within the year and eventually to the trillion scale. LG aims to build a super-large AI that can be applied across virtually every sector, including research, education, and finance.

© The Asia Business Daily (www.asiae.co.kr). All rights reserved.