Usage Surges During October University Midterms

Both Students and Instructors See Increased Use Year-on-Year

Shin Dongho: "Oral and Interview-Based Assessments Needed"

As universities uncover cases of academic misconduct involving generative artificial intelligence (AI), use of services that detect AI-generated documents has surged.

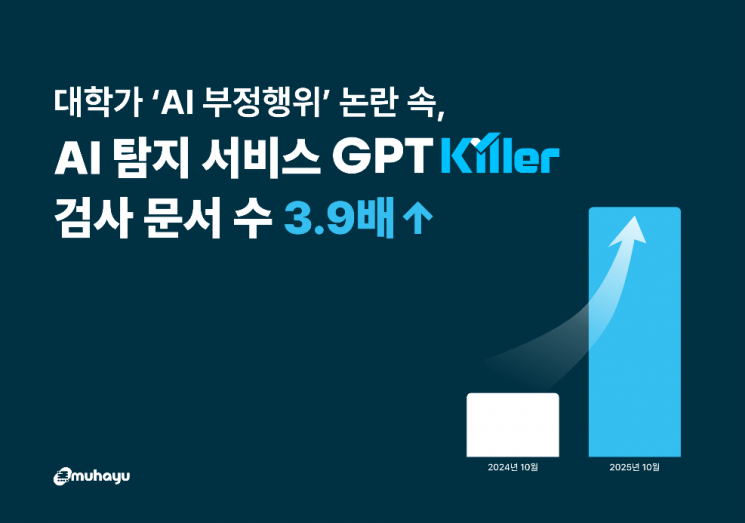

AI specialist company Muhayu announced on the 14th that, according to its analysis of usage statistics for its AI-generated content detection solution "GPT Killer," the number of GPT Killer checks in October, the second-semester midterm exam period at universities, was 3.9 times the number for the same period last year.

Muhayu's GPT Killer determines with 98% accuracy whether a document was generated by AI, and it is integrated into the plagiarism detection service "Copy Killer." For universities, it is offered as "Copy Killer Campus," which students use for self-verification, and as "CK Bridge," which instructors use for evaluation.

In Copy Killer Campus, which students use for self-verification, the number of documents checked with the GPT Killer function rose from about 177,000 in the same period last year to about 647,000, approximately 3.6 times as many. This suggests that as students' use of AI increases, so does their need to verify their own work before submission.

The number of documents checked by instructors for evaluation also rose, from about 101,000 to about 437,000, roughly 4.3 times the previous year's figure. This indicates that instructors are becoming more aware of students' use of AI and are increasingly using both Copy Killer and GPT Killer when reviewing assignments.
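As a rough check on the multiples quoted above, the short sketch below recomputes them from the rounded document counts given in the article. The variable and function names are illustrative, and treating the overall 3.9-times figure as the student and instructor channels combined is an assumption, not something the company states. Because the published counts are rounded, the results may differ slightly from the reported multiples.

```python
# Rounded document counts as reported in the article (last year -> this year).
student_prev, student_now = 177_000, 647_000        # Copy Killer Campus
instructor_prev, instructor_now = 101_000, 437_000  # CK Bridge


def growth(prev: int, now: int) -> float:
    """Return how many times larger `now` is than `prev`."""
    return now / prev


student_ratio = growth(student_prev, student_now)
instructor_ratio = growth(instructor_prev, instructor_now)

# Assumption: the overall figure is the two channels added together.
combined_ratio = growth(
    student_prev + instructor_prev,
    student_now + instructor_now,
)

print(f"students:    {student_ratio:.2f}x")
print(f"instructors: {instructor_ratio:.2f}x")
print(f"combined:    {combined_ratio:.2f}x")
```

Under that assumption, the combined ratio rounds to the 3.9 times cited for overall GPT Killer checks, and the instructor ratio rounds to the reported 4.3 times.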

Students' pre-submission self-verification has also contributed to lower plagiarism rates in the work they actually submit. During this year's second-semester midterm period in October, 40% of documents checked in Copy Killer Campus had a plagiarism rate of 50% or higher, whereas in CK Bridge, the actual submission system, the figure was only 20.6%, about half. This suggests that students often draft their work with reference to AI or existing materials, then revise and supplement it after self-verification.

Major universities have recently uncovered a series of group cheating incidents involving AI. Generative AI has been used not only in remote exams but also in in-person exams, fueling concerns about the fairness of assessment in educational settings.

Shin Dongho, CEO of Muhayu, said, "Three approaches must be implemented together: transparent AI usage policies, ethics education, and the establishment of verification infrastructure." He added, "Rather than banning the use of AI outright, it is important to create source-attribution guidelines that require students to specify which parts were assisted by AI and which are their own original contributions." He also noted, "New evaluation methods, such as oral or interview-based assessments that allow instructors to directly assess students' thought processes and problem-solving abilities, are also necessary."

© The Asia Business Daily (www.asiae.co.kr). All rights reserved.