Professor Kyungdon Joo's Team Develops Technology to Reconstruct 3D Dog Avatars from a Single Image

Accuracy Enhanced with an Animal-Specific Statistical Model and Generative AI, Published in Int. J. Comput. Vis.

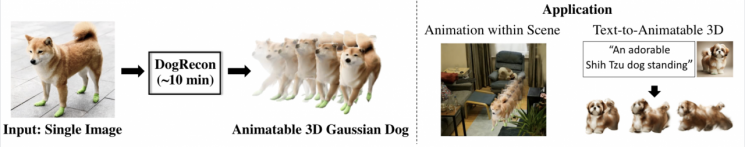

An artificial intelligence technology has been developed that can reconstruct a dog's three-dimensional shape and even create animations from just a single photograph.

An avatar that looks just like your pet dog can now appear in virtual reality (VR), augmented reality (AR), and the metaverse.

On July 24, a team led by Professor Kyungdon Joo at the UNIST Graduate School of Artificial Intelligence announced the development of an AI model called 'DogRecon,' which can generate a three-dimensional (3D) avatar of a dog capable of movement from just one photograph.

Research team (from bottom left, counterclockwise): Professor Kyungdon Joo, Researcher Kyungsu Cho (first author), Researcher Donghyun Soon, Researcher Changwoo Kang. Provided by UNIST


Dogs are challenging animals for 3D reconstruction because their body shapes vary by breed, and their joints are often obscured due to their quadrupedal posture. In particular, reconstructing a three-dimensional shape from a single two-dimensional photograph often leads to inaccuracies or distortions in certain parts due to insufficient information.

DogRecon applies a dog-specific statistical model to accurately capture differences in body shape and posture by breed. It also uses generative AI to automatically create images from various angles, allowing it to realistically reconstruct obscured parts. In addition, by utilizing a Gaussian splatting model, it precisely reproduces the dog's curved physique and the texture of its fur.
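For readers curious about the rendering side, here is a minimal sketch of the front-to-back alpha blending that Gaussian splatting renderers generally use to turn overlapping semi-transparent Gaussians into a pixel color. It is an illustration only, not DogRecon's code: the function name `composite_front_to_back` and the input format (a depth-sorted list of color and opacity pairs for one pixel) are assumptions made for this example.

```python
from typing import List, Tuple

Color = Tuple[float, float, float]


def composite_front_to_back(splats: List[Tuple[Color, float]]) -> Color:
    """Blend depth-sorted (color, alpha) contributions for a single pixel.

    Hypothetical helper for illustration; `splats` must be ordered from the
    nearest Gaussian to the farthest, with alpha in [0, 1].
    """
    r = g = b = 0.0
    transmittance = 1.0  # fraction of light not yet blocked by nearer splats
    for (cr, cg, cb), alpha in splats:
        weight = alpha * transmittance  # contribution of this splat
        r += weight * cr
        g += weight * cg
        b += weight * cb
        transmittance *= 1.0 - alpha
        if transmittance < 1e-4:  # pixel is effectively opaque, stop early
            break
    return (r, g, b)


# A half-transparent red splat in front of an opaque blue one.
pixel = composite_front_to_back([((1.0, 0.0, 0.0), 0.5), ((0.0, 0.0, 1.0), 1.0)])
print(pixel)
```

Because many small, soft-edged contributions are blended per pixel, this style of rendering is well suited to fine, fuzzy detail such as fur.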

In performance tests using datasets, DogRecon was able to generate a natural and accurate 3D avatar of a dog from just one photograph, achieving results comparable to those of existing video-based technologies. Previous models often produced unrealistic representations, such as a dog's torso appearing stretched when its legs were bent, awkwardly positioned joints, or ears, tails, and fur clumping together in ways that did not match the real appearance.

DogRecon also proved readily extensible to applications such as VR and AR, including 'text-based animation generation,' which allows users to animate the avatar simply by entering text commands.

Technology for generating animatable three-dimensional avatars and its application areas.


This research was led by Researcher Kyungsu Cho at UNIST as the first author, with Researcher Changwoo Kang (UNIST) and Researcher Donghyun Soon (DGIST) as co-authors. Kyungsu Cho stated, "As more than a quarter of all households now have companion animals, I wanted to expand 3D reconstruction technology, which has so far been developed mainly for humans, to include pets as well. DogRecon will be a tool that allows anyone to create and animate their own pet dog in digital spaces."

Professor Kyungdon Joo said, "This research is a meaningful achievement in that it combines generative AI and 3D reconstruction technology to create pet models that closely resemble reality. We look forward to expanding this technology to various animals and personalized avatars in the future."

This research was published on June 2 in the International Journal of Computer Vision, a leading journal in the field of computer vision. It was carried out with support from the Ministry of Science and ICT and the Institute of Information & Communications Technology Planning & Evaluation (IITP) through the 'Development of AI Technology for Inferring and Understanding New Facts Based on Everyday Common Sense' project and the 'UNIST Graduate School of Artificial Intelligence Support Project.'

The paper and demonstration videos are available on the project website.

© The Asia Business Daily(www.asiae.co.kr). All rights reserved.
