NC AI announced on July 16 that it will release four versions of its Korean-based multimodal artificial intelligence (AI) model, VARCO VISION 2.0, as open source.

VARCO VISION 2.0 is an AI model capable of understanding both images and text to answer questions. It can analyze multiple images simultaneously and process complex documents, tables, and charts. The model can understand both Korean and English, and its text generation capabilities and understanding of Korean culture have been enhanced.

NC AI's Korean-based multimodal artificial intelligence (AI) model VARCO VISION 2.0. Provided by NC AI

The four open-source models are 14B, 1.7B, 1.7B OCR, and Video Embedding. Of these, the 14B and Embedding models were released on July 16, while the 1.7B and OCR (Optical Character Recognition) models are scheduled for release next week.

NC AI is offering both a 14B (14 billion parameters) model and a lightweight 1.7B (1.7 billion parameters) model. The 14B model is optimized for environments requiring complex multi-image analysis and advanced reasoning. The lightweight 1.7B model is designed to run on personal devices such as smartphones and PCs.

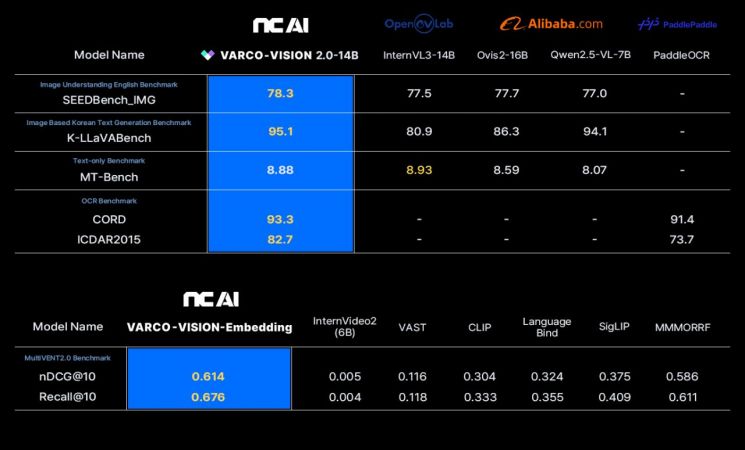

NC AI emphasized that the 14B model outperformed comparable multimodal models such as InternVL3-14B, Ovis2-16B, and Qwen2.5-VL-7B in performance tests including English image understanding, Korean image understanding, and OCR benchmarks.

The company is also releasing VARCO-VISION-1.7B-OCR, a model specialized in optical character recognition that identifies text within images. Unlike conventional OCR models, it adopts a VLM-based approach that simultaneously learns image and language information.

The multimodal embedding model 'VARCO VISION Embedding' calculates the similarity between text, images, and videos in a high-dimensional embedding space. Embedding refers to converting content, such as a video, into numerical vectors for storage. By comparing the distance or similarity between these embeddings, it is possible to retrieve highly relevant images or videos.
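
To make the retrieval idea concrete, here is a minimal Python sketch of similarity search over embeddings. It does not call VARCO VISION Embedding. The three-dimensional vectors, the file names, and the `cosine_similarity` and `retrieve` helpers are hypothetical stand-ins chosen for illustration, and cosine similarity is assumed as the measure since the article does not say which one the model uses.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def retrieve(query_vec, index, top_k=2):
    """Return the top_k (name, score) pairs most similar to the query."""
    scored = [(name, cosine_similarity(query_vec, vec)) for name, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Toy embeddings (made-up values); a real model would produce vectors
# with far more dimensions for each stored image or video.
index = {
    "cat_photo.jpg": [0.9, 0.1, 0.0],
    "dog_clip.mp4": [0.7, 0.6, 0.1],
    "invoice_scan.png": [0.0, 0.2, 0.9],
}

# Stand-in for the embedding of a text query.
query = [1.0, 0.0, 0.0]

results = retrieve(query, index)
for name, score in results:
    print(f"{name}: {score:.3f}")
```

In this sketch the stored items whose vectors point in nearly the same direction as the query rank first, which is the sense in which "closer" embeddings are treated as more relevant.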

According to NC AI, these four newly released models can be utilized in a wide range of fields including finance, education, culture, shopping, and manufacturing.

NC AI plans to contribute to the government's initiative to strengthen 'Sovereign AI' by releasing these four multimodal AI models. Lee Yeonsu, CEO of NC AI, stated, "With technological advancement, the global trend is shifting from language models that only process text to vision-language models that also utilize vision models. Through the release of these four models, NC AI, which already leads domestic multimodal AI in verticals such as media, gaming, and fashion, has confirmed the potential to safeguard Korea's sovereignty in the field of vision-language models as well."

© The Asia Business Daily(www.asiae.co.kr). All rights reserved.