FBI warns of scams impersonating high-ranking U.S. officials

$5 billion in losses across the U.S. last year

Celebrity impersonation scams induce fraudulent money transfers

The Federal Bureau of Investigation (FBI) has warned U.S. government employees about the growing threat of scam voice messages generated with artificial intelligence (AI) to impersonate high-ranking officials. In the United States alone, losses from AI-related scams reached an estimated $5 billion (about 7 trillion won) last year, and the number of victims has risen sharply not only among the elderly but also among younger generations. As AI deepfake technology becomes more sophisticated, scammers have even created fake videos of celebrities to trick fans out of money.

FBI warns: "Beware of voice messages impersonating high-ranking U.S. officials"

According to CNN, on May 15 (local time), the FBI warned that since April, scammers have been using AI-generated voice messages to impersonate high-ranking U.S. officials. The agency also noted that hacking attempts on government accounts are ongoing and urged U.S. government employees to remain vigilant. Unidentified hackers are reportedly attempting to contact U.S. government officials and their acquaintances.

The FBI explained, "Hackers first impersonate high-ranking officials and repeatedly send text messages and AI-generated voice messages to build trust. They then encourage the target to communicate on a separate platform and send malicious links." The agency added, "Anyone who clicks on these links risks exposing their personal information. The hackers then use the victim's contact information to target other government officials, their associates, or acquaintances, leading to further spread of the scam."

In April 2024, IT departments at the U.S. Department of Health and Human Services (HHS) were targeted by a cyberattack involving fake AI voice messages. Earlier, in January 2024, ahead of the New Hampshire presidential primary, a deepfake robocall mimicking the voice of then-President Joe Biden spread widely, sparking controversy.

Scams impact all generations, from the young to the elderly... $5 billion in losses in the U.S.

According to the FBI, losses from AI-related scams across the United States last year were estimated at $5 billion (about 7 trillion won). As scams that impersonate government officials or business leaders through AI-generated text messages, voice messages, and videos have multiplied, people of all ages have suffered financial losses. Among those who reported losses, 44% were in their 20s, while 24% were in their 70s.

There has also been a sharp rise in "romance scams," in which scammers pose as romantic partners over social networking services (SNS), voice calls, or video calls in order to steal money. According to statistics released by the U.S. Federal Trade Commission (FTC), romance scam losses in the U.S. alone amounted to $1.3 billion (about 1.8 trillion won). The actual losses are likely higher once unreported cases are included.

Regulators have also begun cracking down on the growing use of AI to create fake reviews and endorsements on online shopping platforms. In August 2024, the FTC announced in a statement, "We are issuing a final rule prohibiting fake consumer reviews, testimonials, or celebrity endorsements based on AI, as well as other types of unfair or deceptive practices." The rule has been in effect since October 2024.

Scams impersonating celebrities on the rise... Tactics becoming more sophisticated

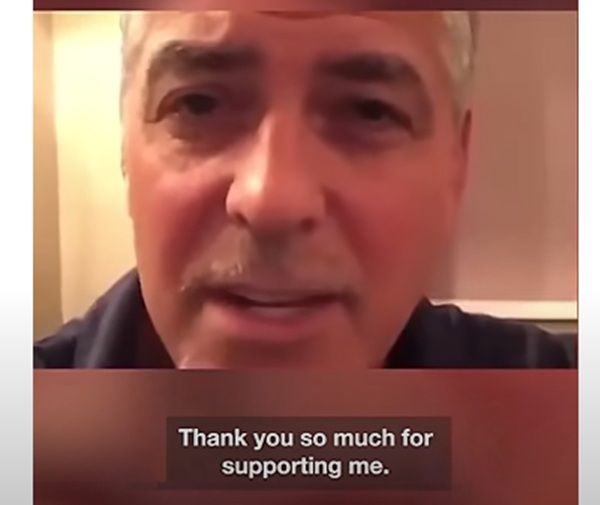

A fake video of George Clooney created with artificial intelligence (AI) deepfake technology. A woman in Argentina was scammed out of 10,000 pounds (about 18.59 million won) after being deceived by the video, the UK Daily Mail reported on the 14th (local time). Image: YouTube

As AI deepfake technology advances, crimes in which scammers use AI to create fake videos of celebrities and defraud their fans are also increasing. Scammers now fabricate not only photos and voice messages but even convincing video calls to manipulate fans' emotions, then induce them to send money or invest, causing financial losses worldwide.

According to the UK Daily Mail on May 14 (local time), a woman in Argentina was scammed out of 10,000 pounds (about 18.59 million won) after being deceived by an AI-generated fake video of Hollywood star George Clooney. Over six weeks, she received fake photos, videos, and voice calls from a hacker posing as Clooney on social media, and believed them to be real. The victim said, "The George Clooney in the video told me he needed money to divorce his wife, and I was deceived into sending the money."

Earlier, in January 2025, a similar AI scam came to light in France. A French woman was scammed out of 830,000 euros (about 1.17 billion won) after being deceived by a fake video of Brad Pitt created with AI. The woman said the Brad Pitt in the video claimed to have kidney cancer and needed money for treatment, so she sent the large sum without suspicion. French police believe the perpetrators are Nigerian hackers.

© The Asia Business Daily(www.asiae.co.kr). All rights reserved.