'Kanana Technical Report' Released: Announcement of AI Model Research Achievements

LLM 'Kanana Flag' Developed: Full Lineup Established

'Kanana Nano' Released as Open Source Model on GitHub

Kakao is using its proprietary artificial intelligence (AI) models to validate its technology and expand its ecosystem. It is releasing the lightweight model from its in-house lineup as open source for anyone to use, with the aim of revitalizing the domestic AI ecosystem.

On the 27th, Kakao announced that it had published a technical report detailing the research outcomes of its self-developed language model 'Kanana' on the preprint repository arXiv. At the same time, it released 'Kanana Nano 2.1B', one of the models in the lineup, as open source on GitHub.

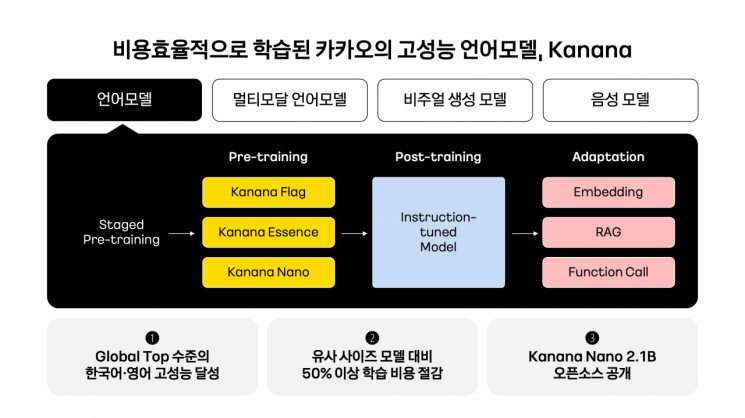

The technical report is an AI paper containing detailed information such as parameters, training methods, and training data. In it, Kakao covers the entire pre-training and post-training process for the Kanana language models. The report also describes the structure of the Kanana models, their training strategies, and their performance on global benchmarks.

Kakao’s large language model (LLM) 'Kanana Flag' completed training at the end of last year. With this, Kakao has built the entire Kanana language model lineup (Flag, Essence, Nano) that was unveiled at the developer conference 'if(kakaoAI)2024' in October last year.

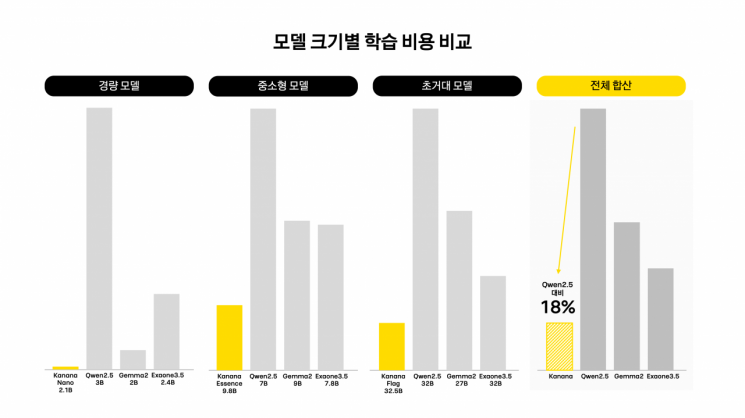

Kakao explained that Kanana Flag demonstrated superior processing capabilities compared to other models on Korean language performance benchmarks such as 'LogicKor' and 'KMMLU'. On English performance benchmarks like 'MT-bench' and 'MMLU', it recorded results comparable to competing models. Additionally, through optimization of training resources, it reduced costs by more than 50% compared to models of similar size.

To improve the training efficiency of its large language models, Kakao applied several techniques: staged pre-training, pruning (trimming model components to retain only the important elements), distillation (transferring knowledge from a large model to a smaller one), and depth up-scaling (DUS). Together, these brought training costs to less than half those of comparable models.
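As an illustration of the distillation idea mentioned above, the following is a minimal sketch in plain Python, not Kakao's implementation. It assumes the common formulation in which a small "student" model is trained to match the temperature-softened output distribution of a larger "teacher"; the function names, the example logits, and the temperature value are hypothetical.

```python
import math


def softmax(logits, temperature=1.0):
    """Convert raw scores to probabilities, softened by a temperature."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL divergence from the teacher's softened distribution to the student's.

    Assumption: this is the textbook soft-target loss, scaled by T^2 so that
    gradient magnitudes stay comparable across temperatures. The report may
    use a different objective.
    """
    p = softmax(teacher_logits, temperature)  # teacher (target) distribution
    q = softmax(student_logits, temperature)  # student (predicted) distribution
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


if __name__ == "__main__":
    teacher = [2.0, 1.0, 0.1]  # hypothetical teacher scores for three tokens
    student = [0.1, 1.0, 2.0]  # hypothetical student scores for the same tokens
    print(distillation_loss(teacher, teacher))  # zero when the student matches
    print(distillation_loss(teacher, student))  # positive when it does not
```

The loss is zero when the student reproduces the teacher's distribution and grows as the two diverge, which is what lets a smaller model absorb a larger model's behavior during training.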

Kakao plans to integrate the latest techniques based on reinforcement learning and continual learning into the Kanana models in the future. It aims to enhance their reasoning, mathematics, and coding abilities, and to advance alignment technology to improve accuracy. Kakao also intends to continue upgrading the models so that they can communicate in various forms such as voice, images, and video.

Furthermore, Kakao has released its self-developed lightweight model 'Kanana Nano 2.1B' as open source on GitHub. Open source means that the programming source code is made public so anyone can utilize it for new development. Accordingly, open source AI reveals its operating principles, design methods, and algorithms. Besides Kanana Nano, DeepSeek’s 'R1' and Meta’s 'LLaMA' are also open source AI models.

The base model, Instruct model, and Embedding model of Kanana Nano 2.1B are publicly available on GitHub. GitHub is an online hosting platform built around Git, a distributed version control system (VCS); it stores program source code online and serves as a kind of cloud service for developers.

This model is a high-performance lightweight model suitable for use by researchers and developers as well as in on-device environments. Kakao explains that, like Kanana Flag, it shows excellent performance in processing both Korean and English.

Kakao plans to continuously support updates to the model so that researchers and developers can apply it in various ways even after this open source release.

Byunghak Kim, Kanana Performance Leader at Kakao, said, "Based on model optimization and lightweight technology, we have efficiently secured a high-performance proprietary language model lineup comparable to global AI models such as LLaMA and Gemma. We expect that this open source release will contribute to revitalizing the domestic AI ecosystem." He added, "We will continue to develop practical and safe AI models focused on efficiency and performance, and strengthen AI competitiveness through continuous technological innovation."

© The Asia Business Daily(www.asiae.co.kr). All rights reserved.