AI Company Website Uses Deceased Person's Face

Experts Express Concerns Over Personal Data Protection Capabilities

An artificial intelligence (AI) chatbot that used the name and photo of a woman murdered 18 years ago in the United States has been discovered, sparking controversy.

On the 15th (local time), The Washington Post reported that, as conversational AI has grown in popularity, cases of real people's personal information being used without authorization are rising rapidly. The paper stressed the need for stronger, more proactive protection of personal information, citing the case of Drew Crescent, whose deceased daughter's photo was recently found being used by a chatbot.

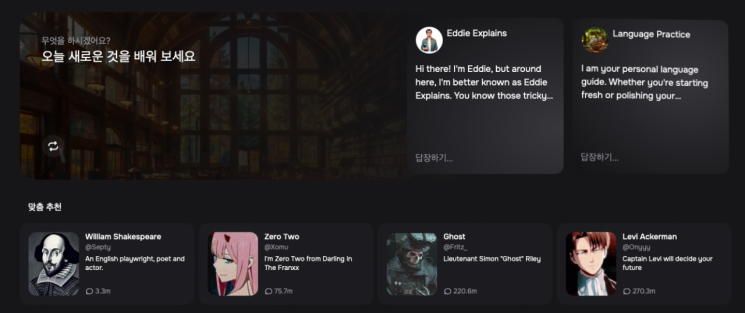

The chatbot was created by someone who copied Jennifer's real name and a graduation photo taken during her lifetime without permission, and it was published on the website of AI startup 'Character.ai'. Founded by former Google developers after they left the company, Character.ai offers AI chatbot technology that lets users converse not only with chatbots based on real people but also with virtual characters, including those from animated films. [Photo by Character.ai homepage]

According to the report, Crescent, who lives in Atlanta, Georgia, discovered on the 2nd that an AI chatbot using the name and photo of Jennifer, Crescent's late daughter, had appeared in the recommendations. Jennifer was shot and killed by her ex-boyfriend in February 2006, at the age of 18. Yet nearly two decades after her death, the chatbot presented her as a "video game journalist and expert in technology, pop culture, and journalism."

The chatbot was posted on the website of AI startup 'Character.ai' by someone who had copied Jennifer's real name and a graduation photo taken during her lifetime without permission. 'Character.ai' was co-founded by former Google developers after they left the company, and its technology lets users converse not only with chatbots modeled on real people but also with characters from cartoons and other fictional figures. Users can create chatbots themselves by uploading photos, voice recordings, or short texts. This chatbot was open to conversations with anyone, and as the controversy spread, the company deleted it after receiving a report from the bereaved family.

Regarding the incident, The Washington Post noted that turning crime victims like Jennifer into chatbots without authorization can deeply shock bereaved families, adding, "Among experts, concerns are growing about whether the AI industry, which handles personal information, has the ability and willingness to protect individuals."

In the United States, citizens filed a class-action lawsuit in June last year against OpenAI, accusing it of collecting and using personal information online without permission, including for ChatGPT, and the case is ongoing. Italy also blocked access to ChatGPT in March 2023, saying that OpenAI's mass collection and storage of personal information for training purposes violated data protection laws. [Photo by Pixabay]

Meanwhile, in the United States, a class-action lawsuit was filed last June against OpenAI, accusing it of collecting and using personal information online without authorization, including for ChatGPT, and the case is ongoing. Italy blocked access to ChatGPT in March 2023, arguing that OpenAI's mass collection and storage of personal information for training purposes violated privacy laws. Access was restored after OpenAI implemented corrective measures, but in January of this year Italian regulators found further privacy violations and again demanded corrective action.

In South Korea as well, the Personal Information Protection Commission inspected about ten domestic and foreign companies operating AI services, including OpenAI and Naver, earlier this year. The inspection, launched in the second half of last year, examined potential privacy violations linked to the rapid spread of AI technologies.

© The Asia Business Daily(www.asiae.co.kr). All rights reserved.
