Early-Stage Deepfake Detection Technology

Challenge to Narrow the Gap with Generation Technology

Deepfake generation using artificial intelligence (AI) is advancing faster every day, while detection technology lags behind. As generation grows more sophisticated, industry experts estimate that 2 out of 10 deepfakes are hard to catch even with detection tools. Narrowing the gap between generation and detection has become a key challenge.

Deepfake Detection Rate at 80%... The Rest Are Blind Spots

According to IT industry sources on the 3rd, AI-based filters currently detect about 80% of deepfakes. In other words, the remaining 20% slip past AI detection, because deepfake development is outpacing detection technology.

The gap is also evident in the scale of the two markets. According to market research firm Fortune Business Insights, the global deepfake market is expected to grow from $6.26 billion (approximately 8.38 trillion KRW) last year to $38.44 billion (approximately 51.43 trillion KRW) by 2032, with demand rising mainly in the entertainment, media, and e-commerce sectors. By contrast, the deepfake video detection market is expected to reach only $7.32 billion (approximately 9.8 trillion KRW) by 2030, which shows how much larger the demand for deepfakes is.

One reason for the technological gap is that both original and manipulated data are required to detect manipulation. Also, when training AI models with original and manipulated data, the data must be processed into a form that the model can understand. While generating a simple deepfake image takes 1 to 2 minutes, detection usually requires about 5 to 10 minutes.
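The training step described above can be illustrated with a minimal sketch. It assumes each image has already been reduced to two hypothetical numeric features (`edge_blur` and `color_mismatch`, invented here for illustration) and fits a plain logistic regression on labeled original and manipulated samples. Real detectors use deep networks on far larger datasets; this only shows why both kinds of data are needed.

```python
import math

def train_logistic(samples, labels, epochs=2000, lr=0.5):
    """Fit weights and bias by batch gradient descent on the log loss."""
    n_features = len(samples[0])
    weights = [0.0] * n_features
    bias = 0.0
    m = len(samples)
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for x, y in zip(samples, labels):
            z = sum(w * xi for w, xi in zip(weights, x)) + bias
            err = 1.0 / (1.0 + math.exp(-z)) - y  # predicted probability minus label
            for i, xi in enumerate(x):
                grad_w[i] += err * xi
            grad_b += err
        weights = [w - lr * g / m for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / m
    return weights, bias

def predict_fake(weights, bias, x):
    """Return True when the model scores the sample as manipulated."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1.0 / (1.0 + math.exp(-z)) >= 0.5

# Hypothetical feature vectors [edge_blur, color_mismatch]; label 1 = manipulated
originals = [[0.10, 0.05], [0.15, 0.10], [0.20, 0.08]]
manipulated = [[0.80, 0.70], [0.75, 0.90], [0.90, 0.85]]
samples = originals + manipulated
labels = [0, 0, 0, 1, 1, 1]

weights, bias = train_logistic(samples, labels)
```

Without the manipulated half of the dataset the model has nothing to contrast the originals against, which is one reason securing training data is a bottleneck.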

The emergence of new deepfake techniques is another obstacle. If deepfake images are deliberately contaminated to make detection difficult, or are created with a different method, new detection technology must be developed in response. Lee Yoohyun, a researcher on DeepBrain AI’s deep learning team, said, "Because there is inevitably a gap between deepfake generation and detection technologies, sufficient training data must be secured to reduce the gap even slightly."

The Ministry of Science and ICT and the Institute for Information & Communications Technology Planning & Evaluation (IITP) are pursuing countermeasures against the harmful effects of generative AI, including deepfakes, as national R&D projects. Companies including Sands Lab, LG Uplus, and FortyTwoMaru, which won the contract, plan to combine detection technology with a small large language model (sLLM) by 2027 so that detection and response can be handled in natural language.

AI Catching AI... Detection Technology ‘In Pursuit’

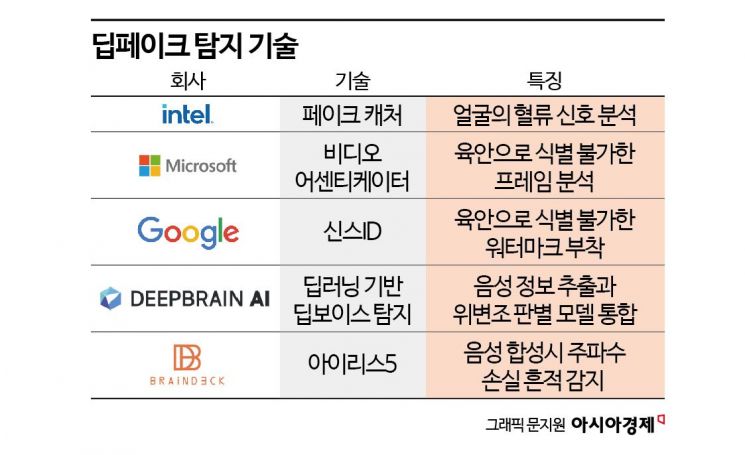

Fundamentally, deepfake detection works by feeding both original and manipulated data into an AI model so that it learns the subtle differences between them. In image synthesis, when a new face is overlaid on an original photo, the contours of the face may distort or subtle color differences may appear, and the AI learns to detect these. Microsoft’s detection tool, ‘Video Authenticator,’ analyzes video frame by frame for cues invisible to the naked eye, judging authenticity by the degree of blurriness along body boundaries such as the jawline.
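The boundary-blur cue can be sketched in a few lines. This is not Microsoft's implementation; it is a toy example that assumes a grayscale image stored as a list of rows and a hypothetical list of boundary pixel coordinates, and it measures the mean gradient magnitude along that boundary. A blended seam tends to score lower than a crisp natural edge.

```python
def boundary_sharpness(image, boundary):
    """Mean gradient magnitude (central differences) over the boundary pixels."""
    height, width = len(image), len(image[0])
    total = 0.0
    for r, c in boundary:
        # Clamp neighbor indices so pixels on the image border are handled
        gx = (image[r][min(c + 1, width - 1)] - image[r][max(c - 1, 0)]) / 2.0
        gy = (image[min(r + 1, height - 1)][c] - image[max(r - 1, 0)][c]) / 2.0
        total += (gx * gx + gy * gy) ** 0.5
    return total / len(boundary)

# Toy 4x6 grayscale images: a crisp step edge versus a gradually blended one
sharp = [[0, 0, 0, 255, 255, 255] for _ in range(4)]
blended = [[0, 50, 100, 150, 200, 255] for _ in range(4)]

# Hypothetical boundary: the column where the two regions meet
boundary = [(r, 2) for r in range(4)]

sharp_score = boundary_sharpness(sharp, boundary)
blended_score = boundary_sharpness(blended, boundary)
```

In practice a score like this would be one input among many to a trained model rather than a verdict on its own, since legitimate compression or motion blur also softens edges.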

Detection also draws on fine-grained features such as changes in blood flow. Intel’s deepfake video detection technology, ‘FakeCatcher,’ detects changes in the color of veins on the surface of the face in a video at the millisecond level. The color of a person’s veins changes subtly with each heartbeat, but deepfakes lack this change, and the technology is built on that difference.
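The heartbeat idea can be illustrated with a simplified sketch. It is not Intel's method; it assumes that the mean green-channel value of a face region has already been extracted for each frame (the `green_means` input is hypothetical) and looks for a periodic component in a typical heart-rate band of 0.7 to 4.0 Hz using a directly computed discrete Fourier transform.

```python
import cmath
import math

def dominant_pulse(green_means, fps, lo_hz=0.7, hi_hz=4.0):
    """Return (frequency_hz, amplitude) of the strongest heart-rate-band component."""
    n = len(green_means)
    mean = sum(green_means) / n
    centered = [g - mean for g in green_means]  # remove the constant skin tone
    best_freq, best_amp = 0.0, 0.0
    for k in range(1, n // 2):
        freq = k * fps / n
        if not lo_hz <= freq <= hi_hz:
            continue
        # One bin of the discrete Fourier transform, computed directly
        coeff = sum(x * cmath.exp(-2j * math.pi * k * t / n)
                    for t, x in enumerate(centered))
        amp = 2.0 * abs(coeff) / n
        if amp > best_amp:
            best_freq, best_amp = freq, amp
    return best_freq, best_amp

FPS = 30
# Synthetic 10-second traces: a 1.2 Hz (72 bpm) flicker versus a flat signal
live = [120.0 + 0.5 * math.sin(2 * math.pi * 1.2 * t / FPS) for t in range(300)]
flat = [120.0] * 300

live_freq, live_amp = dominant_pulse(live, FPS)
flat_freq, flat_amp = dominant_pulse(flat, FPS)
```

The synthetic live trace shows a clear peak at 1.2 Hz, while the flat trace shows none. Real footage is much noisier, so a usable detector would need face tracking, filtering, and a learned decision rule on top of this.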

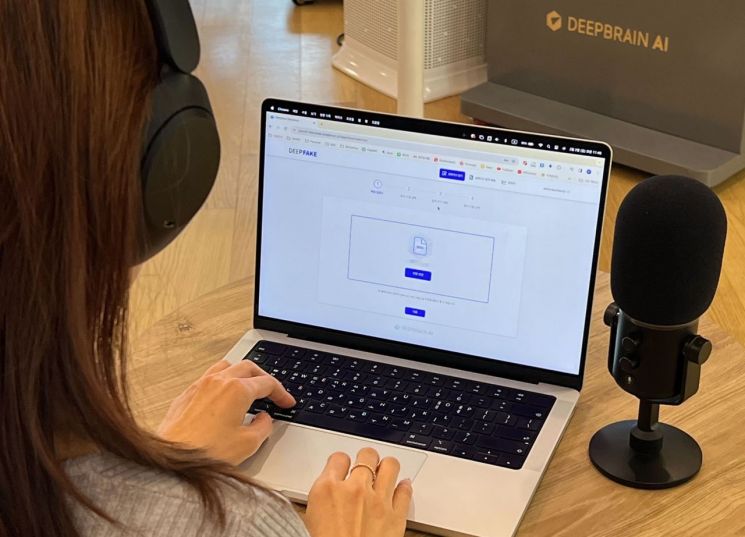

DeepBrain AI filed a patent for deep learning-based deep voice detection technology last February. [Photo by DeepBrain AI]

Another approach is to look for traces of the AI model used to create the deepfake, or to analyze information contained in the deepfake file. This involves identifying whether the file was created with specific software frequently used for deepfakes, or detecting ‘tags’ that mark an image as AI-generated. To prevent deepfake misuse, Google DeepMind developed ‘SynthID,’ which embeds a watermark that is invisible to the naked eye and difficult to edit out or remove.
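Checking a file for an AI-generation tag can be sketched as a simple byte scan. The example assumes the file carries the IPTC Digital Source Type term `trainedAlgorithmicMedia` in its embedded metadata; the sample byte strings are invented for illustration. This does not detect an invisible watermark such as SynthID, which requires the vendor's own detector, and metadata of this kind is easily stripped.

```python
# IPTC Digital Source Type term used to label media created by a trained algorithm.
# Treating its presence in the raw bytes as a signal is an assumption of this sketch.
DEFAULT_MARKERS = (b"trainedAlgorithmicMedia",)

def find_ai_markers(file_bytes, markers=DEFAULT_MARKERS):
    """Return the markers that appear anywhere in the file's raw bytes."""
    return [m.decode("ascii") for m in markers if m in file_bytes]

# Hypothetical file contents for illustration, not real image files
tagged = (
    b"\xff\xd8...<Iptc4xmpExt:DigitalSourceType>"
    b"http://cv.iptc.org/newscodes/digitalsourcetype/trainedAlgorithmicMedia"
    b"</Iptc4xmpExt:DigitalSourceType>..."
)
untagged = b"\xff\xd8...no provenance metadata..."

tagged_hits = find_ai_markers(tagged)
untagged_hits = find_ai_markers(untagged)
```

A missing tag proves nothing about authenticity, so a check like this works only as a quick first pass before model-based detection.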

Domestic companies are also releasing detection technologies. DeepBrain AI, a company specializing in generative AI, has developed technology that identifies AI-manipulated voices and offers it as Software as a Service (SaaS). It combines a voice information extraction model with a tampering detection model to extract the high-frequency voice information needed for detection, because existing models focused on low-frequency voice extraction struggle to detect the high-frequency modulation traces that have emerged recently.

AI voice specialist company Braindec developed the deep voice detection solution ‘Iris-5.’ This technology detects traces of frequency loss that occur during the voice synthesis process. Existing detection models relied on detecting the vocoder (a device that converts human voice or pitch into the pitch of sounds output through electronic instruments) or on acoustic characteristics. Those models were vulnerable to unknown vocoders and sophisticated voice conversion, a weakness the new technology improves on.
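The notion of a frequency-loss trace can be illustrated with a rough sketch. It is not how Iris-5 or any named product works; it assumes a mono signal as a list of samples and a hypothetical cutoff of 4 kHz, and it measures what share of spectral energy sits above that cutoff. A clip that has lost its upper band scores close to zero.

```python
import cmath
import math

def high_band_energy_ratio(samples, sample_rate, cutoff_hz):
    """Fraction of spectral energy at or above cutoff_hz, via a direct DFT."""
    n = len(samples)
    low = high = 0.0
    for k in range(1, n // 2):
        coeff = sum(x * cmath.exp(-2j * math.pi * k * t / n)
                    for t, x in enumerate(samples))
        power = abs(coeff) ** 2
        if k * sample_rate / n >= cutoff_hz:
            high += power
        else:
            low += power
    total = low + high
    return high / total if total else 0.0

RATE = 16000
N = 160  # 10 ms of audio, giving 100 Hz frequency resolution

# Synthetic tones: one keeps a 6 kHz component, the other has lost it
full_band = [math.sin(2 * math.pi * 1000 * t / RATE)
             + 0.5 * math.sin(2 * math.pi * 6000 * t / RATE) for t in range(N)]
band_limited = [math.sin(2 * math.pi * 1000 * t / RATE) for t in range(N)]

full_ratio = high_band_energy_ratio(full_band, RATE, cutoff_hz=4000)
lossy_ratio = high_band_energy_ratio(band_limited, RATE, cutoff_hz=4000)
```

Low high-band energy alone is weak evidence, since phone calls and compressed recordings also lose high frequencies, so a feature like this would feed a trained model instead of serving as a standalone rule.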

© The Asia Business Daily(www.asiae.co.kr). All rights reserved.