AMD Touts Performance in Direct Comparison with Nvidia's H100

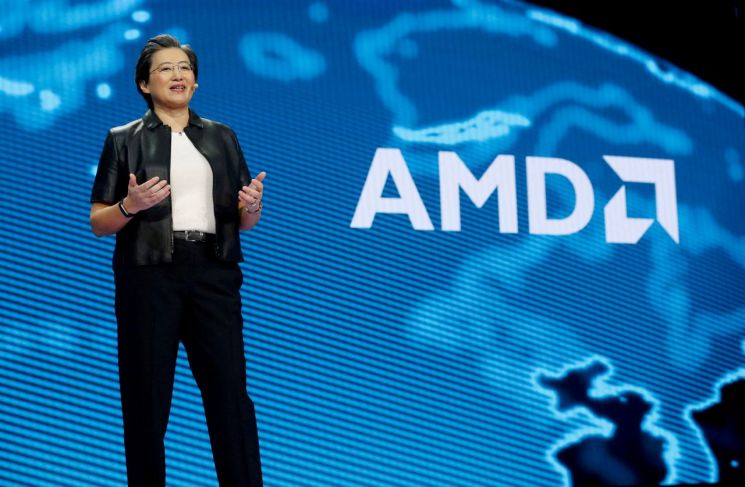

CEO Lisa Su: "AI semiconductor market to grow to 527 trillion won in 4 years"

As semiconductors become increasingly essential to the development of artificial intelligence (AI) technology, AMD officially launched its AI semiconductor series 'Instinct MI300' on the 6th (local time). Positioning itself as a rival to Nvidia, which has dominated the AI semiconductor market, AMD plans to compete by further enhancing GPU processing speed and capabilities.

AMD Directly Compares with Nvidia H100... "MI300X Performance is Superior"

On the same day, AMD held a technology event in San Jose, California, unveiling new AI graphics processing units (GPUs) for servers and PCs: the 'Instinct MI300X' and the 'Instinct MI300A,' a combination of a central processing unit (CPU) and GPU. AMD had previously announced the Instinct MI300 series in June and stated that full-scale release would begin by the end of the year.

Both products are AI semiconductors used for training and operating large language models (LLMs). In particular, the MI300X, a new GPU central to AI technology development, is equipped with 192GB of HBM3 memory, 1.5 times the capacity of the previous MI250X. AMD also highlighted improvements in energy efficiency alongside the larger memory capacity.

AMD emphasized the superior performance of its product by comparing the MI300X with Nvidia's H100, currently the best-selling AI GPU on the market. According to AMD, the MI300X contains more than 150 billion transistors and has approximately 2.4 times the memory capacity of the H100. Additionally, when running Meta Platforms' LLM 'Llama 2,' the MI300X delivered about 1.4 times the inference performance of the H100.

Lisa Su, AMD's Chief Executive Officer (CEO), stated, "As the size and complexity of LLMs continue to increase, vast amounts of memory and computational power are required," adding, "We know that GPU performance is the single most important factor in applying AI." She further emphasized the MI300X as "the world's highest-performing accelerator."

AMD plans to supply the Instinct MI300X GPU to major data centers including Oracle Cloud, Microsoft (MS), and Meta.

In November, MS announced at its annual developer event 'Ignite' that it would offer the 'Azure ND MI300x Virtual Machine Instance,' running on servers equipped with eight Instinct MI300X units. Meta also announced plans to deploy MI300 processors in its data centers, and Oracle explained that it intends to use AMD chips in its cloud services.

AMD's Rapid Growth... AI Semiconductor Industry Expected to Reach 527 Trillion Won in Four Years

AMD is striving to establish itself as a genuine competitor in an AI GPU market that Nvidia dominates. The Wall Street Journal (WSJ) wrote that "AMD is challenging Nvidia's dominance in AI computing."

AMD's strategy is to sell products that outperform Nvidia's at a lower price. AMD did not disclose the selling price of the new products at the event. Instead, noting that Nvidia's AI semiconductors are priced at around $40,000, CEO Su said AMD's semiconductors would need to be cheaper than competitors' products.

AMD's growth in the AI semiconductor market is outpacing that of market leader Nvidia.

In data center semiconductor sales, Nvidia's revenue increased from $6.7 billion in 2020 to $15 billion in 2022, while AMD's rose from $1.7 billion to $6 billion. Although Nvidia's absolute revenue is still more than twice that of AMD, sales grew 123.9% for Nvidia and 252.9% for AMD over this period, indicating AMD's faster growth.
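The growth rates quoted above follow from the usual percentage-change formula, (end - start) / start × 100. The short sketch below reproduces them from the revenue figures in the article; the function and variable names are illustrative assumptions, not anything published by AMD or Nvidia.

```python
def growth_rate_percent(start: float, end: float) -> float:
    """Percentage change from start to end."""
    return (end - start) / start * 100


# Data center revenue in billions of USD (2020, 2022), as quoted in the article.
revenue = {
    "Nvidia": (6.7, 15.0),
    "AMD": (1.7, 6.0),
}

# Growth rate per company, rounded to one decimal place like the article's figures.
growth = {
    name: round(growth_rate_percent(start, end), 1)
    for name, (start, end) in revenue.items()
}

# How many times larger Nvidia's 2022 revenue is than AMD's.
revenue_ratio_2022 = revenue["Nvidia"][1] / revenue["AMD"][1]

for name, rate in growth.items():
    print(f"{name}: {rate}% growth from 2020 to 2022")
print(f"Nvidia/AMD 2022 revenue ratio: {revenue_ratio_2022:.1f}x")
```

The calculation also shows why a higher growth rate does not mean a smaller gap: AMD started from a much smaller base, so its percentage growth is larger even though Nvidia added more revenue in absolute terms.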

At the event, CEO Su forecast that the AI semiconductor industry could grow to over $400 billion by 2027. That is more than double the $150 billion estimate the company gave as recently as August, a revision made within just four months. AMD expects accelerator sales to exceed $2 billion in 2024.
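As a rough consistency check, the 527 trillion won figure in the headline and the $400 billion forecast can be reconciled with simple arithmetic. The sketch below derives the exchange rate implied by those two numbers and the size of the forecast revision; the implied rate is only a back-calculation from the article's figures, not an official rate, and the variable names are assumptions for illustration.

```python
# Forecast figures as quoted in the article.
forecast_usd = 400 * 10**9        # $400 billion (2027 forecast)
forecast_krw = 527 * 10**12       # 527 trillion won (headline figure)
previous_forecast_usd = 150 * 10**9  # $150 billion (August estimate)

# Exchange rate implied by the two quoted figures (won per US dollar).
implied_krw_per_usd = forecast_krw / forecast_usd

# How many times larger the new forecast is than the August estimate.
revision_multiple = forecast_usd / previous_forecast_usd

print(f"Implied exchange rate: {implied_krw_per_usd:,.1f} won per dollar")
print(f"Forecast revised upward by a factor of {revision_multiple:.2f}")
```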

Meanwhile, Nvidia plans to release a new AI GPU, the 'H200,' which is expected to be a 'game changer,' in the second quarter of next year. It is currently projected to deliver about 90% better performance than the H100.

© The Asia Business Daily(www.asiae.co.kr). All rights reserved.
