NVIDIA, the leader in the artificial intelligence (AI) chip market, has unveiled a next-generation AI chip designed to run inference on large language models. A platform that simplifies the development of generative AI is also scheduled to launch soon.

On the 8th (local time), NVIDIA introduced its next-generation AI chip, the 'GH200 Grace Hopper Superchip,' at its annual conference held in Los Angeles, USA. The chip combines the same graphics processing unit (GPU) as the current top-tier H100 with 141GB of cutting-edge memory and a 72-core ARM central processing unit (CPU). Local media noted that although it uses the same GPU as the H100, its memory capacity is about three times larger.

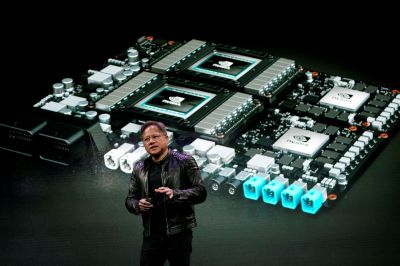

NVIDIA's new AI chip stands out for its larger memory capacity compared with existing chips, which lets it accommodate large-scale AI models. Jensen Huang, NVIDIA's CEO, described it as "designed to scale the size of data centers worldwide." Huang said, "You can load almost any large-scale language model you want. Then it will infer like crazy," adding, "The cost of large-scale language model inference will drop significantly." NVIDIA also announced a system that combines two GH200 chips into a single computer for even larger AI models.

IT news outlet The Verge reported, "NVIDIA, the market leader providing high-end processors for generative AI, is releasing a much more powerful chip as demand for large-scale AI models continues," and said the chip is aimed at "the most complex generative AI workloads across large language models, recommendation systems, and vector databases." Full-scale sales will begin in the second quarter of next year. The price has not been disclosed. The Verge added that the H100 line currently sells for about $40,000.

Along with this, NVIDIA announced 'NVIDIA AI Workbench,' a platform that lets developers create their own generative AI on PCs or workstations. It makes it easy to set up and run generative AI projects using the enterprise-grade models they need. Workbench supports NVIDIA’s own AI platform frameworks, libraries, and SDKs, as well as GitHub, Hugging Face, and others.

NVIDIA currently holds more than 80% of the AI chip market. AMD, Intel, and others seeking to expand their own AI GPU production are trailing behind.

Meanwhile, on the New York stock market today, NVIDIA’s shares are trading more than 1% below the previous close.

© The Asia Business Daily(www.asiae.co.kr). All rights reserved.
