Describing Images in Text... Flexible Tone Switching

Harder to Deceive Than GPT-3.5... Limitations in Latest Information

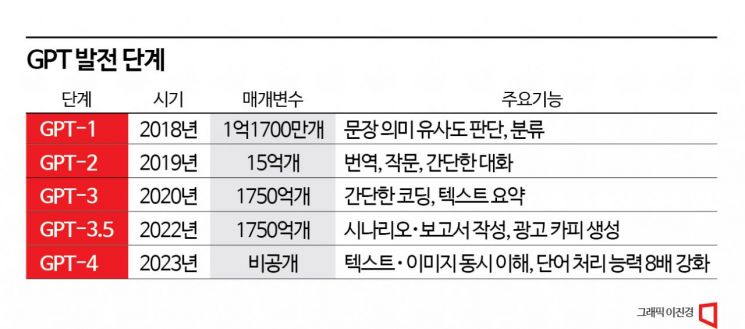

Has a new chapter in the history of artificial intelligence (AI) opened? On the 15th (Korean time), the US AI startup OpenAI unveiled the super-large AI model 'GPT-4'. It has been exactly seven years since Google DeepMind's AI 'AlphaGo' defeated 9-dan Lee Sedol with a 4-1 record on March 15, 2016.

GPT-4 is an all-rounder, in a different league from AlphaGo, which excelled only at the specific task of playing Go. Its language abilities are especially outstanding. It also adds capabilities that were not present in GPT-3.5, released four months ago. GPT-4 is available only to ChatGPT Plus subscribers, who pay $20 per month, and to users of Microsoft's (MS) Bing search engine. We tried it to see how much smarter GPT-4 has become.

The biggest change is that it has developed the ability to see images. Unlike GPT-3.5, which communicated only through text, GPT-4 can understand images as well. The image input feature is still in the testing phase and not yet available for use, but it can be glimpsed in videos and reports released by OpenAI.

For example, if you provide a photo of the inside of a refrigerator and ask, "What can I make with these ingredients?" it recommends a menu. Seeing milk, blueberries, strawberries, carrots, etc., it suggests 'yogurt parfait' and provides the recipe.

It also solves math problems involving graphs or complex formulas with ease, which GPT-3.5 could not do. When given a bar graph showing the average daily meat consumption of various countries in 1997 and asked for the sum of the figures for Georgia and West Asia, it provides the answer. If shown an entire PDF file, it summarizes only the relevant parts or calculates the necessary figures. Reading and processing dozens of pages of documents, a task that would take a person hours, can be completed in seconds. This could significantly improve work productivity.

Its ability to grasp the context of images is striking. It even understands humor, considered a high-level domain of human cognition. It was shown a meme (internet viral content) captioned "How amazing Earth looks from space," in which the picture was actually a world map made of chicken nugget pieces, and asked to explain it. It responded, "The humor of this meme comes from the unexpected juxtaposition of text and image," adding, "The text leads one to expect a majestic image of Earth, but the image is actually ordinary and childish."

Its language skills have also improved over GPT-3.5. When given an article introducing GPT-4 that a reporter had written the previous day and asked to summarize it in five sentences, each starting with 'ㅁ', it completed the task neatly. Its word choices were appropriate and the sentences flowed naturally. It also quickly translated the summary into Italian and then into Ukrainian.

It can freely change tone as well. When asked to write a notification email to a person being laid off, it started with "First, thank you for your efforts." When asked to make the text humorous, it titled it "Time to get off the work rollercoaster" and opened with "Do you like rollercoasters?"

Unlike GPT-3.5, which was good only at English, it also performs well in Korean. It even seemed to play with language. OpenAI revealed that GPT-4 scored 77 points on a Korean-language test used to evaluate language model performance, while GPT-3.5 scored 70.1 points on the English version of the test. Simply put, GPT-4's Korean proficiency is better than GPT-3.5's English proficiency.

As it has become smarter, it has become harder to deceive. When asked about the 'Sejong the Great Mac Pro throwing incident,' a meme that became popular with the GPT-3.5 version, GPT-4 correctly answered that no such event exists in history. GPT-3.5 had confidently given the wrong answer that "Sejong the Great threw a Mac Pro at a staff member in anger while drafting the Hunminjeongeum manuscript." When asked whose territory Dokdo is, or what it thinks of the argument that Korea should develop its own nuclear weapons, it avoided controversial answers and only summarized the pros and cons.

It is still weak on recent information. When asked to explain the management dispute at SM Entertainment, it said it had no specific information. Like GPT-3.5, GPT-4 answers based on data from before September 2021. It is also limited when finding information requires multiple steps. When asked who the AI engineer at Upstage, a domestic AI startup launched in October 2020, is, it could not find an answer. Although the answer can be found through social networking services (SNS) such as LinkedIn, GPT-4 seems unable to access LinkedIn freely.

© The Asia Business Daily (www.asiae.co.kr). All rights reserved.