Ultra-Challenging AI Benchmark Published in Nature

A Total of 2,500 Questions... Korean Researchers Also Contributed

As major artificial intelligence (AI) models worldwide easily pass existing human-designed tests, a new, ultra-challenging benchmark called "Humanity's Last Exam" (HLE), which even these models cannot yet pass, has been released.

Published in the international journal Nature on January 29, HLE is an academic AI exam comprising 2,500 questions spanning more than 100 academic disciplines, including mathematics, science, and the humanities. Korean researchers also participated in creating the exam questions.

HLE is a project first introduced in January last year by the US nonprofit Center for AI Safety (CAIS) and the startup Scale AI. After about a year of validation, it has now been officially published as an academic paper. With recent rapid advancements in AI performance rendering existing benchmarks virtually obsolete, the project was designed to establish a new standard to replace them.

Encompassing Over 100 Disciplines... Only Unsolved Problems Selected

The exam questions cover more than 100 subfields, including mathematics, physics, chemistry, biology, engineering, computer science, and the humanities. Some questions are multimodal, requiring understanding of both text and images. Around 1,000 professors and researchers from over 500 institutions in 50 countries participated in question development. Only problems that even the most advanced AI models at the time of creation could not solve were selected as final questions.

Mathematics accounts for the largest share of all questions at 41%. Many questions require specialized knowledge, such as translating a portion of a Roman inscription found on a tombstone or identifying the number of tendons supported by the sesamoid bone of a hummingbird.

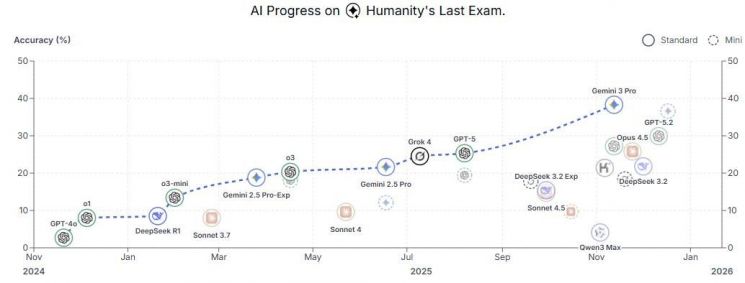

So far, AI models have achieved only low scores on HLE. According to evaluation results released by CAIS, Google's Gemini 3 Pro recorded the highest accuracy at 38.3%, followed by OpenAI's GPT-5.2 at 29.9%, Anthropic's Claude Opus 4.5 at 25.8%, and DeepSeek 3.2 at 21.8%.

Performance by Korean AI models also remains limited. In an evaluation focused only on text-based questions, LG AI Research's EXAONE scored 13.6%, Upstage's Solar Open scored 10.5%, and SK Telecom's A.X K1 scored 7.6%.

Korean Researchers Also Participated in Question Development... "Not a Criterion for AGI"

The paper lists six researchers from Korean institutions, including Haun Park, Chief Technology Officer (CTO) of AIM Intelligence, and Daehyun Kim, professor at Yonsei University. CTO Haun Park explained, "I developed discrete mathematics questions that require complex calculations," adding, "AI models tend to approach the solution similarly, but their final answers differ numerically."

However, the research team cautioned against treating HLE scores as a measure of progress toward artificial general intelligence (AGI). They explained that high scores indicate improvements in expertise and reasoning abilities, but do not mean that AI has reached a stage where it can independently lead new research as a human can.

CTO Park stated, "I do not believe HLE will be the 'final' benchmark," adding, "There are still many benchmarks missing, such as those evaluating AI safety and real-world behavior."

AIM Intelligence is also developing a new benchmark called "The Judgement Day" in collaboration with the Korea Artificial Intelligence Safety Institute (AISI) to assess safe decision-making by AI. The company is working with Google DeepMind, NVIDIA, and Oxford University, and is currently soliciting safety scenarios.

© The Asia Business Daily (www.asiae.co.kr). All rights reserved.
