Boosting Computational Efficiency with Mixture of Experts Architecture

Enhanced Tool-Calling Capabilities for Agentic AI

On January 20, Kakao announced that it has updated 'Kanana-2,' its in-house artificial intelligence (AI) language model, and released four additional models as open source.

Kakao first released Kanana-2 as open source in December of last year via Hugging Face, the global open-source AI platform. About a month later, the company has added four updated models.

Kakao announced that it has updated its next-generation language model 'Kanana-2,' developed based on its own technology, and has additionally released four models as open source. Photo by Kakao

The four new models prioritize high efficiency and low cost. Running high-performance, state-of-the-art AI models typically requires expensive GPU infrastructure, but these models are optimized to run smoothly on general-purpose GPUs such as the NVIDIA A100. This lowers costs and makes them more practical for small and medium-sized businesses as well as academic researchers.

The key to Kanana-2's efficiency is its 'Mixture of Experts (MoE)' architecture. Although the model has 32B (32 billion) parameters in total, only 3B (3 billion) are activated for a given input during inference, which improves computational efficiency. Kakao also developed in-house several kernels essential for training MoE models, increasing training speed and reducing memory usage without any loss in performance.
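The routing idea behind an MoE layer can be sketched in plain Python. This is a generic top-k gating layer, not Kanana-2's implementation: the `ToyMoELayer` class, the expert count, `top_k`, and the dimensions are hypothetical, and real MoE experts are feed-forward networks rather than single matrices. The sketch also works through the article's figures: with 3B of 32B parameters active, each token uses under 10% of the weights, although every expert must still be held in memory (about 64 GB for the weights alone, assuming 2 bytes per parameter and ignoring activations and the KV cache).

```python
import math
import random


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(w, x):
    """Multiply matrix w (a list of rows) by vector x."""
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]


class ToyMoELayer:
    """Toy top-k Mixture-of-Experts layer (hypothetical, illustration only)."""

    def __init__(self, dim, num_experts, top_k, seed=0):
        rng = random.Random(seed)
        self.top_k = top_k
        # Router: one row of weights per expert, producing one score each.
        self.router = [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(num_experts)]
        # Each expert is a dim x dim linear map (real experts are small MLPs).
        self.experts = [
            [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(dim)]
            for _ in range(num_experts)
        ]

    def forward(self, x):
        scores = matvec(self.router, x)
        # Keep only the top_k highest-scoring experts for this input.
        chosen = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[: self.top_k]
        # Renormalize the gate weights over the chosen experts only.
        gates = softmax([scores[i] for i in chosen])
        out = [0.0] * len(x)
        for gate, i in zip(gates, chosen):
            # Only the chosen experts do any computation for this input.
            y = matvec(self.experts[i], x)
            out = [o + gate * yi for o, yi in zip(out, y)]
        return out, chosen


def weight_memory_gb(num_params, bytes_per_param=2):
    """Memory for the weights alone; the default assumes 16-bit weights."""
    return num_params * bytes_per_param / 1e9


# Figures from the article: 32B total parameters, 3B active per token.
TOTAL_PARAMS = 32e9
ACTIVE_PARAMS = 3e9
active_fraction = ACTIVE_PARAMS / TOTAL_PARAMS  # 3/32, i.e. under 10%
# All experts must stay resident, so weight memory scales with TOTAL_PARAMS.
total_weight_gb = weight_memory_gb(TOTAL_PARAMS)
```

For example, `ToyMoELayer(dim=4, num_experts=8, top_k=2).forward([1.0, 0.5, -0.3, 2.0])` returns a 4-dimensional output along with the indices of the two experts that were used. The other six experts are never evaluated for that input, which is where the compute savings come from.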

Kakao also refined its training process. It introduced a 'mid-training' stage between pre-training and post-training, and adopted a 'replay' technique to mitigate catastrophic forgetting, in which a model loses previously learned knowledge as it learns new information. As a result, the model can acquire new reasoning abilities while retaining its Korean proficiency and general knowledge.
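Replay is commonly implemented by mixing a share of earlier-stage data back into each new training batch, so the model keeps seeing material it has already learned. The sketch below covers only that batching step; the `replay_batches` helper and the replay ratio are hypothetical, and the article does not say how Kakao sets its data mix.

```python
import random


def replay_batches(new_data, old_data, batch_size, replay_ratio, seed=0):
    """Yield shuffled batches in which about `replay_ratio` of the examples
    are replayed from earlier-stage data (hypothetical helper)."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    if not 0.0 <= replay_ratio < 1.0:
        raise ValueError("replay_ratio must be in [0, 1)")
    rng = random.Random(seed)
    # At least one new example per batch; the rest are drawn from old data.
    n_new = max(1, batch_size - round(batch_size * replay_ratio))
    n_old = batch_size - n_new
    new, old = list(new_data), list(old_data)
    if n_old > len(old):
        raise ValueError("not enough old data to replay")
    rng.shuffle(new)
    # Walk through the new data once; a trailing partial batch is dropped.
    for start in range(0, len(new) - n_new + 1, n_new):
        batch = new[start:start + n_new] + rng.sample(old, n_old)
        rng.shuffle(batch)
        yield batch
```

With `batch_size=10` and `replay_ratio=0.3`, each batch holds 7 new examples and 3 replayed ones.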

In total, Kakao has released four new Kanana-2 models on Hugging Face: Base, Instruct, Thinking, and Mid-training. By also releasing the Mid-training model, which is especially useful for researchers exploring the mid-training stage, Kakao has further contributed to the open-source ecosystem.

To realize agentic AI that can carry out practical tasks on behalf of people, Kakao also strengthened the models' tool-calling abilities. Training focused on high-quality multi-tool-calling data to improve instruction following and tool calling, so the models can accurately interpret complex user instructions and autonomously select and call the appropriate tools.
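Tool calling generally means the model emits a structured request naming a tool and its arguments, and the host application executes it. The sketch below assumes a hypothetical JSON format (a list of `{"name": ..., "arguments": {...}}` objects, similar in spirit to common function-calling formats) and hypothetical stub tools; it does not reflect Kanana-2's actual chat template or output format.

```python
import json
from typing import Any, Callable, Dict, List

# Registry mapping tool names to Python callables (hypothetical tools).
TOOLS: Dict[str, Callable[..., Any]] = {}


def tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Register a function as a callable tool under its own name."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def get_weather(city: str) -> str:
    # Stub: a real tool would query a weather service.
    return f"Sunny in {city}"


@tool
def add(a: float, b: float) -> float:
    return a + b


def dispatch(model_output: str) -> List[Any]:
    """Execute every tool call in a JSON list such as
    [{"name": "...", "arguments": {...}}, ...] and return the results in order."""
    calls = json.loads(model_output)
    results = []
    for call in calls:
        name = call["name"]
        if name not in TOOLS:
            raise KeyError(f"unknown tool: {name}")
        results.append(TOOLS[name](**call.get("arguments", {})))
    return results
```

In a multi-turn agent loop, these results would be appended to the conversation and sent back to the model for its next step. A production dispatcher would also validate the arguments against each tool's schema before calling it.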

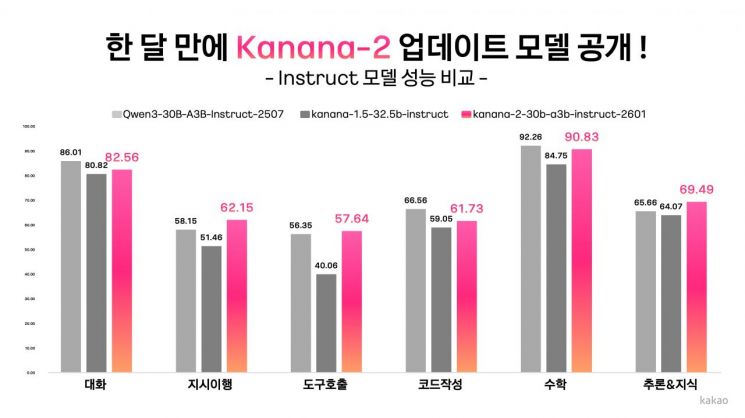

In performance evaluations, the models outperformed Alibaba's competing model 'Qwen3-30B-A3B-Instruct-2507' in instruction-following accuracy, multi-turn tool calling, and Korean language proficiency.

Kim Byunghak, Kanana Performance Leader at Kakao, stated, "The new Kanana-2 is the result of our intense efforts to answer the question, 'How can we implement practical agent AI without expensive infrastructure?'" He added, "By releasing a highly efficient model as open source that performs well even in common infrastructure environments, we hope to offer a new alternative for AI adoption by domestic companies and contribute to the advancement of the AI research and development ecosystem in Korea."

© The Asia Business Daily(www.asiae.co.kr). All rights reserved.