Construction of Korea's Largest AI Computing Cluster

Securing Global-Level Computing Power

Accelerating AI Model Development Speed and Quality

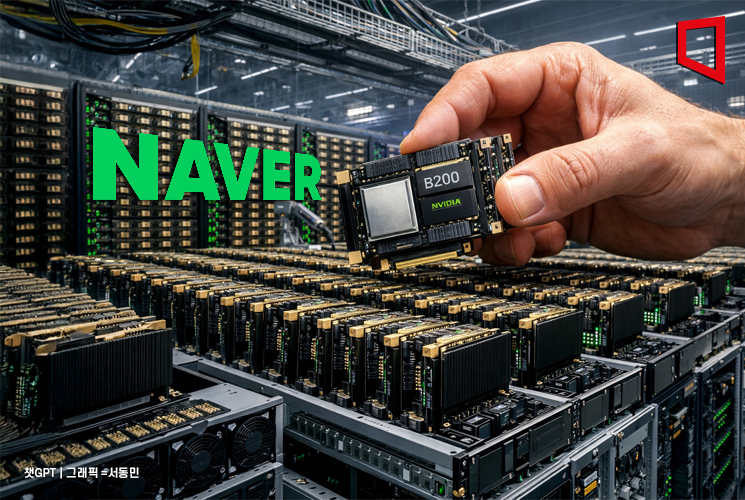

On January 8, Team Naver announced that it has completed construction of the largest AI computing cluster in Korea, built on 4,000 NVIDIA B200 (Blackwell) next-generation GPUs.

With this new infrastructure, Team Naver has secured world-class computing power and laid a core foundation not only for advancing its proprietary foundation models but also for flexibly applying AI technology across its services and industries.

Team Naver has technical expertise in "clustering," which involves connecting large-scale GPU resources into a single system to maximize performance. In 2019, the company was the quickest in the world to bring NVIDIA's supercomputing infrastructure, "SuperPOD," into commercial use, and it has since accumulated hands-on experience designing and operating ultra-high-performance GPU clusters.

The newly built "B200 4K Cluster" incorporates cooling, power, and network optimization technologies drawn from this experience. Designed for large-scale parallel computing and high-speed communication, the cluster is assessed to have a computing scale comparable to that of top-ranked supercomputers on the global TOP500 list.

This infrastructure performance translates directly into faster AI model development. According to the company, internal simulations showed that training a model with 72 billion (72B) parameters, which previously took about 18 months on its main A100-based infrastructure (2,048 units), can now be shortened to approximately 1.5 months on the "B200 4K Cluster." Because these figures come from simulations, actual training times may vary depending on specific tasks and configurations.

With training efficiency improved roughly 12 times, Team Naver explained that it can now improve model quality through more experiments and iterative training, and that it has established a development and operations system that can respond more quickly to a changing technological environment. The ability to repeat large-scale training rapidly has significantly strengthened both the speed and flexibility of AI model development overall.
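The reported figures can be checked with simple arithmetic. The sketch below uses only the numbers quoted in the article (2,048 A100 units at about 18 months, 4,000 B200 units at about 1.5 months). The per-GPU figure is an illustrative back-of-the-envelope estimate that assumes training time scales perfectly linearly with GPU count, which the article does not claim and which real clusters rarely achieve.

```python
# Figures as reported in the article (company's internal simulations).
A100_GPUS = 2048      # size of the earlier A100-based infrastructure
A100_MONTHS = 18.0    # approximate training time for the 72B model
B200_GPUS = 4000      # size of the "B200 4K Cluster"
B200_MONTHS = 1.5     # approximate training time on the new cluster


def speedup(old_months: float, new_months: float) -> float:
    """Ratio of old training time to new training time."""
    return old_months / new_months


overall = speedup(A100_MONTHS, B200_MONTHS)

# How many times more GPUs the new cluster has.
gpu_ratio = B200_GPUS / A100_GPUS

# ASSUMPTION (not from the article): perfectly linear scaling with GPU count.
# Under that assumption, the remaining gain is attributed to each GPU
# (hardware generation, interconnect, software) rather than to cluster size.
per_gpu = overall / gpu_ratio

print(f"overall speedup:      {overall:.1f}x")
print(f"GPU count ratio:      {gpu_ratio:.2f}x")
print(f"implied per-GPU gain: {per_gpu:.2f}x")
```

Under these assumptions, about a twofold share of the improvement would come from the larger GPU count and the rest from faster hardware and the surrounding system, though the real split depends on factors the article does not disclose.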

Team Naver also plans to accelerate the advancement of its proprietary foundation models. The company aims to greatly expand training of its Omni model, which processes text, images, video, and audio simultaneously, to reach world-class performance, and then to apply it gradually across services and industrial settings.

Choi Soo-yeon, CEO of Naver, stated, "Building AI infrastructure goes beyond a simple technology investment; it is significant in that we have secured a key asset that underpins national-level AI competitiveness and AI autonomy and sovereignty." She added, "Based on infrastructure that enables rapid training and repeated experimentation, Team Naver will flexibly apply AI technology to services and industrial sites to create tangible value."

© The Asia Business Daily (www.asiae.co.kr). All rights reserved.