Sola Open Surpasses DeepSeek in Trilingual Benchmarks

Korean Language Performance Doubles That of DeepSeek

Upstage's self-developed large language model (LLM), 'Sola Open 100B' (Sola Open), has demonstrated superior performance compared to global models such as China's DeepSeek. Sola Open is the result of Upstage's participation in the government-led independent artificial intelligence (AI) foundation model project.

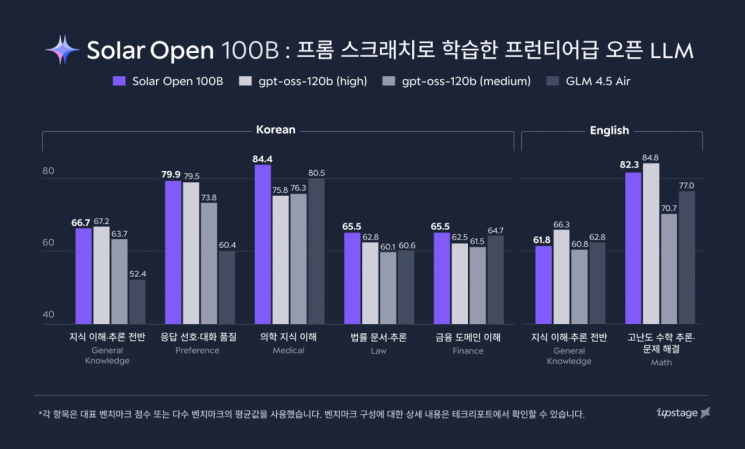

According to Upstage on January 6, Sola Open, a model with 100 billion (100B) parameters, outperformed 'R1' (DeepSeek R1-0528-671B), the AI model from China's DeepSeek, in major multilingual benchmark evaluations, scoring 110% of R1's results in Korean, 103% in English, and 106% in Japanese. Sola Open is only about 15% the size of DeepSeek R1.
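The percentages above read most naturally as Sola Open's score expressed as a share of DeepSeek R1's score, and the size comparison follows from the two parameter counts. A minimal sketch of that arithmetic, assuming this interpretation; the function name and the raw benchmark scores in the last example are hypothetical and are not taken from Upstage's report:

```python
def relative_score(model_score: float, baseline_score: float) -> float:
    """Return model_score as a percentage of baseline_score."""
    if baseline_score <= 0:
        raise ValueError("baseline_score must be positive")
    return 100.0 * model_score / baseline_score


# Parameter counts from the article, in billions.
SOLA_OPEN_PARAMS_B = 100
DEEPSEEK_R1_PARAMS_B = 671

size_ratio = relative_score(SOLA_OPEN_PARAMS_B, DEEPSEEK_R1_PARAMS_B)
print(f"Sola Open size relative to DeepSeek R1: {size_ratio:.1f}%")

# Hypothetical raw scores, chosen only to illustrate a "110%" result.
example = relative_score(66.0, 60.0)
print(f"Illustrative relative score: {example:.0f}%")
```

The size ratio comes out just under 15%, which matches the rounded figure reported above.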

Upstage has fully open-sourced its self-developed large language model (LLM) 'Sola Open 100B'. Provided by Upstage

In particular, its Korean language capabilities far exceeded those of global models. In key Korean benchmarks such as Korean cultural understanding (Hae-Rae v1.1) and Korean knowledge (CLIcK), it delivered more than twice the performance of DeepSeek R1. Even against 'GPT-OSS-120B-Medium' (GPT-OSS), an open-source model of similar scale from OpenAI, it came out ahead, scoring at the 100% level relative to GPT-OSS.

In advanced knowledge areas such as mathematics, complex instruction following, and agent tasks, Sola Open achieved performance on par with DeepSeek R1. In overall knowledge and coding ability, it produced results similar to GPT-OSS.

Upstage explained that these achievements were made possible by a high-quality pre-training dataset of approximately 20 trillion tokens. To address the shortage of Korean language data, Upstage utilized synthetic data as well as domain-specific datasets in fields such as finance, law, and medicine. Portions of this dataset will be released in the future through the 'AI Hub' of the National Information Society Agency (NIA).

Sola Open improves efficiency through a 'Mixture-of-Experts' (MoE) architecture that combines 129 expert models and activates only 12 billion parameters during actual computation. In addition, GPU optimization raised tokens-per-second (TPS) throughput by about 80%, and Upstage's in-house reinforcement learning (RL) framework, 'SnapPO,' cut training time by 50%. As a result, Upstage saved approximately 12 billion won (about 9 million USD) in GPU infrastructure costs.
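In an MoE model, a router scores every expert for each token and only the highest-scoring few are run, which is why far fewer parameters are active than the model holds in total. The following is a minimal sketch of generic top-k routing, not Upstage's implementation; the expert count of 129 comes from the article, while `TOP_K`, the random router scores, and the function name are assumptions made for illustration:

```python
import math
import random


def top_k_route(router_logits, k):
    """Pick the k highest-scoring experts and softmax-normalize their weights."""
    top = sorted(range(len(router_logits)),
                 key=lambda i: router_logits[i],
                 reverse=True)[:k]
    # Subtract the max logit before exponentiating for numerical stability.
    peak = max(router_logits[i] for i in top)
    exps = [math.exp(router_logits[i] - peak) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]


NUM_EXPERTS = 129  # from the article
TOP_K = 8          # hypothetical value, for illustration only

rng = random.Random(0)
# Stand-in router scores for one token; a real router computes these
# from the token's hidden state.
logits = [rng.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]

routes = top_k_route(logits, TOP_K)
for expert_id, weight in routes:
    print(f"expert {expert_id:3d} weight {weight:.3f}")
print(f"experts active per token: {TOP_K}/{NUM_EXPERTS}")
```

The token's output would then be the weighted sum of the selected experts' outputs, so compute per token scales with the number of selected experts instead of the full expert count.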

Upstage developed Sola Open entirely 'from scratch,' independently handling the entire process from data construction to model training. The company has released the model on the global AI open-source platform Hugging Face, along with a technical report detailing the development process and technical specifics.

Kim Sunghoon, CEO of Upstage, stated, "Sola Open is a model that Upstage trained independently from the ground up. It is the most Korean yet global AI, deeply understanding Korean sentiment and linguistic context. The release of Sola Open will be a significant turning point in ushering in the era of Korea-style frontier AI."

© The Asia Business Daily(www.asiae.co.kr). All rights reserved.