"Solar Open Is a 'From-Scratch' Model"

"Strong Response to False Allegations"

Upstage, a participant in the government-led 'independent artificial intelligence (AI) foundation model' project, held a public verification meeting and firmly denied allegations that its proprietary AI model, 'Solar-Open-100B (Solar Open),' had copied a large language model (LLM) developed by a Chinese company.

Kim Seonghoon, CEO of Upstage, explained during the verification meeting held at the company's headquarters in Gangnam-gu, Seoul, on the afternoon of January 2, "The claim that Solar Open is a product of copying and fine-tuning a Chinese model is not true."

Kim Seonghoon, CEO of Upstage, is presenting at the verification meeting held on the afternoon of the 2nd at the company building in Gangnam-gu, Seoul. Photo by Upstage

Kim emphasized, "We trained Solar Open from scratch using the graphics processing units (GPUs) secured through the government-supported project." Training from scratch means building an AI model entirely from the ground up, starting from randomly initialized weights rather than from another model's pretrained weights.

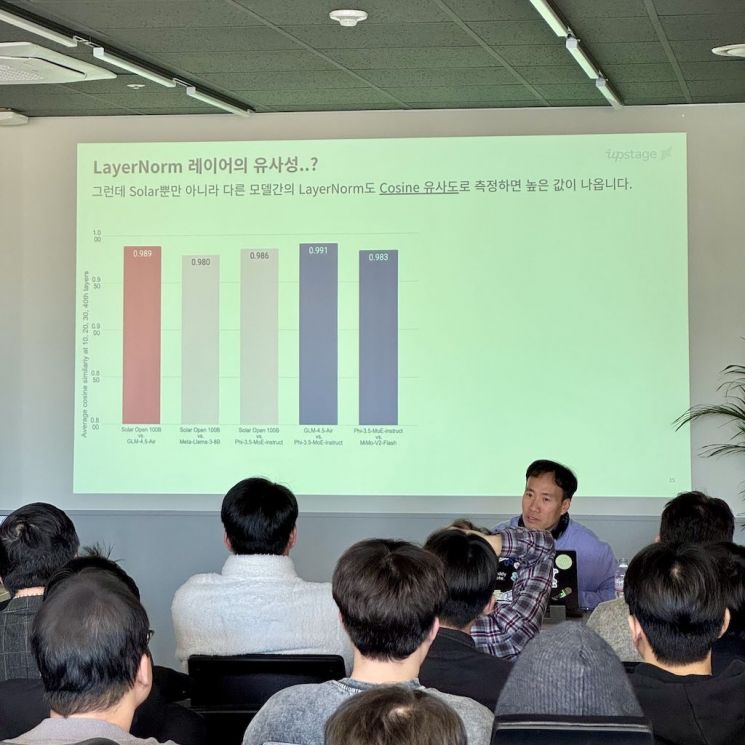

First, Kim dismissed as a statistical illusion the claim that Solar Open reused another model's weights, an allegation based on similarities in its 'LayerNorm' layers. He pointed out that the LayerNorm portion showing the similarity accounts for only about 0.0004% of the entire model. LayerNorm (layer normalization) is an operation that computes the mean and variance of the features within a given layer and uses these statistics to normalize (standardize) the activation values, after which learned scale and shift parameters are applied.
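To make the definition concrete, here is a minimal pure-Python sketch of the standard LayerNorm computation applied to one token's feature vector, followed by the arithmetic behind the 0.0004% figure. The function name, the toy vector, and the assumption that the percentage is a share of Solar Open's roughly 100 billion parameters are illustrative only; this is not Upstage's analysis code.

```python
import math

def layer_norm(x, gamma, beta, eps=1e-5):
    """Normalize one feature vector, then apply a learned scale (gamma) and shift (beta)."""
    n = len(x)
    mean = sum(x) / n
    var = sum((v - mean) ** 2 for v in x) / n  # population variance over the features
    inv_std = 1.0 / math.sqrt(var + eps)
    return [g * (v - mean) * inv_std + b for v, g, b in zip(x, gamma, beta)]

# Toy activations for a single token with 4 features (hypothetical values)
x = [2.0, 4.0, 6.0, 8.0]
gamma = [1.0] * 4  # scale weights, conventionally initialized to 1
beta = [0.0] * 4   # shift weights, conventionally initialized to 0
print([round(v, 3) for v in layer_norm(x, gamma, beta)])  # prints [-1.342, -0.447, 0.447, 1.342]

# Assumption: 0.0004% is measured against roughly 100 billion total parameters
total_params = 100_000_000_000
share_percent = 0.0004
print(round(total_params * share_percent / 100))  # prints 400000
```

Under that assumption, the disputed portion amounts to roughly 400,000 parameters out of about 100 billion.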

He also stressed that the 'cosine similarity' used to judge LayerNorm similarity is not a reliable basis for comparison. Cosine similarity is a simple metric that compares only the direction of two vectors, and because the LayerNorm layers of language models share similar structures and characteristics, high similarity scores between different models are to be expected. Kim remarked, "It's like opening two different English dictionaries and pointing out that their contents are similar."
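The "two dictionaries" point can be illustrated numerically. The sketch below builds two vectors that are independent apart from a shared constant offset, loosely mimicking LayerNorm scale weights that start at 1 and drift only slightly during training. That resemblance is an assumption for illustration, not measured data from either model, and the vector size, offset, and noise level are hypothetical choices.

```python
import math
import random

def cosine_similarity(a, b):
    """Cosine of the angle between a and b: compares direction only, ignores magnitude."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def fake_norm_weights(rng, size=4096, offset=1.0, noise=0.05):
    """Hypothetical scale weights: a common offset of 1.0 plus independent Gaussian noise."""
    return [offset + rng.gauss(0.0, noise) for _ in range(size)]

# Two "models" whose weights share nothing except the common offset
model_a = fake_norm_weights(random.Random(1))
model_b = fake_norm_weights(random.Random(2))
print(round(cosine_similarity(model_a, model_b), 4))  # close to 1 despite independent noise
```

Because both vectors point almost entirely along the shared offset direction, the score lands near 1 even though the remaining variation in each vector is unrelated.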

Upstage further explained that a reanalysis using the 'Pearson correlation coefficient,' which normalizes the values by subtracting each vector's mean and dividing by its standard deviation so that each model's own characteristics are reflected, showed that the weight patterns of Solar Open and the other models do not match.
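Pearson correlation is equivalent to cosine similarity computed after subtracting each vector's mean, so a shared offset no longer inflates the score. The sketch below contrasts the two metrics on hypothetical vectors built from a common offset plus independent noise, and on a hypothetical "reused" vector made by slightly perturbing one of them. All values are illustrative assumptions, not data from Solar Open or GLM-4.5-Air.

```python
import math
import random

def cosine_similarity(a, b):
    """Direction-only similarity of two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def pearson(a, b):
    """Pearson r: cosine similarity of the mean-centered vectors."""
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    return cosine_similarity([x - mean_a for x in a], [y - mean_b for y in b])

rng = random.Random(0)
size, offset, noise = 4096, 1.0, 0.05  # hypothetical values
independent_a = [offset + rng.gauss(0.0, noise) for _ in range(size)]
independent_b = [offset + rng.gauss(0.0, noise) for _ in range(size)]
# Hypothetical reused weights: model A's values plus a tiny perturbation
reused_a = [w + rng.gauss(0.0, 0.005) for w in independent_a]

print("cosine, independent:", round(cosine_similarity(independent_a, independent_b), 3))
print("pearson, independent:", round(pearson(independent_a, independent_b), 3))
print("pearson, reused:", round(pearson(independent_a, reused_a), 3))
```

In this toy setup, cosine similarity is high for both pairs, while Pearson stays near 0 for the independent pair and near 1 only for the perturbed copy, which is the kind of distinction a centered metric is meant to expose.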

He also rejected claims that Solar Open's architecture and code closely resemble those of a particular model. He noted that major open-source LLM developers do not release their training code to the public, so it would be impossible to build a model by reusing training code that is not accessible in the first place.

Kim stated, "We welcome healthy discussion and the exchange of opinions, but spreading such false information as established fact seriously undermines the efforts of Upstage and the government in their push to become a global AI powerhouse." He added, "Upstage will continue to demonstrate world-class technological capabilities through transparent technology disclosure and will work to expand the domestic AI ecosystem."

Allegations that Upstage's AI model was copied surfaced the previous day. Ko Seokhyeon, CEO of Sionic AI, wrote on his social media account that "it is highly regrettable that a model suspected of being a copied and fine-tuned version of a Chinese model was submitted to a project funded by taxpayers' money," claiming that Solar Open was derived from 'GLM-4.5-Air,' developed by the Chinese company Zhipu AI.

The report Ko posted compared the weights of Solar Open and GLM-4.5-Air, measuring parameter similarity between the two models and claiming to have found decisive similarities in certain layers. The report has since been deleted.

In a subsequent post, Ko stated, "I have confirmed that the token embeddings of the two models being compared have virtually the same distribution," but added, "This is because GLM-4.5-Air and Solar Open have almost identical model structures and training code, which would naturally result in similar distributions." He further claimed, "It appears to be a fact that Solar Open used most of the GLM-4.5-Air model's training code as is. Such an approach is not uncommon in AI research, of course, but in such cases it is customary to disclose the source from the beginning."

Previously, on December 30, Upstage unveiled Solar Open at the first presentation of the independent AI foundation model project. The model has 100 billion (100B) parameters and is specialized for high-performance inference. Of the five elite teams in the project, Upstage led the only consortium composed solely of startups.

© The Asia Business Daily(www.asiae.co.kr). All rights reserved.