AI Usage Must Be 'Checked' When Posting Videos or Photos

Law Revision Planned for Early Next Year... Bipartisan Lawmakers Propose Bill

Inter-Agency Cooperation for Swift Blocking... Tougher Penalties for Violations

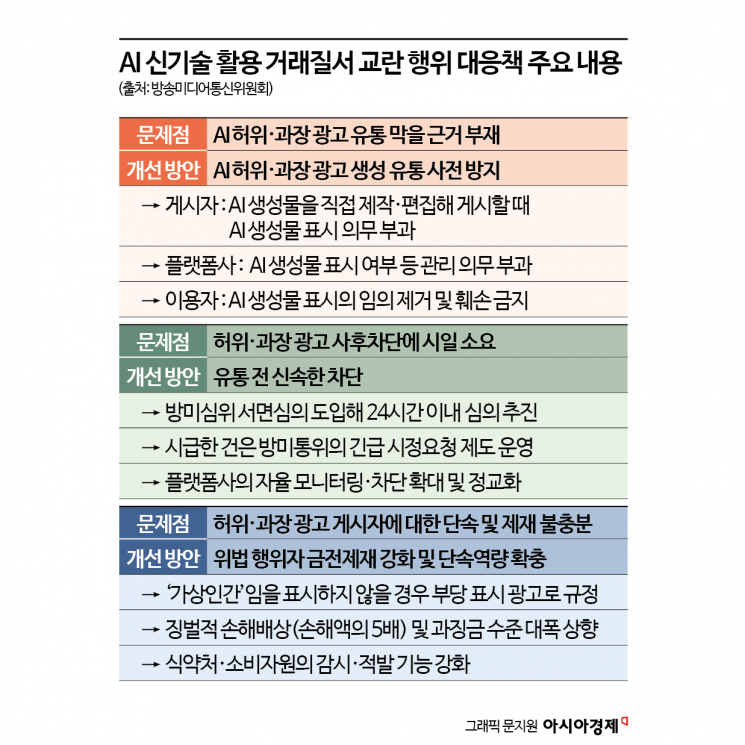

The government will introduce an "AI-generated content labeling system" to prevent harm caused by false or exaggerated advertisements that use generative artificial intelligence (AI). The plan is to revise the Information and Communications Network Act in the first quarter of next year so that anyone posting content must indicate whether it is AI-generated when uploading it. Online platform operators will be responsible for informing users of the labeling method and obligation. For malicious distribution of false or manipulated information, a punitive damages system will be introduced, allowing compensation of up to five times the actual damages, and fines will be raised significantly.

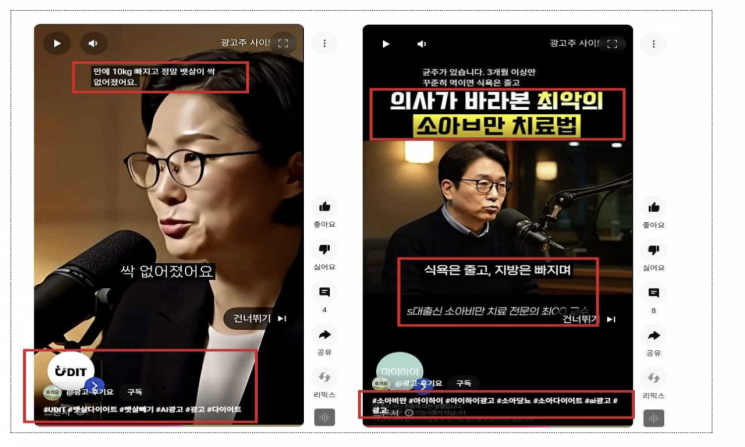

Examples of Unfair Advertising Using AI-Generated Experts (Source: Broadcasting and Media Communications Commission)

Obligations Imposed on Content Publishers, Platform Operators, and Users

On December 10, Prime Minister Kim Minseok presided over the 7th National Policy Coordination Meeting at the Government Complex Seoul, stating that false or exaggerated advertisements using AI constitute "a serious criminal act that disrupts normal market order and deceives consumers, causing harm." He added, "We will significantly increase fines and introduce a punitive damages system." He continued, "We will introduce a mandatory labeling system for AI-generated content and expedite the review process necessary to correct false advertising."

The government announced these measures because, as AI technology becomes more sophisticated, advertisements featuring fake AI doctors and experts, which are difficult to distinguish from real ones, are spreading rapidly through social media. Such ads are particularly concentrated in the food and pharmaceutical sectors, raising concerns about confusion and harm to consumers, especially seniors.

The AI-generated content labeling system announced by the government imposes obligations on content publishers, platform operators, and users alike. The AI Basic Act, which takes effect in January next year, imposes labeling obligations only on businesses that generate AI content, such as OpenAI and Anthropic. The new system is intended to supplement the Act by extending the obligation to everyone who publishes such content.

Once the AI-generated content labeling system is implemented, anyone posting AI-generated content will be required to indicate that their photos, videos, or other content were created using AI. An official from the Broadcasting and Media Communications Commission explained, "It will likely take the form of a 'checkbox' that asks, when uploading content, 'Is this AI-generated content?'" Platform operators will be responsible for informing publishers of the labeling method and obligation. Platform users will be prohibited from removing or tampering with the AI-generated content label.

The bill containing the mandatory AI-generated content labeling system was sponsored by Assemblyman Jeon Yonggi of the Democratic Party, and Assemblymen Kim Sanghoon and Park Junghoon of the People Power Party. An official from the Broadcasting and Media Communications Commission stated, "We are aiming to revise the Information and Communications Network Act in the first half of next year," adding, "Whether to regulate only advertising-related AI-generated content or to expand to all AI-generated content to address deepfake issues will depend on discussions in the National Assembly."

Swift Blocking of False Advertising... Introduction of Punitive Damages

The government will strengthen inter-agency cooperation so that AI-based false or exaggerated advertisements can be blocked promptly once they begin circulating. If a platform operator fails to comply with a correction request from the Korea Communications Standards Commission, the Broadcasting and Media Communications Commission will issue a corrective order, and continued non-compliance will be subject to criminal penalties. The fast-track system, which currently applies only to narcotics, will be expanded to cover food, pharmaceuticals, and cosmetics in cooperation with the Ministry of Food and Drug Safety. Under the fast-track system, the Ministry can submit review requests electronically to the Korea Communications Standards Commission. By introducing electronic written reviews, the government aims to complete reviews within 24 hours.

Where there is a risk of harm to citizens' lives or property, a system will be established allowing the Broadcasting and Media Communications Commission to make emergency correction requests to platform operators through the relevant authorities. This will become possible once the Information and Communications Network Act is revised in the second half of next year.

Self-regulation within the platform industry will also be strengthened. Based on voluntary agreements with major online platforms such as Naver, Meta (Instagram), and TikTok, the government plans to expand the adoption of technologies that rapidly detect and block impersonation advertisements using AI deepfakes, and to expand inspections of how these measures are operating.

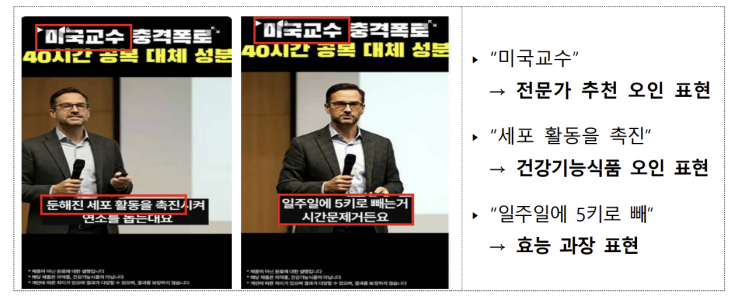

Examples of Unfair Advertising Using AI-Generated Experts (Source: Broadcasting and Media Communications Commission)

The Fair Trade Commission will clarify the criteria for judging whether advertisements that do not disclose AI generation are illegal. Advertisements in which an AI-created virtual doctor or professor recommends a product without disclosing that they are virtual will be explicitly defined as unfair labeling and advertising. The relevant laws will be revised to prohibit AI expert endorsement advertisements in the food, pharmaceutical, and cosmetics sectors altogether. In addition, endorsement advertisements that do not disclose the virtual nature of the endorser will be subject to the same sanctions as existing false or exaggerated advertisements. The law will also be amended so that an influencer with significant public influence who intentionally distributes false or manipulated information can be held liable for punitive damages of up to five times the actual damages. Under the current Labeling and Advertising Act, fines of up to 2% of sales related to the illegal advertising can be imposed, and the government plans to raise this ceiling significantly.

Major jurisdictions such as the European Union (EU), the United States, China, and Spain are also pursuing mandatory labeling of AI-generated content. However, to minimize public confusion, these rules are limited to cases involving criminal intent or elections. There are also calls for exceptions in certain specialized fields, such as creative content and the arts, to respect freedom of expression.

An official from the Korea Internet Corporations Association stated, "In the legislative process, platform operators should not be held responsible for users damaging or altering AI-generated content labels," adding, "There should also be parallel measures such as exempting creative content that is clearly recognizable as AI-generated from regulation."

© The Asia Business Daily(www.asiae.co.kr). All rights reserved.