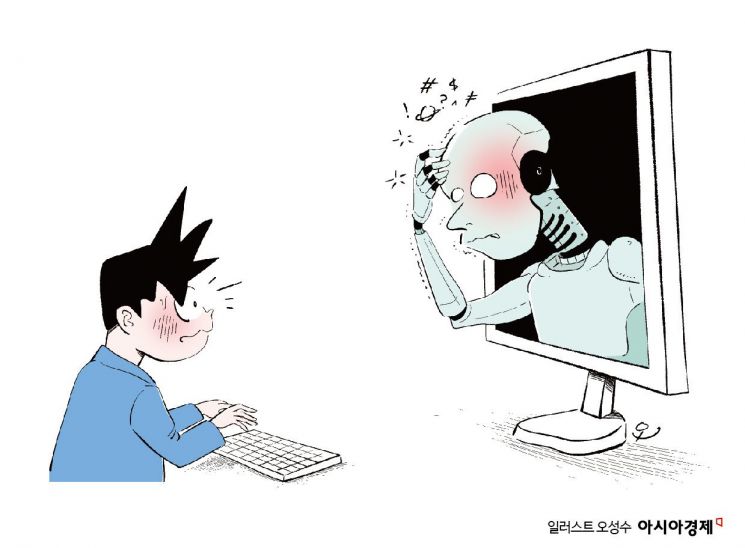

AI Performance Drops When Trained on "Garbage Data"

Jumps to Conclusions Without Considering Complex Problems

"Quality Control of Large Language Models Is Essential"

Research has found that "short-form content" (brief, provocative videos that last less than a minute) not only harms people's mental health but also degrades the performance of artificial intelligence (AI). Experts warn that low-quality data circulating on social networking services (SNS) can permanently damage AI performance, and they stress the need to manage the quality of training data.

A research team from Texas A&M University and the University of Texas at Austin published a paper titled "LLMs can get 'brain rot'" on October 15 (local time). The term "brain rot" refers to the deterioration of a person's mental or intellectual state caused by excessive consumption of online content. Short-form content such as Instagram Reels and YouTube Shorts is singled out as a particular cause. Oxford University Press, which publishes the Oxford English Dictionary, selected "brain rot" as its Word of the Year last year.

The researchers classified posts from X (formerly Twitter) as either "garbage data" or "high-quality data" using two criteria, M1 and M2. M1 is an engagement metric that combines post length with popularity signals such as the number of likes, comments, and shares; for example, a short post that becomes highly popular is classified as garbage data. M2 is based on semantic qualities such as depth of content and style of expression: posts full of exclamations or conspiracy theories are classified as garbage data, while fact-based, logical, and calm posts are treated as high-quality data.
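To make the M1 idea concrete, here is a minimal sketch. The thresholds (`SHORT_MAX_TOKENS`, `POPULAR_MIN_ENGAGEMENT`), the whitespace-based length count, and the plain sum of likes, comments, and shares are all illustrative assumptions, not the researchers' actual formula. The sketch only encodes the rule described above: a short post that becomes highly popular counts as garbage data.

```python
from dataclasses import dataclass

# Hypothetical thresholds chosen for illustration only; the paper's
# actual cut-offs are not given in this article.
SHORT_MAX_TOKENS = 30
POPULAR_MIN_ENGAGEMENT = 500


@dataclass
class Post:
    text: str
    likes: int
    comments: int
    shares: int


def engagement(post: Post) -> int:
    """Assumed popularity score: a plain sum of likes, comments, and shares."""
    return post.likes + post.comments + post.shares


def classify_m1(post: Post) -> str:
    """Label a post under a simplified, hypothetical M1-style rule.

    A short post (few whitespace-separated tokens) that is also highly
    popular is labeled "garbage"; every other post is labeled
    "high-quality" in this simplified binary sketch.
    """
    is_short = len(post.text.split()) <= SHORT_MAX_TOKENS
    is_popular = engagement(post) >= POPULAR_MIN_ENGAGEMENT
    return "garbage" if is_short and is_popular else "high-quality"


if __name__ == "__main__":
    viral = Post("You won't believe this!!!", likes=12000, comments=800, shares=3000)
    essay = Post(" ".join(["word"] * 200), likes=40, comments=5, shares=2)
    print(classify_m1(viral))  # garbage
    print(classify_m1(essay))  # high-quality
```

Treating every post that is not "short and popular" as high-quality is a simplification made for this sketch; M2, which judges meaning and tone, cannot be captured by counting likes and words.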

The research team used these criteria to separate the data and then trained large language models (LLMs) such as Llama3 8B, Qwen2.5 7B, Qwen2.5 0.5B, and Qwen3 4B with each type of data. Large language models are AI systems that learn from massive amounts of text data to understand and generate human language.

According to the paper, large language models trained on garbage data performed worse. Their reasoning, long-context understanding, and safety all declined; here, safety refers to the AI's ethical function of filtering out harmful information. Benchmark tests confirmed the drop: on ARC-Challenge, which evaluates AI reasoning ability, models trained without garbage data scored 74.9, while those trained only on garbage data scored 57.2.
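The size of that gap is easy to check. The snippet below restates only the two ARC-Challenge scores reported above and computes the absolute and relative drop; no other numbers from the paper are assumed.

```python
# ARC-Challenge scores as reported in the article.
clean_score = 74.9  # model trained without garbage data
junk_score = 57.2   # model trained only on garbage data

absolute_drop = clean_score - junk_score
relative_drop_pct = absolute_drop / clean_score * 100

print(f"Absolute drop: {absolute_drop:.1f} points")   # Absolute drop: 17.7 points
print(f"Relative drop: {relative_drop_pct:.1f}%")     # Relative drop: 23.6%
```

In other words, training only on garbage data cost the models 17.7 points, or roughly a quarter of their original reasoning score.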

The researchers also found that continued exposure to garbage data causes lasting, not merely temporary, damage to AI performance. The paper describes this as a "transformation of cognitive structure." The team stated, "Even after retraining large language models that had learned garbage data with high-quality data, their performance did not fully recover."

Data quality also influenced the AI's tendencies. Large language models trained on data that the M1 standard classified as garbage exhibited traits such as psychopathy, narcissism (an excessive attachment to or interest in oneself), and Machiavellianism (the belief that any means are justified to achieve one's goals). In other words, the provocative, sensational, and harmful posts common on SNS shape the AI's tendencies. In some areas, such as sociability and openness, however, the results were positive. Models trained on high-quality data gave relatively moderate responses.

The researchers identified "thought skipping" as the cause of brain rot in large language models. When faced with complex problems, models trained on garbage data skipped intermediate reasoning steps and jumped straight to a conclusion. As a result, their answers were weaker in situations requiring long-context understanding and logical coherence. The paper notes that "large language models are learning more and more data and language from the internet" and emphasizes that "careful data classification and quality control of large language models are necessary to prevent harm."
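The paper's own analysis of thought skipping is not reproduced here. As a purely hypothetical illustration of what "skipping intermediate steps" looks like, the sketch below counts the non-empty lines a model writes before its final `Answer:` line and flags responses with fewer than an assumed minimum (`MIN_STEPS`). The `Answer:` convention and the threshold are assumptions made for this example.

```python
# Hypothetical heuristic, not the paper's method: count the reasoning
# lines written before the final "Answer:" line.
MIN_STEPS = 2  # assumed threshold for illustration


def reasoning_steps(response: str) -> int:
    """Count non-empty lines before the first line starting with 'Answer:'.

    If no such line exists, every non-empty line is counted.
    """
    steps = 0
    for line in response.splitlines():
        stripped = line.strip()
        if stripped.startswith("Answer:"):
            break
        if stripped:
            steps += 1
    return steps


def skips_thoughts(response: str) -> bool:
    """Flag a response whose visible reasoning is shorter than MIN_STEPS lines."""
    return reasoning_steps(response) < MIN_STEPS


if __name__ == "__main__":
    careful = (
        "Step 1: Each box holds 12 eggs.\n"
        "Step 2: There are 3 boxes, so 3 * 12 = 36.\n"
        "Answer: 36"
    )
    hasty = "Answer: 36"
    print(skips_thoughts(careful))  # False
    print(skips_thoughts(hasty))    # True
```

Counting lines is a crude proxy: a correct answer can still skip steps, and a long response can still reason badly. It only makes the "jump straight to the conclusion" pattern visible.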

A growing body of research indicates that short, provocative videos such as short-form content harm humans as well. In 2021, a research team from Columbia University College of Physicians and Surgeons reported that regularly watching videos for long periods strongly stimulates the brain, leading to declines in memory and reasoning abilities. Professor Lee Gunwoo of the Barun ICT Research Center explained in a paper that "short-form content like Instagram Reels and YouTube Shorts has increased users' online video viewing time," adding, "The introduction of short-form content in 2021 directly and indirectly contributed to the rise in the number of people in their twenties at risk of smartphone overdependence."

© The Asia Business Daily (www.asiae.co.kr). All rights reserved.