Model Parameters Expanded from 22 Billion to 31 Billion

"Best Korean Language Performance Among Compact Language Models"

Upstage announced on May 20 that it will release a preview version of its next-generation large language model (LLM), "Solar Pro 2."

Solar Pro 2 is the successor to "Solar Pro," which was launched in December 2024. According to Upstage, the number of model parameters has increased from 22 billion to 31 billion, resulting in improved performance.

Ahead of the official launch in July, Upstage has made the preview API (application programming interface) of Solar Pro 2 available for free for initial testing purposes.

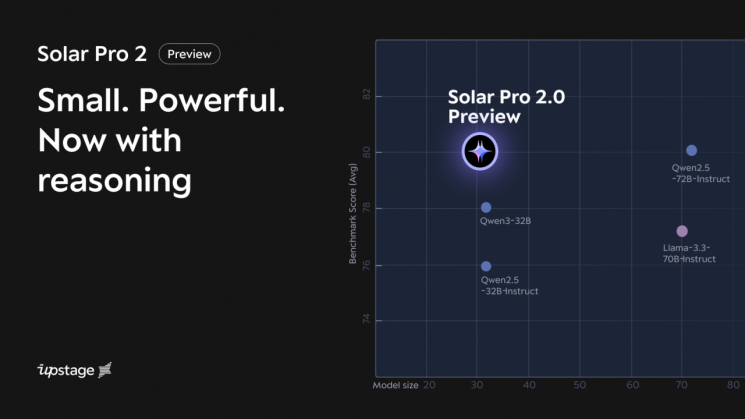

Upstage stated that Solar Pro 2 outperformed compact language models (sLLM) from global big tech companies. On the average score across major LLM benchmarks such as "MMLU (Massive Multitask Language Understanding)" and "IFEval (Instruction Following Evaluation)," Solar Pro 2 scored higher than Meta's "Llama 4 Scout" and "Llama 3.3 70B," as well as Alibaba's "Qwen 2.5 72B."

Solar Pro 2 also achieved high scores in leading benchmarks for Korean language performance, including "KMMLU" and "HAE-RAE," ranking highest among publicly available compact language models, according to Upstage. The company explained that the use of high-quality Korean data for training played a key role in this achievement.

Additionally, Solar Pro 2 is the first Upstage LLM to feature a "hybrid mode." Users can choose between "chat mode," optimized for fast responses, and "reasoning mode," which generates structured answers through step-by-step reasoning. The reasoning mode incorporates the Chain of Thought (CoT) technique, enabling precise answers to complex problems in areas such as mathematics and coding.

Usability has also been enhanced. The model now supports up to 64,000 tokens, twice as many as before, allowing it to process longer documents or conversations at once. Improvements to the in-house tokenizer have made it possible to reduce tokens by up to 30% in Korean and document-based tasks. As a result, both response speed and cost efficiency have been improved.
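
To put these figures in perspective, the short Python sketch below works through the arithmetic. It is only an illustration: the 64,000-token window, the doubling, and the "up to 30%" reduction come from the announcement, while the function names are hypothetical and the 30% figure is treated as a best case, not a typical result.

```python
# Illustrative arithmetic only. The figures are those quoted in the article;
# the helper names are hypothetical and not part of any Upstage API.
CONTEXT_TOKENS = 64_000                        # context window stated for Solar Pro 2
PREVIOUS_CONTEXT_TOKENS = CONTEXT_TOKENS // 2  # "twice as many as before"
MAX_REDUCTION_PCT = 30                         # "up to 30%" fewer tokens (best case)


def tokens_after_reduction(old_tokens: int, reduction_pct: int = MAX_REDUCTION_PCT) -> int:
    """Tokens needed for the same text if the tokenizer emits reduction_pct% fewer tokens."""
    return old_tokens * (100 - reduction_pct) // 100


def equivalent_old_tokens(window: int = CONTEXT_TOKENS, reduction_pct: int = MAX_REDUCTION_PCT) -> int:
    """Largest input, measured in old-tokenizer tokens, that fits in the window in the best case."""
    return window * 100 // (100 - reduction_pct)


if __name__ == "__main__":
    print(PREVIOUS_CONTEXT_TOKENS)         # previous window implied by the doubling
    print(tokens_after_reduction(10_000))  # a 10,000-token text under the best-case reduction
    print(equivalent_old_tokens())         # old-tokenizer text size that could fit in 64,000 tokens
```

Under the best-case assumption, a text that previously needed 10,000 tokens would need 7,000, which is where the speed and cost gains would come from if billing and latency scale with token count.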

Kim Sunghoon, CEO of Upstage, said, "Solar Pro 2 delivers performance on par with 70B models, despite its efficient 31B scale, setting a new standard for compact yet powerful language models. In particular, we expect this model, which combines top-tier reasoning capabilities with outstanding Korean language performance, to drive even greater innovation in the workplace."

© The Asia Business Daily(www.asiae.co.kr). All rights reserved.
