Google Cloud Next 2025

Reduced Response Time and Computational Cost

New AI Chip 'Ironwood' Also Unveiled

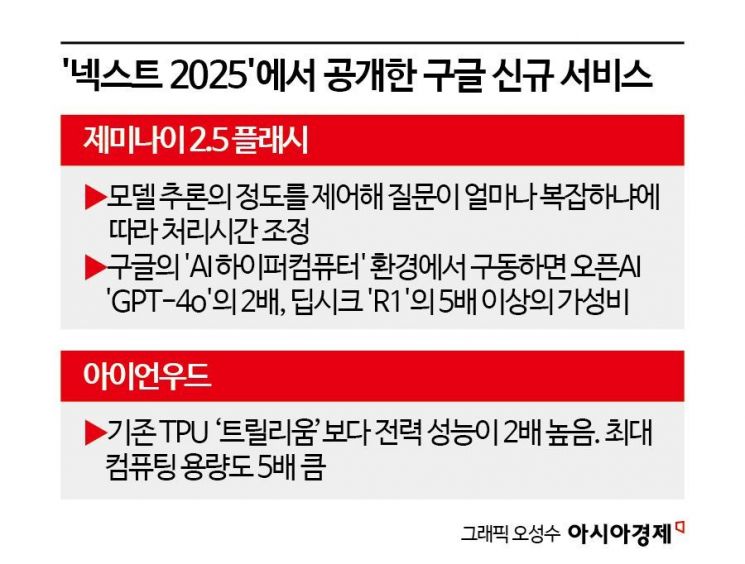

Google unveiled a new AI model, 'Gemini 2.5 Flash,' which it says delivers more cost-efficient performance than AI models from OpenAI and DeepSeek. The second model in the Gemini 2.5 series, Gemini 2.5 Flash offers lower response latency and lower computing costs than 'Gemini 2.5 Pro,' which Google released last month.

On the 9th (local time), at the 'Google Cloud Next 2025' event held at the Mandalay Bay Convention Center in Las Vegas, USA, Google CEO Sundar Pichai said, "Gemini 2.5 Flash lets you control how much the model reasons, so you can balance budget and performance." The feature automatically adjusts processing time according to the complexity of the user's question, so simple requests get quick answers at a lower cost.

Thomas Kurian, CEO of Google Cloud, added, "Running Gemini 2.5 Flash on Google's 'AI Hypercomputer' will deliver more than twice the cost-effectiveness of OpenAI's 'GPT-4o' and more than five times that of DeepSeek's 'R1.'" Gemini 2.5 Flash is currently available in preview for AI developers and researchers on Google's 'Vertex AI' platform and in the Gemini app.

Google's 7th-generation Tensor Processing Unit (TPU) 'Ironwood,' scheduled for release later this year, offers twice the power efficiency and up to five times the peak computing capacity of the 6th generation. (Photo: Google Cloud)

On the same day, Google also unveiled a new AI chip called 'Ironwood.' Ironwood is Google's 7th-generation Tensor Processing Unit (TPU), a class of chips built for data analysis and deep learning, and it is the first specialized for inference. It is designed to scale across a range of AI workloads, such as chatbots, code generation, and media content generation. Google developed Ironwood in part to reduce its dependence on NVIDIA's AI accelerators. As demand in the AI market shifts rapidly toward inference, Google aims to dominate the market with its cost-effective in-house chip. Ironwood will be available to Google Cloud customers by the end of this year.

Ironwood's power efficiency and capacity are significantly improved over previous generations. Each pod (a cluster of TPUs) contains over 9,000 chips and delivers 42.5 exaflops (EFLOPS) of computing power. Compared with Google's 6th-generation TPU 'Trillium,' unveiled last year, Ironwood offers twice the power efficiency and five times the peak computing capacity. Each chip carries 192GB of high-bandwidth memory (HBM), which reduces the need for frequent data transfers.

At the event, Google also named Samsung as a partner in its AI and cloud business. Google plans to bring its generative AI models to Samsung's home AI robot 'Ballie,' which launches in the first half of this year.

© The Asia Business Daily(www.asiae.co.kr). All rights reserved.