'2nd Seoul National University AI Semiconductor Forum' Held on the 3rd

Samsung Electronics and SK Hynix are competing over next-generation memory technology for the era of large language models (LLMs). Both companies are focusing on combining processing-in-memory (PIM) technology with low-power double data rate (LPDDR) memory to address performance and energy-efficiency issues in on-device AI and data centers.

Samsung Electronics Accelerates Technological Innovation with LPDDR-PIM

On the 3rd, Samsung Electronics Master Son Gyo-min gives a lecture on the theme "AI and Memory" at the "2nd Seoul National University AI Semiconductor Forum," held at Seoul National University's Gwanak Campus in Gwanak-gu, Seoul. Photo by Choi Seo-yoon


On June 3, Son Gyo-min, a master at Samsung Electronics, emphasized the advantages of LPDDR-PIM technology at the '2nd Seoul National University AI Semiconductor Forum (SAISF),' hosted by the SNU Graduate School of AI Semiconductor at Seoul National University's Gwanak Campus in Gwanak-gu, Seoul. "LPDDR-PIM is more energy-efficient than standalone LPDDR," he said.

He added, "PIM based on LPDDR is an innovative technology that surpasses the limitations of existing memory and can be widely used not only in smartphones and laptops but also in edge servers."

Son also revealed that Samsung Electronics is developing a prototype of LPDDR6, its next-generation DRAM. With it, the company plans to significantly improve both performance and energy efficiency in AI services and data centers.

Explaining how Samsung's approach differs from SK Hynix's, he said, "Samsung Electronics is designing a structure that maximizes PIM performance while maintaining compatibility with existing DRAM interfaces," adding, "We are enabling customers to adopt LPDDR-PIM technology without major changes to their existing systems."

He emphasized, "PIM technology focuses on reducing data movement while maximizing internal memory bandwidth to enhance performance," and added, "LPDDR6 will be the main memory for next-generation smart devices and data centers."

SK Hynix Innovates LLM Processing with AiMX

On the 3rd, Im Eui-cheol, Vice President in charge of Solution AT at SK Hynix, gave a lecture on the topic "PIM-based LLM Computing Solutions from Data Centers to Edge Devices" at the "2nd Seoul National University AI Semiconductor Forum" held at Seoul National University Gwanak Campus in Gwanak-gu, Seoul. Photo by Choi Seo-yoon


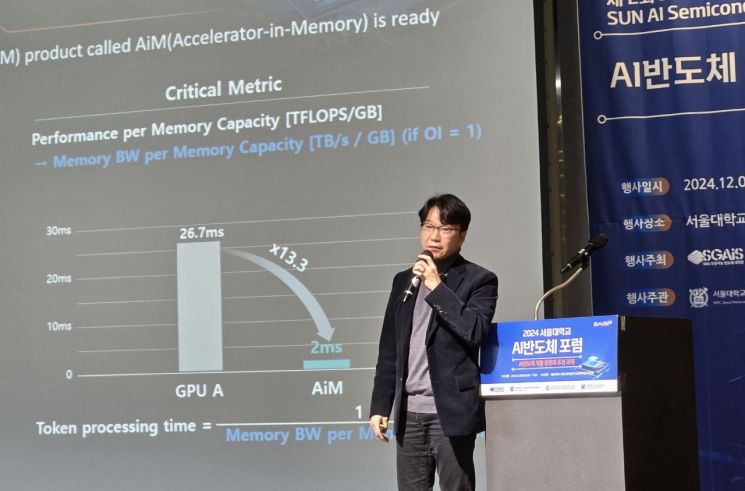

SK Hynix has advanced its AiM (Accelerator in Memory) technology, based on GDDR6 graphics DRAM, and introduced an accelerator card called 'AiMX' (prototype name) with the aim of building a next-generation AI ecosystem. AiMX applies PIM technology to GDDR6 and speeds up central processing units (CPUs) or graphics processing units (GPUs).

Im Eui-cheol, Vice President in charge of Solution AT at SK Hynix, said, "LPDDR is the optimal choice for achieving both energy savings and performance gains in data centers and on-device AI," adding, "LPDDR-PIM is a versatile solution that addresses the high power consumption of data centers while supporting high-performance processing in on-device AI."

Vice President Im explained that SK Hynix is expanding its AiM product lineup, currently based on GDDR6, with a focus on LPDDR. He stated, "We developed the accelerator card 'AiMX,' specialized for LLM processing, using GDDR6-AiM," and "AiMX meets the core demands of data centers with high bandwidth and energy efficiency, and we plan to expand the AI ecosystem by collaborating with various global companies."

SK Hynix explained that AiMX has resolved the memory bottleneck that occurs during the generation stage of LLMs (the stage in which the AI performs computations to generate outputs from given inputs) and has significantly reduced the volume of data transferred. Vice President Im said, "We are focusing on developing technology that provides optimal performance and efficiency in both on-device AI and data centers."

© The Asia Business Daily(www.asiae.co.kr). All rights reserved.
