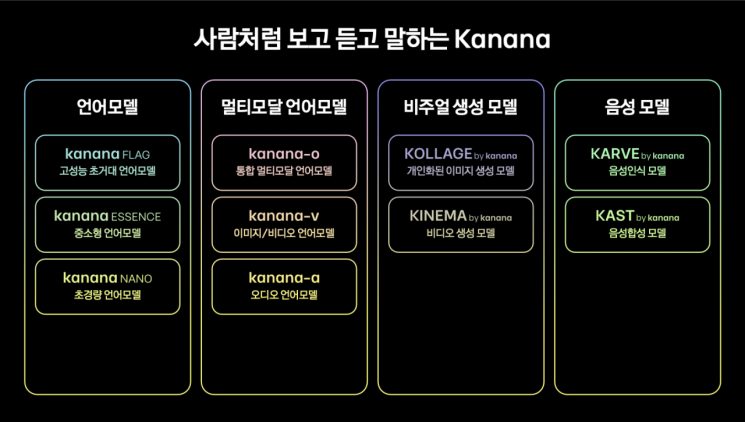

Kakao Releases In-House Developed ‘Kanana’ Model Family

"Providing User Value with Highly Efficient Models"

Introducing Multimodal, Image and Video Generation Models

Real-Time AI Conversation by Pointing a Camera at Objects

Kakao, which had been somewhat behind in the artificial intelligence (AI) technology race, has unveiled 10 AI models developed in-house. The lineup includes large language models (LLMs) of various sizes as well as image and video generation models. Rather than pursuing overwhelming model size and performance, the company emphasized a strategy of applying models optimized for Kakao services to enhance cost efficiency and user satisfaction.

Kakao announced the plan on the 23rd, the second day of its developer event ‘if kakao 2024,’ held at the AI Campus in Yongin, Gyeonggi Province.

Kakao applied its new AI brand name, ‘Kanana,’ to its in-house developed AI models. The Kanana family consists of 10 models in total: ▲3 LLMs ▲3 multimodal language models ▲2 image and video generation models ▲2 speech models. Multimodal models are those that can understand multiple types of data, such as text and images.

The LLMs are categorized by size and performance into ▲ultra-light ‘Kanana Nano’ ▲optimized ‘Kanana Essence’ ▲flagship ‘Kanana Flag’. The core model, Kanana Essence, emphasizes high performance and cost efficiency. It is said to be comparable in performance to global models of similar size and demonstrates a clear advantage in Korean language processing.

The defining feature of these models is ‘service optimization.’ Kim Byung-hak, Kanana Alpha Performance Leader, who gave the keynote speech that day, said, "LLMs must have good performance, cost efficiency, and problem-solving capabilities for services," adding, "We are preparing to commercialize AI services based on the Kanana models that can provide direct assistance to users."

Kim Byung-hak, Performance Leader at Kanana Alpha, delivers the keynote speech on the 23rd, the second day of the developer event ‘if kakao 2024,’ at the AI Campus in Yongin, Gyeonggi Province. [Photo by Kakao]

The Kanana models will be used in various AI services, including ‘Kanana,’ the conversational, hyper-personalized AI assistant that Kakao unveiled the day before. Depending on the service, however, Kakao plans to combine the Kanana models with external ones, including open-source models. The approach is to select or combine the models that perform best in each area, such as reasoning, comprehension, and mathematics, or to choose the cheaper option among models with similar performance. On the previous day, Jeong Sin-ah, Kakao’s CEO, emphasized, "We aim to maximize the user experience with the most practical solutions, without falling behind in a model race that has become a capital contest worth tens of trillions."
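The selection rule described above (take the best performer for each task area, but prefer the cheaper model when performance is similar) can be sketched roughly as follows. This is only an illustration, not Kakao's implementation: the model names, scores, costs, the `pick_model` helper, and the `tolerance` threshold are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ModelProfile:
    name: str                  # hypothetical model identifier
    cost_per_1k_tokens: float  # hypothetical cost, arbitrary units
    scores: dict               # task area -> score in [0, 1], made-up values


def pick_model(models, task, tolerance=0.02):
    """Return the cheapest model whose score on `task` is within
    `tolerance` of the best available score (an assumed rule)."""
    candidates = [m for m in models if task in m.scores]
    if not candidates:
        raise ValueError(f"no model has a score for task {task!r}")
    best = max(m.scores[task] for m in candidates)
    # Treat models within `tolerance` of the best score as "similar".
    near_best = [m for m in candidates if best - m.scores[task] <= tolerance]
    return min(near_best, key=lambda m: m.cost_per_1k_tokens)


# Illustrative data only: one cheaper in-house model, one costlier external one.
MODELS = [
    ModelProfile("model_a", 1.0,
                 {"reasoning": 0.80, "comprehension": 0.85, "math": 0.70}),
    ModelProfile("model_b", 3.0,
                 {"reasoning": 0.81, "comprehension": 0.84, "math": 0.82}),
]

for task in ("reasoning", "comprehension", "math"):
    print(task, "->", pick_model(MODELS, task).name)
# reasoning -> model_a      (similar scores, so the cheaper model wins)
# comprehension -> model_a
# math -> model_b           (clearly better, despite the higher cost)
```

In this sketch the tolerance controls the trade-off: a larger value favors cost savings, while a value of zero always picks the top scorer.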

Kakao also introduced the multimodal language model ‘Kanana-o.’ Unlike earlier modular designs that combined separate models for text recognition, speech recognition, and other tasks, Kanana-o processes multiple data types in an integrated way. Because it quickly understands various forms of data, it responds in an average of 1.6 seconds regardless of the question. Given this fast response time, Kakao plans to develop it into a form in which users can point their phone cameras at objects of interest and converse with the AI in real time.

The two models capable of generating images and videos are being developed to handle various inputs ranging from text to images and personal profile photos. Beyond generating videos based on input images, they are said to enable the creation of rich video content, such as controlling character movements with simple mouse operations.

© The Asia Business Daily(www.asiae.co.kr). All rights reserved.