Participating with the slogan 'AiM Accelerating AI Performance'

Showcasing technology and vision for the AI era

SK Hynix's intelligent semiconductor specialized for LLM

SK Hynix recently showcased technologies for the AI era, including the accelerator card AiMX, at the 'Artificial Intelligence (AI) Hardware & Edge AI Summit 2024 (AI Hardware Summit)' held in San Jose, California, USA.

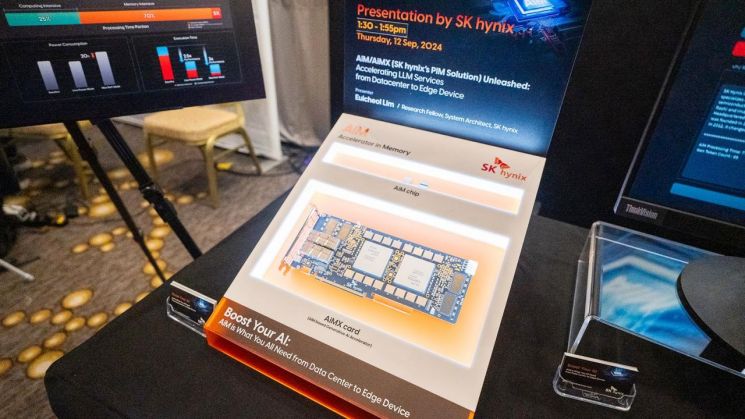

SK Hynix's AiM chip and AiMX card on display at the 'AI Hardware & Edge AI Summit 2024' held in San Jose, California. Photo by SK Hynix [Image source=Yonhap News]

According to SK Hynix Newsroom on the 19th, SK Hynix participated in the event under the slogan "AiM accelerating AI performance from data centers to edge devices," presenting the AiM solution and future vision.

AiM is the name of SK Hynix's intelligent semiconductor product based on processing-in-memory (PIM). The newly introduced AiMX is SK Hynix's accelerator card specialized for large language models (LLMs), built on the GDDR6-AiM chip.

SK Hynix explained that AiM performs some computations inside the memory itself, giving it higher bandwidth and better energy efficiency than conventional memory and making it more economical to deliver the high computing performance that LLM-based generative AI requires. At its exhibition booth, the company demonstrated AiMX's performance using Meta's latest LLM, 'Llama 3 70B.'

The newly unveiled AiMX has 32GB (gigabytes) of memory, double the 16GB of the previous version. It also attracted significant attention for its multi-batch processing, a technique that handles multiple groups of data simultaneously.

The AI Hardware Summit is an event where global IT companies and promising startups share their latest technologies and research results and seek industry collaboration opportunities through networking.

At this event, SK Hynix unveiled an AiM solution applicable not only to data centers but also to edge devices (on-device AI). SK Hynix stated, "The strength of this solution lies in its ability to enhance performance without changing the memory interface of existing edge devices," adding, "Replacing mobile DRAM with SK Hynix's AiM is expected to improve LLM speed by up to three times, along with energy efficiency, compared to existing solutions."

Im Euicheol, Vice President of SK Hynix, emphasized in his session presentation that AiM technology is a core solution for LLM services and shared plans to develop AiM products for mobile DRAM-based on-device AI.

SK Hynix stated, "We plan to continue building the next-generation AI accelerator ecosystem in collaboration with global companies through various AiM solutions and strengthen our leadership in the on-device AI market."

© The Asia Business Daily(www.asiae.co.kr). All rights reserved.