Image Recognition Feature Available from 27th

Some Metrics Surpass GPT-4o

Lagging in Multimodal AI Speed Race

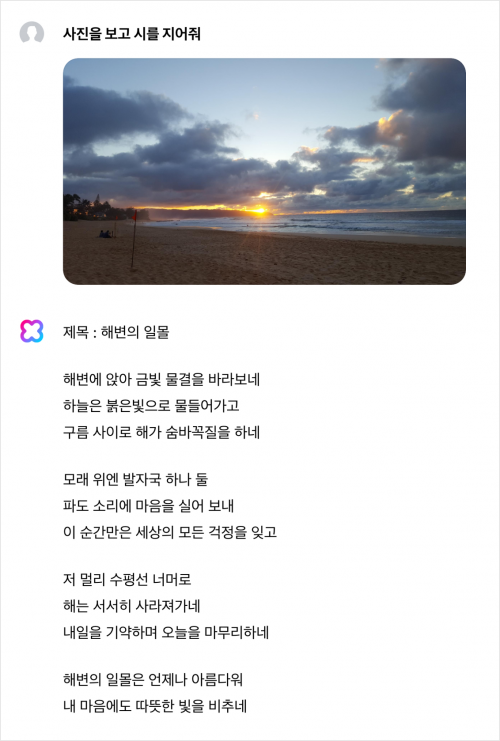

"Sitting on the beach, gazing at the golden waves / The sky is dyed red / The sun plays hide-and-seek among the clouds."

This is part of a poem composed by Naver's large language model (LLM) 'HyperCLOVA X' after seeing a photo of a beach sunset. HyperCLOVA X adds image recognition capabilities beyond text. While big tech companies have long integrated image and voice recognition functions into their AI models, Naver has belatedly entered the multimodal competition. Multimodal models understand and generate multiple types of data such as text, images, and voice.

On the 22nd, Naver announced that it will equip its conversational AI service 'CLOVA X' with 'HyperCLOVA X Vision,' which can view images and respond accordingly. General users will be able to use this feature through an update on the 27th. In the future, it will also be offered to corporate clients via the B2B AI solution platform 'CLOVA Studio.'

HyperCLOVA X Vision can perform tasks such as detailed image captioning, inference to predict the next situation after viewing a photo, analysis of charts and graphs, and solving math problems involving formulas or shapes. It can also engage in creative tasks like writing poems or creating memes (short viral internet content) based on photos.

This capability is the result of training on a large volume of text and images. In particular, it has been trained extensively on data related to Korean culture, resulting in a high level of understanding of Korean documents and text within related images. When Naver had HyperCLOVA X Vision solve past exam questions from the Korean elementary, middle, and high school equivalency tests input as images, it achieved an accuracy rate of 83.8%. This not only surpassed the passing threshold of 60% but also scored higher than OpenAI's latest model GPT-4o (77.8%). However, the overall evaluation results and average scores based on about 30 indicators were not disclosed.

With HyperCLOVA X gaining vision capabilities, it is expected to handle a variety of specialized tasks. Naver stated, "In the near term, it can automate the processing of documents and images, and further, independent agents like robots using HyperCLOVA X as their brain will be able to utilize visual information to accomplish goals."

However, it is considered a step behind compared to big tech. OpenAI introduced multimodal AI last September by integrating the image generation AI 'DALL·E 3' into ChatGPT. Seemingly in response, Google introduced its AI model 'Gemini' as a multimodal model from its initial release in December last year. Anthropic, an AI startup regarded as a rival to OpenAI, also unveiled its first multimodal model through 'Claude 3' in March this year. They have accelerated sophistication as well. GPT-4o provides real-time voice responses to voice questions and recorded videos. Google recently incorporated this feature into smartphones, enabling complex commands to be executed across applications on Android phones.

Naver is developing 'SpeechX,' a voice synthesis technology based on HyperCLOVA X, which will enable voice-based Q&A functionality. The release date has not been set.

© The Asia Business Daily(www.asiae.co.kr). All rights reserved.