Providing Customized Services Through Emotion Recognition

What if there were a technology that could read a person's emotions by observing their facial expressions in real time?

A technology capable of recognizing human emotions in real time has been developed. It is expected to be applied in various ways, such as next-generation wearable systems that provide services based on emotions.

UNIST (President Yong-Hoon Lee) announced on the 29th that Professor Ji-Yoon Kim's team from the Department of Materials Science and Engineering has developed the world's first "wearable human emotion recognition technology" capable of real-time emotion recognition.

Emotions are difficult to define from a single type of data and require the integration and analysis of several. In this study, the team's system simultaneously detects facial muscle deformation and voice, transmits this "multimodal" data wirelessly, and converts it into emotional information using artificial intelligence. Because it is a wearable device, it can analyze emotions in any environment.

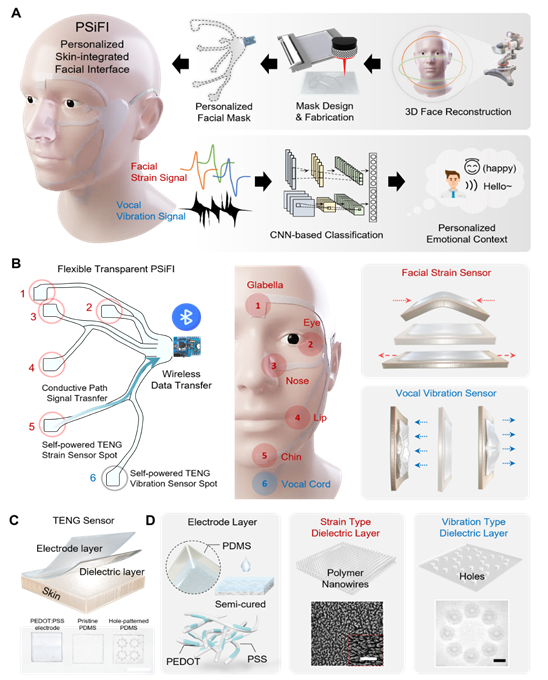

Conceptual diagram of a multimodal information-based personalized emotion recognition wireless facial interface.

According to the research team, the system is based on the "triboelectric effect," in which two materials that are brought into frictional contact and then separated acquire opposite charges, one positive and one negative. It is also self-powered, so no additional external power source or complex measuring equipment is needed to acquire data.

Professor Ji-Yoon Kim explained, "Based on this technology, we developed a personalized skin-integrated facial interface (PSiFI) system that can provide personalized services to individuals."

The research team used a semi-curing technique that keeps the material in a soft solid state. With this technique, they produced a highly transparent conductor and used it for the electrodes of the triboelectric device. They also created personalized masks using multi-angle imaging techniques. The resulting system combines flexibility, stretchability, and transparency.

Additionally, the team systematized the simultaneous detection of facial muscle deformation and vocal cord vibrations, integrating this information to enable real-time emotion recognition. The obtained information can be used in virtual reality "digital concierge" services that provide customized services based on the user's emotions.

Dr. Jin-Pyo Lee, the first author and postdoctoral researcher, said, "This technology enables real-time emotion recognition with just a few training sessions without complex measuring equipment," adding, "It shows potential for application in portable emotion recognition devices and next-generation emotion-based digital platform service components."

The research team conducted experiments on "real-time emotion recognition" using the developed system. They not only collected multimodal data such as facial muscle deformation and voice but also applied "transfer learning" to make use of the collected data.
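
As a rough illustration of the idea described above, the following Python sketch fuses two feature vectors (one for facial strain, one for voice) and adapts a generic model to a new wearer from a handful of labeled samples. Everything in it is an assumption made for illustration: the function names, the feature values, and the blending weight are hypothetical, and the nearest-centroid classifier is a simple stand-in for transfer learning, not the team's actual model.

```python
def fuse(strain, vocal):
    """Early fusion: concatenate the two modality feature vectors."""
    return list(strain) + list(vocal)

def centroids(samples):
    """Map each label to the mean of its feature vectors."""
    by_label = {}
    for vec, label in samples:
        by_label.setdefault(label, []).append(vec)
    return {
        label: [sum(col) / len(col) for col in zip(*vecs)]
        for label, vecs in by_label.items()
    }

def adapt(base, few_shot, weight=0.5):
    """Blend generic centroids with centroids from a new user's few samples."""
    user = centroids(few_shot)
    adapted = dict(base)
    for label, u in user.items():
        b = base.get(label, u)
        adapted[label] = [(1 - weight) * bi + weight * ui for bi, ui in zip(b, u)]
    return adapted

def predict(model, vec):
    """Return the label whose centroid is closest to vec."""
    def sqdist(center):
        return sum((a - b) ** 2 for a, b in zip(vec, center))
    return min(model, key=lambda label: sqdist(model[label]))

# Hypothetical "generic" training data: (facial strain features, vocal feature).
generic = [
    (fuse([0.9, 0.1], [0.8]), "happy"),
    (fuse([0.8, 0.2], [0.7]), "happy"),
    (fuse([0.1, 0.9], [0.2]), "sad"),
    (fuse([0.2, 0.8], [0.3]), "sad"),
]
base_model = centroids(generic)

# A new wearer with more muted expressions supplies one sample per emotion.
few_shot = [
    (fuse([0.5, 0.6], [0.5]), "happy"),
    (fuse([0.1, 1.0], [0.1]), "sad"),
]
personal_model = adapt(base_model, few_shot)

# A later "happy" reading from the same wearer.
query = fuse([0.48, 0.58], [0.5])
before = predict(base_model, query)
after = predict(personal_model, query)
print(before, after)
```

With this toy data, the generic model mislabels the new wearer's reading, while the model adapted from just two samples labels it correctly, which is the kind of benefit a few personalized training sessions are meant to provide.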

The developed system demonstrated high emotion recognition accuracy with just a few training sessions. It is personalized and wireless, ensuring wearability and convenience.

The team also applied the system in VR environments as a "digital concierge," simulating scenarios such as smart homes, personal cinemas, and smart offices. These tests confirmed that the system can support personalized services that recommend music, movies, books, and more based on the individual's emotions in each situation.

Professor Ji-Yoon Kim of the Department of Materials Science and Engineering said, "For humans and machines to interact at a high level, HMI devices must also be able to collect various types of data and handle complex and integrated information."

Professor Kim added, "This research will serve as an example showing that even highly complex forms of information such as human emotions can be utilized through next-generation wearable systems."

This research was conducted in collaboration with Professor Lee Pooi See from the Department of Materials Science and Engineering at Nanyang Technological University, Singapore. It was published online on January 15 in the internationally renowned journal Nature Communications. The research was supported by the Ministry of Science and ICT, the National Research Foundation of Korea (NRF), and the Korea Institute of Materials Science (KIMS).

© The Asia Business Daily(www.asiae.co.kr). All rights reserved.
