Native AI Era of Big Corporations and Startups

"Find the Optimal Model"... Diversifying Sizes and Sharing Data

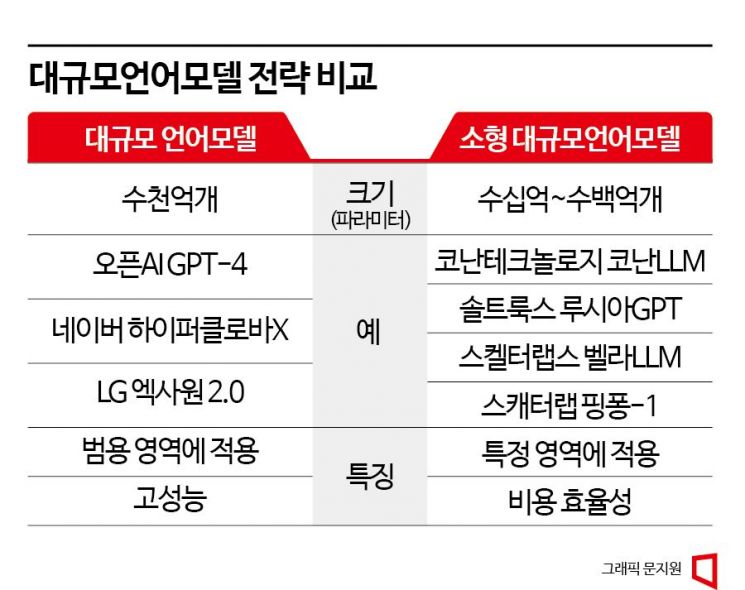

From domestic big tech companies to startups, South Korean IT companies are consecutively unveiling large language models (LLMs) developed in-house. As the AI era enters a period of intense competition, strategies have diversified. While leading companies have focused on scaling up AI, latecomers are targeting niches with small but efficient AI.

Domestic Startups Developing Small but Efficient AI

AI software (SW) company Konan Technology unveiled its self-developed LLM, 'Konan LLM,' on the 17th. The parameter sizes vary by model, with 13.1 billion and 41 billion parameters. These are smaller than OpenAI's GPT 3.5 (175 billion), Naver HyperCLOVA (204 billion), and LG ExaOne 2.0 (300 billion).

Parameters play the role of learning and memorizing information. The larger the size, the better the performance. Typically, LLMs have hundreds of billions of parameters, while small LLMs have tens to hundreds of billions. However, a larger number does not necessarily mean better. Larger parameters require vast computing resources such as GPUs and electricity, resulting in higher costs. Therefore, companies are seeking parameter sizes that offer cost efficiency. Do Won-cheol, Executive Director at Konan Technology who led the LLM development, said, "Increasing parameters doubles operating costs," adding, "We need to find the optimal parameter size considering customers' operating expenses."

More companies, especially startups, are developing small LLMs. Saltlux plans to release a small LLM called 'LuciaGPT' in September. Based on this, they aim to provide AI services optimized for specific fields such as finance, law, and patents. Skelter Labs will introduce a lightweight 'Bella LLM (tentative name)' in the second half of this year. Scatter Lab, known for the AI chatbot 'Iruda,' is developing a small LLM called 'PingPong-1.' While Iruda 2.0 showcased natural conversation capabilities in a small LLM, PingPong-1 is characterized by logical interaction capabilities.

Improving Performance by Increasing Training Data... Data Sharing Collaborations

Instead of reducing model size, the strategy is to improve performance by increasing the training data volume. Konan Technology trained Konan LLM with 270 times more Korean language data than Meta's LLaMA 2. They utilized their own data collection and analysis platform 'PulseK,' which has been operating since 2007. The platform has secured 20.5 billion documents from cafes, blogs, Twitter, Instagram, and more. They also added news data purchased under formal contracts with the Korea Press Foundation since 2011. To ensure quality data, short Twitter posts or news comments were excluded.

Upstage has even initiated data sharing collaborations to develop its own LLM. They launched the '1T Club' to gather partners willing to share data. '1T' stands for 1 trillion tokens, equivalent to the data volume of 200 million books. Except for some portals like Naver and Kakao, it is difficult to independently possess data at the trillion-token scale. This is why Upstage is collecting data collaboratively. Partner companies sharing data can use Upstage's developed LLM at a lower cost or share profits generated from the LLM business.

Large corporations are also diversifying model sizes. NCSoft recently unveiled the language model 'VARCO LLM' with parameter sizes of 1.3 billion, 6.4 billion, and 13 billion. These are mainly small to medium-sized AI models that individuals and companies can easily utilize. They plan to release a 52 billion parameter model in November and a 100 billion parameter model in March next year. Kakao is also testing various sizes such as 6 billion, 13 billion, 25 billion, and 65 billion parameters ahead of unveiling its next-generation model by the end of the year. Kakao CEO Hong Eun-taek explained, "The game is not about who builds the largest model first, but who creates a cost-effective, appropriately sized model and applies it to services." Stanford University Professor Andrew Ng also predicted, "Rather than a single model dominating, optimized models tailored to diverse data and use cases will share the market."

© The Asia Business Daily(www.asiae.co.kr). All rights reserved.

!["The Woman Who Threw Herself into the Water Clutching a Stolen Dior Bag"...A Grotesque Success Story That Shakes the Korean Psyche [Slate]](https://cwcontent.asiae.co.kr/asiaresize/183/2026021902243444107_1771435474.jpg)