③ Preparing Before the 'Bonanza' AI Market Opens

Expansion of Server CPU Market... Seizing Opportunities

Samsung Electronics and SK Hynix are accelerating development of next-generation memory semiconductors for the CPUs (central processing units) in data center servers and for artificial intelligence (AI) services such as ChatGPT. Generative AI such as OpenAI's ChatGPT has become hugely popular, and Intel, the world's number one CPU company, has unveiled new server processors, so the AI and CPU markets are growing explosively. AI and CPU makers are seeking the high-efficiency memory semiconductors that Samsung Electronics and SK Hynix produce in order to raise the performance of their own products.

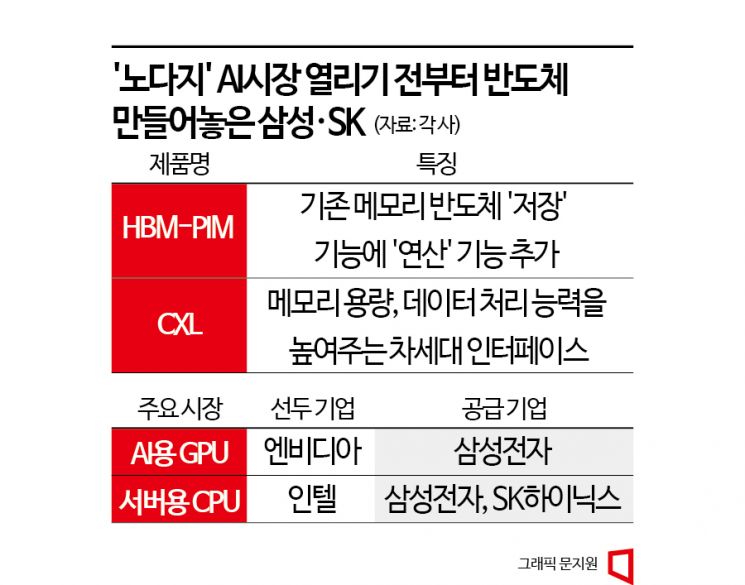

Samsung Electronics and SK Hynix supply next-generation memory semiconductors to world-leading companies such as Intel, Nvidia, and AMD, the leaders in CPUs and GPUs (graphics processing units). The two companies have developed products that significantly enhance computing capability (HBM-PIM), low-power semiconductors that raise data processing speeds (DDR5 DRAM), and an interface that expands memory capacity and data processing capability (CXL).

News about CPU product development for data center servers emerged early last year. Intel, which holds a 90% market share in the server CPU market, announced in the first half of last year that it would release the 4th generation model of its new server CPU brand, the Xeon Scalable Processor (code-named "Sapphire Rapids"). This CPU supports the new DDR5 DRAM standard. When Sapphire Rapids was unveiled in January, expectations grew that Samsung Electronics and SK Hynix would mass-produce DDR5 DRAM semiconductors.

Intel has said it plans to produce four successors to Sapphire Rapids by 2025. "Granite Rapids," expected to be unveiled next year, will be the first server CPU to support the DDR5-based MCR DIMM 8800. MCR DIMM 8800 is about 80% faster than existing DDR5 products, which process data at 4.8 Gb per second. At the end of last year, SK Hynix became the first in the world to unveil a DDR5 MCR DIMM sample.
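The speed comparison can be checked with a few lines of arithmetic. The sketch below assumes that the "8800" in the product name denotes a transfer rate of about 8.8 Gb per second per pin, which the article does not state explicitly; the 4.8 Gb per second baseline is the figure quoted above. The helper name `percent_faster` is illustrative only.

```python
# Rough check of the MCR DIMM speed-up quoted in the article.
# Assumption: "8800" means roughly 8.8 Gb/s per pin (not stated in the article).
BASELINE_GBPS = 4.8   # existing DDR5 figure quoted in the article
MCR_DIMM_GBPS = 8.8   # assumed from the "8800" in the product name


def percent_faster(new_rate: float, old_rate: float) -> float:
    """Return how much faster new_rate is than old_rate, in percent."""
    return (new_rate / old_rate - 1.0) * 100.0


speedup = percent_faster(MCR_DIMM_GBPS, BASELINE_GBPS)
print(f"{speedup:.1f}% faster")
```

Under that assumption the result comes out slightly above 80%, which is consistent with the rounded figure in the text.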

The semiconductor industry expects demand for DRAM used in data center servers to surge. At SK Hynix's regular shareholders' meeting on the 29th of last month, President Kwak No-jeong said, "Demand for HBM (high bandwidth memory) semiconductors used in servers and for next-generation standard products such as DDR5 DRAM is extremely 'tight'." "Tight" here means that demand exceeds supply, so the company can mass-produce without worrying about unsold inventory.

The generative AI market is expanding alongside the server CPU market. On the same day that Micron announced an operating loss of $2.3 billion (approximately 3.01 trillion KRW) for the second quarter of fiscal year 2023 (December last year through February this year), its stock price surged by 7.2%, thanks to AI. Sanjay Mehrotra, CEO of Micron, said during the earnings announcement, "AI servers contain eight times the DRAM and up to three times the NAND flash compared to general servers," adding, "AI will be a continuous driving force for data center demand growth."

HBM memory semiconductors, used primarily in GPUs for AI data processing, are products that Samsung Electronics and SK Hynix excel at manufacturing. At the shareholders' meeting, SK Hynix Vice Chairman Park Jung-ho revealed that the supply price of HBM semiconductors is just under $200 (approximately 260,000 KRW). That is about 108 times the price of general-purpose PC DRAM ($1.81).
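The price comparison can be reproduced as a quick calculation. This sketch uses only the PC DRAM price and the 108x multiple quoted above; the exchange rate of 1,300 KRW per USD is an assumption inferred from the article's own conversion of $200 to roughly 260,000 KRW, and the variable names are illustrative.

```python
# Back-of-the-envelope check of the HBM price comparison in the article.
PC_DRAM_USD = 1.81    # general-purpose PC DRAM price quoted in the article
MULTIPLE = 108        # HBM-to-PC-DRAM price multiple quoted in the article
KRW_PER_USD = 1300    # assumption: implied by "$200 is about 260,000 KRW"

hbm_usd = round(PC_DRAM_USD * MULTIPLE, 2)   # implied HBM supply price in USD
hbm_krw = round(hbm_usd * KRW_PER_USD)       # same price converted to KRW

print(f"Implied HBM price: ${hbm_usd:.2f} (about {hbm_krw:,} KRW)")
```

The implied price lands a little below $200, which matches the "under $200" wording.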

SK Hynix supplies HBM-PIM products to Nvidia, the world's number one GPU company, while Samsung Electronics supplies them to second-ranked AMD. Since June last year, SK Hynix has been supplying its HBM3 products for Nvidia's H100 GPU. SK Hynix is regarded as having the most advanced HBM semiconductor technology in the world.

Samsung Electronics has also been supplying HBM-PIM memory semiconductors for AMD GPU accelerator cards since October last year. PIM semiconductors not only store data like conventional DRAM but also perform computations, which speeds up data output for high-performance AI workloads.

Samsung Electronics is expanding its semiconductor solution ecosystem in collaboration with Naver, which independently develops generative AI.

Naver's large-scale AI "HyperCLOVA" has 204 billion parameters, which learn information much as synapses do in the human brain. Because the number of parameters is so large, the learning process requires a vast amount of data. If memory semiconductors cannot handle that data properly, bottlenecks occur, slowing the system and reducing performance. Samsung Electronics plans to create semiconductors optimized for HyperCLOVA to reduce these bottlenecks. The two companies signed a business agreement in December last year and formed a working-level task force (TF).

Competition is fierce not only in products but also in interface technology. Samsung Electronics developed the world's first CXL (Compute Express Link)-based DRAM technology in May 2021, and SK Hynix created a DDR5 DRAM-based CXL memory sample in August last year. Both companies are competing to lead CXL technology. CXL is used in memory semiconductors, GPUs, AI accelerators, and more.

A Samsung Electronics official said, "CXL is an innovative interface that can change the paradigm of next-generation memory semiconductors," adding, "As memory semiconductor companies, it is a path we must take and a field we must win."

© The Asia Business Daily(www.asiae.co.kr). All rights reserved.
