Ultra-Large AI Computation Requires Massive Amounts of Data

Vital to Secure 'Super-Gap' in PIM and HBM3 Memory

[Asia Economy Reporter Moon Chaeseok] Samsung Electronics and SK Hynix are racing to develop memory technologies for artificial intelligence (AI) services, typified by the conversational AI 'ChatGPT'. The aim is to stay ahead of US-based Micron and China's Yangtze Memory Technologies (YMTC), which are in close pursuit.

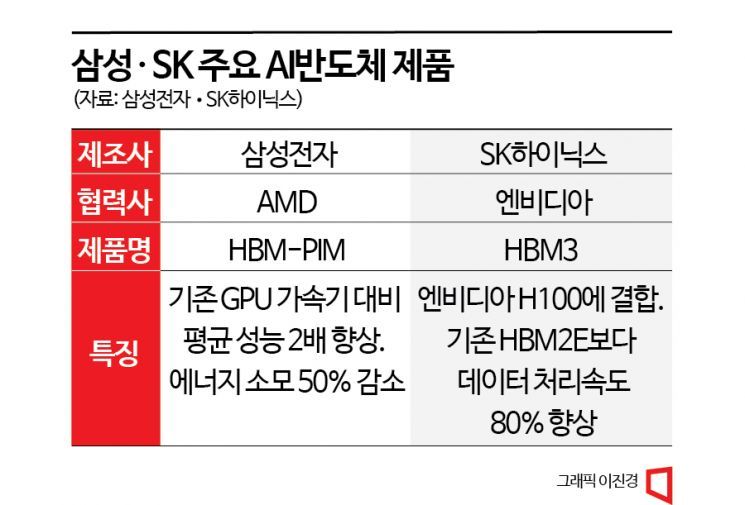

According to industry sources on the 3rd, Samsung Electronics and SK Hynix are supplying next-generation AI memory semiconductors to the world's top CPU (central processing unit) and GPU (graphics processing unit) companies, including US-based Intel, AMD, and Nvidia. The most notable products are Samsung Electronics' intelligent memory, known as processing-in-memory (PIM), and SK Hynix's high-bandwidth memory 3 (HBM3).

Samsung Electronics developed its HBM-PIM memory semiconductor in October last year. The product, which combines memory with an AI processor, is used in GPU accelerator cards from US-based AMD. On average, it delivers twice the performance of existing GPU accelerators while consuming half the energy. Unlike conventional DRAM, which only stores data, PIM semiconductors can also perform computations, which helps maximize data throughput for high-performance AI. Lee Jong-ho, Minister of Science and ICT, described it as "a project on which the fate of Korea's semiconductor industry depends," underscoring its potential.

Samsung Electronics is also pursuing partnerships to develop AI semiconductor solutions. It has agreed to develop new semiconductors that improve data processing efficiency for Naver's large-scale AI system 'HyperCLOVA'. In December last year, it signed a business agreement with Naver and launched a working-level task force.

SK Hynix's HBM3 is also regarded as world-class. The industry is watching closely because SK Hynix supplies HBM3 to Nvidia, the world's top GPU company. Supply volume has been increasing since the first shipment in June last year. HBM3 is used in Nvidia's H100 GPU. It improves data processing speed by 80% compared with the previous-generation HBM2E and can process 819 gigabytes (GB) of data per second, equivalent to processing 163 Full HD movies in one second.
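The bandwidth comparison above can be checked with simple arithmetic. The sketch below is illustrative only: the 819 GB per second and 80% figures come from the article, while the 5 GB size of a Full HD movie is an assumption not stated in the text, and the function names are hypothetical.

```python
# Figures quoted in the article.
HBM3_BANDWIDTH_GB_PER_S = 819
QUOTED_SPEEDUP_PERCENT = 80

# Assumption (not stated in the article): one Full HD movie is about 5 GB.
ASSUMED_MOVIE_SIZE_GB = 5


def movies_per_second(bandwidth_gb_per_s: float, movie_size_gb: float) -> int:
    """Whole movies of the given size that fit in one second of bandwidth."""
    return int(bandwidth_gb_per_s // movie_size_gb)


def implied_baseline(bandwidth_gb_per_s: float, speedup_percent: float) -> float:
    """Bandwidth of the older part implied by a quoted percentage speed-up."""
    return bandwidth_gb_per_s / (1 + speedup_percent / 100)


if __name__ == "__main__":
    print(movies_per_second(HBM3_BANDWIDTH_GB_PER_S, ASSUMED_MOVIE_SIZE_GB))
    print(round(implied_baseline(HBM3_BANDWIDTH_GB_PER_S, QUOTED_SPEEDUP_PERCENT)))
```

Under the assumed 5 GB per movie, the first calculation reproduces the article's figure of 163 movies per second. The second shows that an 80% improvement would imply a previous-generation bandwidth of roughly 455 GB per second; that is a derived estimate from the quoted numbers, not a figure given in the article.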

The market for Intel's 'Sapphire Rapids' server CPU, the biggest news in the memory industry in 2023, is another opportunity not to be missed. Intel announced last year that Sapphire Rapids combined with HBM memory will more than double AI workload performance compared with the previous generation. This points to strong synergy between Samsung's and SK Hynix's HBM semiconductors and Intel's supercomputer CPUs.

Both Samsung and SK Hynix are gearing up in anticipation of a surge in demand for AI semiconductors driven by services like ChatGPT. Kim Jae-jun, Vice President of Samsung Electronics' Memory Business Division, said during the Q4 2022 earnings conference call on the 31st of last month, "The launch of natural language-based AI services like ChatGPT is significant because it means large-scale language models have been commercialized," adding, "A combination of high-performance processors for training and inference and high-performance, high-capacity memory to support them is essential." He further stated, "We will actively respond by developing server memory semiconductors with capacities of 128GB or more."

Park Myung-soo, Vice President in charge of DRAM marketing at SK Hynix, also said during the conference call on the 1st, "Once AI is commercialized, the data to be handled will include not only text but also images, videos, and biometric signals," and added, "The launch of ChatGPT may accelerate the server memory semiconductor market's transition from 64GB to 128GB capacity."

© The Asia Business Daily(www.asiae.co.kr). All rights reserved.