Chinese IU Lookalike 'Chaiyu' Suspected of Using Deepfake

Deepfake: AI Technology That Synthesizes a Specific Person's Face

25% of Deepfake Victims Are Korean Female Celebrities

A Chinese woman gained popularity on TikTok by showcasing her resemblance to IU. Photo by Online Community Capture.

[Asia Economy Reporter Heo Midam] Recently, the real appearance of Chinese beauty creator 'Chaiyu,' who has gained popularity for her resemblance to singer IU, was revealed, raising suspicions that the woman used 'deepfake' technology. Deepfake is a technology that uses artificial intelligence to composite a specific person's face into videos.

Some pointed out that since female celebrities are often targets of 'deepfake porn' crimes, those who imitate specific celebrities using deepfake technology should not be left unchecked. Experts urged investigative agencies to respond actively.

Recently, a Chinese beauty creator whose face is strikingly similar to IU's appeared on the Chinese-owned app 'TikTok' and became a hot topic.

The woman not only mimicked IU's distinctive expressions and clothing, but her facial features, such as the shape of her eyes and the contour of her face, also closely resembled IU's. This led some to call her 'Chaiyu' (China + IU), meaning 'Chinese IU.' Some Chinese netizens even mistook her for the real IU and sent her messages of support.

The real face of the Chinese woman who was called the 'IU look-alike.' Photo by Online Community Capture.

However, a netizen recently claimed that 'Chaiyu' does not actually look like IU and released some of her videos as evidence.

The footage captured a moment when the deepfake effect briefly disappeared, revealing a woman who, apart from her hairstyle, did not resemble IU. The netizen argued that she had used deepfake technology to make herself look like IU.

Deepfake is a portmanteau of 'deep learning' and 'fake,' and refers to edited content in which artificial intelligence (AI) is used to composite a specific person's face or body into a chosen video. Such content can be created easily with just 15 seconds of original footage showing the person's various expressions, along with webcam and voice data.

The problem is that such composites are being used to sexually exploit others. According to a deepfake research report released by the Dutch cybersecurity company 'Deeptrace,' 96% of deepfake videos circulating online in 2019 were pornographic. Among the victims of face synthesis, Korean female celebrities such as K-pop singers accounted for 25%, second only to American and British actresses (46%).

As a result, calls for new legislation grew louder last year, as critics pointed out that existing penalties for deepfake-related crimes were insufficient. In response, the National Assembly passed the 'Deepfake Punishment Act' (an amendment to the Special Act on Sexual Violence Crimes) in March last year. However, because it contained no penalties for people who possess such videos or who commission their production, it was criticized as a 'half-baked' law.

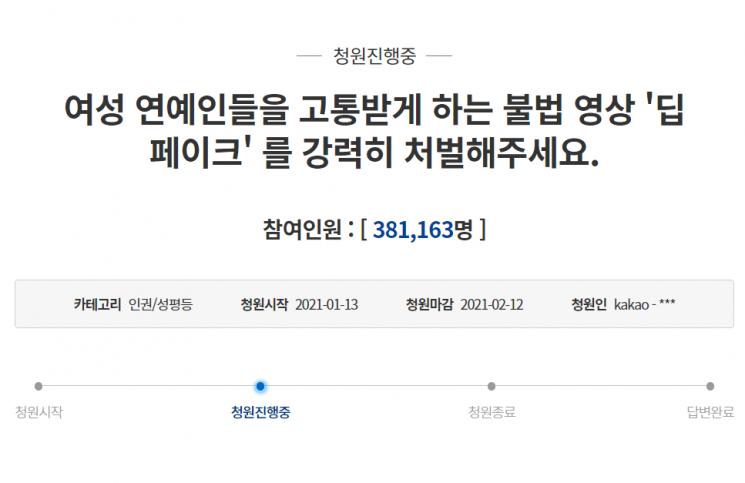

On the 12th, a post titled "Please Strongly Punish Illegal Deepfake Videos That Cause Suffering to Female Celebrities" was uploaded on the Blue House National Petition Board. Photo by Blue House National Petition Board capture.

Even after the punishment law took effect, deepfake videos can still be easily found on social networking services (SNS) such as Twitter, sparking controversy.

Consequently, calls for stronger punishment have emerged. On the 13th, a petition titled "Please strongly punish illegal deepfake videos that cause suffering to female celebrities" was posted on the Blue House National Petition Board. As of 2:50 PM on the 28th, about 381,160 people had signed it.

The petitioner emphasized, "Deepfake is a clear form of sexual violence. Female celebrities have not only become victims of sexual crimes but also have had illegal deepfake videos sold," urging "strong punishment and investigation of deepfake sites and users."

Experts urged investigative agencies to respond actively, noting that female celebrities may suffer greater harm from deepfake crimes.

Seo Seunghee, head of the Korea Cyber Sexual Violence Response Center, said, "Deepfake sexual exploitation materials are often produced and distributed on platforms like Telegram, where it is difficult to trace perpetrators. Therefore, even if investigative agencies start investigations, it is hard to identify the perpetrators," adding, "Because of this, victims sometimes hesitate to report deepfake crimes, fearing whether the criminals will be caught."

She continued, "Especially for female celebrities, it is difficult to report their own deepfake videos. When reported, many people inevitably view and pay attention to those videos, which can cause even greater harm."

Seo emphasized, "Although the 'Deepfake Punishment Act' was passed last year, demands for 'strong punishment' continue through national petitions. This is related to the ongoing production and distribution of deepfake materials despite the law," adding, "There are still investigative limitations. Investigative agencies must strive to overcome these challenges."

© The Asia Business Daily(www.asiae.co.kr). All rights reserved.