AI Model Developed to Change 3D Character Poses to Match Photo Movements Without Shape Distortion

Lowering Barriers to 3D Content Creation for Everyone in the Metaverse... Spotlighted at SIGGRAPH ASIA

Can a still photo walk, swing its arms, and dance?

An artificial intelligence technology has been developed that lets a 3D character on screen precisely mimic the pose of a 2D character in a photo.

On December 25, Professor Kyungdon Joo's team at the UNIST Graduate School of Artificial Intelligence announced that it had developed DeformSplat (Rigidity-aware 3D Gaussian Deformation), an AI technology that can change the pose of a 3D character represented with 3D Gaussian Splatting without distorting its shape.

Research team, (from left) Professor Kyungdon Joo, Researcher Jinhyuk Kim (first author), Researcher Jaehun Bang, Researcher Seunghyun Seo. Provided by UNIST

3D Gaussian Splatting is a technique that reconstructs 3D objects as collections of 3D Gaussian points from 2D inputs such as photos. However, animating characters reconstructed this way for use in cartoons or games still required footage captured from multiple angles or continuous video. Without such data, there was a high risk of shape distortion, such as arms or legs bending unnaturally like taffy as they moved.
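For readers unfamiliar with the representation, the minimal sketch below shows what a single Gaussian "point" roughly carries in 3D Gaussian Splatting (a center, a per-axis extent, an orientation, an opacity and a color) and how its center lands on a 2D image through a standard pinhole camera. The class and function names and the camera parameters are illustrative assumptions, not DeformSplat's code; real 3DGS also stores view-dependent color as spherical-harmonic coefficients, simplified here to one RGB triple.

```python
from dataclasses import dataclass


@dataclass
class Gaussian3D:
    """One splat (hypothetical, simplified): a 3D Gaussian primitive."""
    mean: tuple[float, float, float]             # center (x, y, z) in camera coordinates
    scale: tuple[float, float, float]            # standard deviation along each local axis
    rotation: tuple[float, float, float, float]  # unit quaternion (w, x, y, z)
    opacity: float                               # 0 (transparent) to 1 (opaque)
    rgb: tuple[float, float, float]              # simplification of spherical-harmonic color


def project_center(g: Gaussian3D, fx: float, fy: float, cx: float, cy: float) -> tuple[float, float]:
    """Pinhole projection of the Gaussian's center onto the image plane, in pixels."""
    x, y, z = g.mean
    if z <= 0:
        raise ValueError("Gaussian is behind the camera")
    return (fx * x / z + cx, fy * y / z + cy)


g = Gaussian3D(mean=(0.2, -0.1, 2.0), scale=(0.05, 0.05, 0.01),
               rotation=(1.0, 0.0, 0.0, 0.0), opacity=0.9, rgb=(0.8, 0.6, 0.5))
print(project_center(g, fx=500.0, fy=500.0, cx=320.0, cy=240.0))  # (370.0, 215.0)
```

A character is made of many thousands of such primitives; moving a limb means moving the Gaussians that belong to it, which is why uncoordinated per-point motion can stretch a limb like taffy.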

The research team's DeformSplat changes a 3D character's pose to match the pose shown in a photo while avoiding shape distortion, using just a single image as input.

In experiments, 3D characters animated with this model kept natural poses with minimal shape distortion, even when viewed from the side or from behind. For example, when an arm-raising motion was given as input, the proportions of the arm and torso stayed consistent not only from the front but also from the side and rear, and joints almost never stretched like rubber.
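One simple way to put a number on "joints stretching like rubber" is to compare each bone's length before and after the pose change. The sketch below does that; the function name, joint names and coordinates are hypothetical, and this is not the evaluation protocol used in the paper, which the article does not describe.

```python
import math


def bone_stretch(rest, posed, bones):
    """Relative length change of each bone after deformation (hypothetical metric).

    rest, posed: dict mapping joint name -> (x, y, z) coordinates.
    bones: list of (joint_a, joint_b) pairs.
    Returns {bone: |posed_length - rest_length| / rest_length};
    0.0 means the bone kept its length, about 0.33 means it stretched by a third.
    """
    out = {}
    for a, b in bones:
        rest_len = math.dist(rest[a], rest[b])
        posed_len = math.dist(posed[a], posed[b])
        out[(a, b)] = abs(posed_len - rest_len) / rest_len
    return out


bones = [("shoulder", "elbow"), ("elbow", "wrist")]
# Arm held out sideways (units: cm).
rest = {"shoulder": (0, 150, 0), "elbow": (30, 150, 0), "wrist": (60, 150, 0)}
# Same arm raised straight up: bone lengths preserved.
posed_rigid = {"shoulder": (0, 150, 0), "elbow": (0, 180, 0), "wrist": (0, 210, 0)}
# Raised arm whose forearm has stretched "like rubber".
posed_rubber = {"shoulder": (0, 150, 0), "elbow": (0, 180, 0), "wrist": (0, 220, 0)}

print(bone_stretch(rest, posed_rigid, bones))   # both bones: 0.0
print(bone_stretch(rest, posed_rubber, bones))  # forearm: about 0.333
```

A rigid arm-raise keeps every ratio at zero, while the "rubber" forearm shows up immediately as a nonzero value on one bone.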

The research team built this model on two techniques: Gaussian-to-Pixel Matching and Rigid Part Segmentation. Gaussian-to-Pixel Matching links the Gaussian points that make up the 3D character to pixels in the 2D photo, transferring the pose information from the photo to the 3D character. Rigid Part Segmentation automatically identifies and groups the rigid parts that must move together during pose deformation, so that objects such as robots or dolls can move naturally without their forms becoming distorted.
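The two ideas can be illustrated with a toy sketch, assuming simplifications that are not DeformSplat's actual method (which the article does not detail): correspondence is reduced to a naive nearest-pixel lookup for already-projected Gaussian centers, and rigid grouping merges neighboring points whose mutual distance survives the deformation, using union-find. The function names, the tolerance `tol` and the sample coordinates are hypothetical.

```python
import math


def match_to_pixels(projected, targets):
    """Naive correspondence (hypothetical): for each projected Gaussian center (u, v),
    return the index of the nearest target pixel location."""
    return [min(range(len(targets)), key=lambda j: math.dist(p, targets[j]))
            for p in projected]


def rigid_groups(rest, moved, neighbors, tol=1e-3):
    """Group points whose neighbor-to-neighbor distances are preserved (hypothetical).

    rest, moved: lists of point coordinates before and after deformation.
    neighbors: (i, j) index pairs to test.
    A pair counts as rigidly connected if its length changes by at most
    tol (relative); rigidly connected points are merged with union-find.
    """
    parent = list(range(len(rest)))

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving keeps trees shallow
            i = parent[i]
        return i

    for i, j in neighbors:
        d_rest = math.dist(rest[i], rest[j])
        d_moved = math.dist(moved[i], moved[j])
        if abs(d_moved - d_rest) <= tol * d_rest:
            parent[find(i)] = find(j)

    groups = {}
    for i in range(len(rest)):
        groups.setdefault(find(i), []).append(i)
    return sorted(groups.values())


# Matching: two projected centers, two candidate pixels from the photo.
print(match_to_pixels([(10.0, 10.0), (50.0, 52.0)], [(48.0, 50.0), (11.0, 9.0)]))  # [1, 0]

# Rigid grouping: points 0-1 form an "upper arm", 2-3 a "forearm";
# the forearm is rotated 90 degrees about a joint at (1.5, 0).
rest = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (3.0, 0.0)]
moved = [(0.0, 0.0), (1.0, 0.0), (1.5, 0.5), (1.5, 1.5)]
print(rigid_groups(rest, moved, neighbors=[(0, 1), (1, 2), (2, 3)]))  # [[0, 1], [2, 3]]
```

The distance test only looks at the listed pairs, so a part that rotates about one of the sampled points themselves would wrongly be merged with its neighbor; a practical system would need richer neighborhoods or a more robust rigidity criterion.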

Professor Kyungdon Joo said, "Previous technologies had a limitation: when a 3D object was animated using only a single photo as input, its shape was severely distorted. The AI we developed takes the object's structural characteristics into account, distinguishing the parts that act as its skeleton and generating movement for them. This technology is expected to lower the barriers to entry in 3D content creation fields such as the metaverse, games, and animation, which have traditionally relied on specialized personnel and expensive equipment."

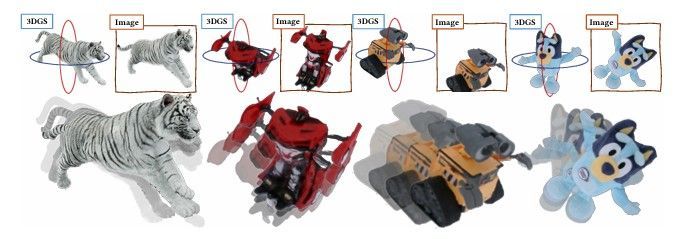

Function of the developed AI model. It can change the pose of a 3D character to the pose of the character inside the red box.

The research results have been accepted for presentation at SIGGRAPH ASIA 2025 (The 18th ACM SIGGRAPH Conference and Exhibition on Computer Graphics and Interactive Techniques in Asia).

SIGGRAPH ASIA is organized by ACM, the world's largest international association in the field of computer science, and is considered the most influential conference in graphics and interactive technology. This year's conference was held in Hong Kong from December 15 to 18.

This research was supported by the Institute of Information & Communications Technology Planning & Evaluation under the Ministry of Science and ICT, as well as the UNIST Graduate School of Artificial Intelligence.

© The Asia Business Daily(www.asiae.co.kr). All rights reserved.