Research team led by Professor Kyubin Lee from the Department of AI Convergence at Gwangju Institute of Science and Technology.

The Gwangju Institute of Science and Technology (GIST) announced on September 9 that the research team led by Professor Kyubin Lee from the Department of AI Convergence has developed and released "GraspClutter6D," the world’s largest robot grasping dataset that precisely reflects the complexities of real-world environments.

The dataset is expected to serve as a core foundation for building a "robot foundation model" that operates reliably in cluttered, real-world settings, addressing a key limitation of earlier robot AI, which worked only in simple, organized scenarios. It is also expected to contribute significantly to "Physical AI" research, which has recently been gaining global attention. The research was conducted in collaboration with the Korea Institute of Machinery and Materials (KIMM).

Grasping an object is one of the most fundamental yet challenging problems in robotics. In real-world situations such as retrieving items from a warehouse or organizing objects at home, items overlap and are partially hidden, which makes it difficult for robots to recognize objects accurately and grasp them reliably.

Although recent advancements in deep learning technology have greatly improved robotic grasping performance, existing training datasets have mostly assumed tidy and simple environments, limiting their applicability to real-world scenarios. As a result, robots have experienced a significant drop in performance when faced with cluttered objects or diverse backgrounds in actual settings.

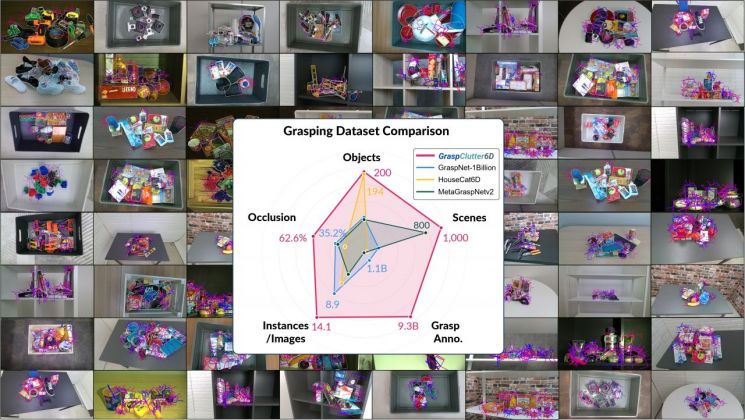

For example, the widely used global dataset "GraspNet-1Billion" contains fewer than nine objects per scene on average, and the occlusion rate is only about 35 percent, which is insufficient to fully represent real-world conditions.

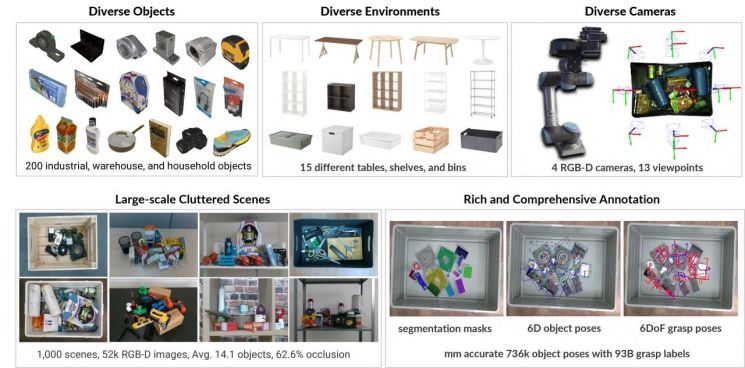

To overcome these limitations, the research team built "GraspClutter6D," a massive dataset that accurately replicates real-life and industrial environments. The team created 75 different settings, including boxes, shelves, and tables, and used four RGB-D (color plus depth) cameras mounted on a robotic arm to collect 52,000 images from 1,000 scenes.

The dataset includes high-quality 3D models of 200 real objects, 736,000 six-dimensional (6D) object poses, where a 6D pose combines an object's 3D position and 3D orientation, and as many as 9.3 billion 6D robot grasp poses. This scale far surpasses that of previously released public datasets.
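To put these totals in perspective, the short sketch below derives rough per-scene and per-image averages from the figures quoted above. The variable names are illustrative, and the per-image average assumes that each object pose annotation is counted once per image, which the article does not state.

```python
# Totals quoted in the article; variable names are illustrative only.
images = 52_000
scenes = 1_000
object_poses = 736_000
grasp_poses = 9_300_000_000

# Average number of images captured per scene.
images_per_scene = images / scenes

# Assumption: each 6D object pose annotation is counted once per image.
poses_per_image = object_poses / images

# Average number of 6D grasp poses per scene.
grasps_per_scene = grasp_poses / scenes

print(f"images per scene: {images_per_scene:.0f}")
print(f"object poses per image: {poses_per_image:.1f}")
print(f"grasp poses per scene: {grasps_per_scene:,.0f}")
```

Under that assumption, the average of roughly 14 annotated objects per image is well above the "fewer than nine objects per scene" cited for GraspNet-1Billion.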

Along with the dataset, the research team released benchmark results for state-of-the-art AI recognition and grasping models. Their evaluation of the latest object segmentation, 6D pose estimation, and grasp detection methods showed that the performance of existing AI technologies dropped sharply in heavily cluttered, complex environments. However, AI models trained with the "GraspClutter6D" dataset showed significant performance improvements in real-world robotic grasping experiments.

In simple environments (five objects), the grasping success rate improved from 77.5 percent to 93.4 percent, a 15.9 percentage point increase. In complex environments (15 objects), the success rate rose from 54.9 percent to 67.9 percent, an improvement of 13.0 percentage points. This demonstrates that "GraspClutter6D" is not just a large-scale dataset, but a "realistic dataset" that faithfully reflects actual environments.
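The reported gains are percentage-point differences, which are not the same as relative improvements. The sketch below computes both from the success rates quoted above; the `improvement` helper is a hypothetical name introduced here for illustration, and the relative figures are derived here rather than reported by the research team.

```python
def improvement(before: float, after: float) -> tuple[float, float]:
    """Return (percentage-point gain, relative gain in percent), rounded to 0.1."""
    points = round(after - before, 1)
    relative = round(100 * (after - before) / before, 1)
    return points, relative


# Success rates (percent) quoted in the article.
simple = improvement(77.5, 93.4)     # five objects
cluttered = improvement(54.9, 67.9)  # 15 objects

print(f"simple: +{simple[0]} points ({simple[1]}% relative)")
print(f"cluttered: +{cluttered[0]} points ({cluttered[1]}% relative)")
```

Measured this way, the cluttered setting shows the smaller gain in percentage points but the larger gain relative to its lower starting success rate.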

Professor Kyubin Lee stated, "This achievement not only faithfully replicates the complex situations encountered in industrial and home environments for the first time, but also provides an important foundation for Physical AI research, which aims for robots to learn and act in the real world. In the future, this will enable a significant leap forward in the use of robots across various fields such as logistics, manufacturing, and everyday services."

© The Asia Business Daily (www.asiae.co.kr). All rights reserved.