Ministry of the Interior and Safety and National Forensic Service Develop AI Deepfake Analysis Model

13 Election-Related and 2 Digital Sex Crime Cases Analyzed in Two Months

In one recent case, a photo of a person's face taken from social media was composited onto a nude image and distributed through an online messenger. Using its 'AI Deepfake Analysis Model,' the National Forensic Service detected distortions along the boundary of the synthesized face in the distributed material, proving that it was a deepfake. The forensic result served as decisive scientific evidence for blocking false videos and initiating investigations.

Going forward, the AI model developed by the government is expected to be widely used to verify the authenticity of images and videos suspected to be deepfakes.

The Ministry of the Interior and Safety and the National Forensic Service completed development and verification of the 'AI Deepfake Analysis Model,' which uses AI technology to determine whether content is a deepfake, in April. On July 30, they disclosed the results of its use in deepfake crime investigations over the past two months. By deploying the model for forensic examination of deepfake evidence, the two agencies expect a groundbreaking turning point in deepfake crime investigations.

At the request of investigative agencies such as the National Police Agency, the model was used to analyze 60 pieces of evidence in 15 deepfake cases during May and June. Of these, 13 cases were related to presidential candidates in the 21st presidential election, and 2 cases were digital sex crimes. The model was also shared with the National Election Commission during the election period, contributing to the detection and deletion of more than 10,000 illegal deepfake election materials online, including on YouTube.

The model was developed because deepfake crimes have been increasing explosively while investigative agencies have struggled to analyze related evidence for lack of detection technology. According to the Korea Communications Standards Commission, requests for corrective action regarding deepfake sexual crime videos surged from 3,574 cases in 2022 to 7,187 cases in 2023 and to 23,107 cases last year.
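To put the surge in perspective, the growth implied by these figures can be worked out directly. The short Python sketch below uses only the three counts reported above; the function and variable names are illustrative, not from the source.

```python
# Corrective-action requests for deepfake sexual crime videos,
# as reported in the article (Korea Communications Standards Commission).
requests_by_period = {"2022": 3574, "2023": 7187, "last year": 23107}


def period_over_period_growth(counts):
    """Return (period, percent increase over the previous period) pairs."""
    labels = list(counts)
    growth = []
    for prev, cur in zip(labels, labels[1:]):
        pct = (counts[cur] - counts[prev]) / counts[prev] * 100
        growth.append((cur, round(pct, 1)))
    return growth


def overall_multiple(counts):
    """Return the ratio of the last count to the first count."""
    values = list(counts.values())
    return round(values[-1] / values[0], 2)


if __name__ == "__main__":
    for period, pct in period_over_period_growth(requests_by_period):
        print(f"{period}: +{pct}% over the previous period")
    print(f"overall: {overall_multiple(requests_by_period)}x the 2022 level")
```

On these figures, requests roughly doubled from 2022 to 2023, more than tripled the following year, and ended at about 6.5 times the 2022 level.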

Development of the model drew on approximately 2.31 million pieces of deepfake data, including public datasets and self-produced content. Detection capability was further improved by training the latest deep learning algorithms on this large volume of data and by continuously applying feedback to refine performance.

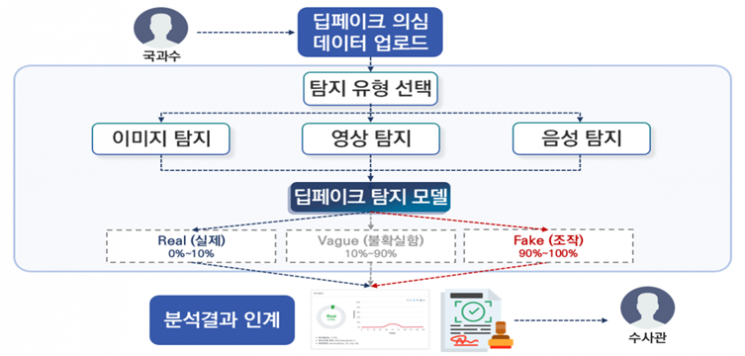

The resulting model automatically detects traces of deepfakes and, for a suspicious file, predicts the probability of synthesis and the manipulation rate over time, enabling rapid determination of whether content is a deepfake. It can also detect manipulation in specific facial features and analyze evidence in which some data has been lost through repeated uploading and downloading.
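The article does not describe how the model computes these outputs. Purely as an illustration of what a "probability of synthesis" and a "manipulation rate over time" could look like, the Python sketch below assumes a hypothetical detector that has already assigned each video frame a score between 0 and 1. The function name, the 0.5 threshold, and the one-second window are all assumptions, not details of the National Forensic Service's model.

```python
from statistics import mean


def summarize_scores(frame_scores, fps, threshold=0.5, window_s=1.0):
    """Summarize hypothetical per-frame synthesis scores for one video.

    frame_scores: per-frame probabilities in [0, 1] from an assumed detector.
    fps: frames per second of the video.
    threshold: score at or above which a frame counts as manipulated (assumed).
    window_s: length in seconds of each time window (assumed).
    """
    if not frame_scores:
        raise ValueError("frame_scores must not be empty")
    frames_per_window = max(1, int(round(fps * window_s)))
    timeline = []
    for start in range(0, len(frame_scores), frames_per_window):
        chunk = frame_scores[start:start + frames_per_window]
        flagged = sum(score >= threshold for score in chunk)
        # (window start time in seconds, share of flagged frames in the window)
        timeline.append((start / fps, flagged / len(chunk)))
    return {
        # Simple average of frame scores as an overall file-level estimate.
        "synthesis_probability": mean(frame_scores),
        "manipulation_rate_over_time": timeline,
    }


if __name__ == "__main__":
    # Made-up scores for a two-second clip at 2 frames per second.
    print(summarize_scores([0.1, 0.2, 0.9, 0.8], fps=2))
```

A timeline of this kind would let an examiner see at a glance which stretches of a video are suspect, rather than relying on a single score for the whole file.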

This development is significant in that it officially systematized deepfake forensics in South Korea for the first time, overcoming past technical limitations. With the development of the analysis model, an investigation system based on scientific evidence has been established. The Ministry of the Interior and Safety and the National Forensic Service plan to create synergy by linking this model with the 'Voice Phishing Speech Analysis Model' developed by the Ministry in 2023.

The model's scope of application will also be expanded. Internally, the National Forensic Service plans to integrate the currently standalone model into the Digital Evidence Authentication System (DAS). In addition, the model will be gradually deployed to agencies such as the Ministry of Gender Equality and Family and the Korea Communications Commission to strengthen each agency's ability to detect and respond to deepfake content.

Lee Bongwoo, Director of the National Forensic Service, said, "We will do our utmost to enhance the speed and accuracy of forensic analysis by strengthening scientific investigation capabilities based on AI technology," and added, "We will proactively respond to the rapidly changing advanced technology environment to establish a scientific investigation system that the public can trust."

Lee Yongseok, Director of the Digital Government Innovation Office at the Ministry of the Interior and Safety, stated, "The Ministry will continue to actively introduce AI and data analysis into administrative fields to ensure public safety and stability in people's livelihoods."

© The Asia Business Daily(www.asiae.co.kr). All rights reserved.