Joint Research Team Develops Vision Sensor with Reduced Data Transmission and Enhanced Accuracy

Mimics Dopamine-Glutamate Signaling Pathway; Applications in Autonomous Driving and Robotics

A vision sensor that efficiently and accurately extracts object contour information, even under fluctuating brightness conditions, has been developed by mimicking the neural transmission principles of the human brain.

This advancement is expected to help autonomous driving, drone, and robotics technologies recognize their surroundings faster and more accurately.

The research team led by Professor Choi Mungi from the Department of Materials Science and Engineering at UNIST, in collaboration with Dr. Choi Changsoon’s team at the Korea Institute of Science and Technology (KIST) and Professor Kim Daehyung’s team at Seoul National University, announced on June 4 that it had developed a synapse-mimicking robotic vision sensor.

Research team (from left): Kwon Jongik, researcher at UNIST (co-first author); Choi Mungi, professor at UNIST; Choi Changsoon, PhD, KIST; Kim Daehyung, professor at Seoul National University; and Kim Jisoo, researcher at Seoul National University (co-first author). Provided by UNIST

The vision sensor serves as the "eye" of a machine, and the information it detects is sent to a processor that acts as the "brain." If this information is transmitted unfiltered, the data volume grows, slowing processing and lowering recognition accuracy because the processor must also handle irrelevant information. These problems become even more pronounced when lighting changes abruptly or when bright and dark areas are mixed in the same scene.

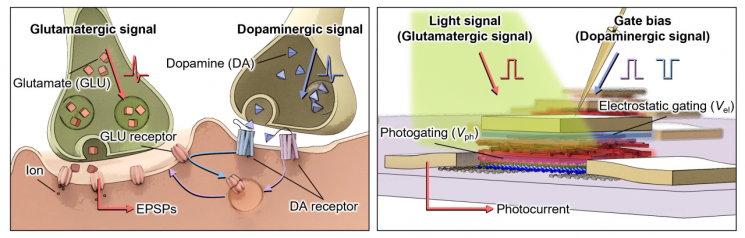

The joint research team developed a vision sensor that selectively extracts only high-contrast visual information, such as contours, by mimicking the dopamine-glutamate signaling pathway found in brain synapses. In the brain, dopamine enhances important information by regulating glutamate, and the sensor was designed to replicate this principle.

Professor Choi Mungi explained, "By applying in-sensor computing technology, which imparts some brain functions to the eye itself, the sensor can autonomously adjust image brightness and contrast and filter out unnecessary information. This can fundamentally reduce the burden on robotic vision systems that must process video data at rates of tens of gigabits per second."

Experimental results showed that the vision sensor reduced the volume of transmitted video data by about 91.8% compared with conventional methods, while reaching about 86.7% accuracy in object recognition simulations.
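As a back-of-the-envelope check of what a roughly 91.8% cut means in practice, the sketch below applies it to a hypothetical raw stream of 10 Gbit/s. That rate is an assumption chosen at the low end of the "tens of gigabits per second" Professor Choi mentions; the article gives no specific figure, and the function names are illustrative only.

```python
def remaining_rate(raw_rate_gbps, reduction):
    """Data rate left after removing the given fraction of the raw stream."""
    return raw_rate_gbps * (1.0 - reduction)


def reduction_factor(reduction):
    """How many times smaller the transmitted stream is than the raw one."""
    return 1.0 / (1.0 - reduction)


raw = 10.0   # hypothetical raw stream in Gbit/s (assumed, not from the study)
cut = 0.918  # the roughly 91.8% reduction reported in the article

print(f"{remaining_rate(raw, cut):.2f} Gbit/s")  # 0.82 Gbit/s
print(f"{reduction_factor(cut):.1f}x smaller")   # 12.2x smaller
```

In other words, a 91.8% reduction leaves about 8.2% of the data, so the processor would receive a stream roughly twelve times smaller, whatever the actual raw rate is.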

The sensor is built from phototransistors, whose current response changes with the gate voltage. The gate voltage plays the role of dopamine in the brain, modulating the response intensity, while the phototransistor's output current mimics glutamate signal transmission. Adjusting the gate voltage makes the sensor more sensitive to light, allowing it to detect contours clearly even in dark environments. In addition, the output current is designed to depend not only on absolute brightness but also on the brightness difference from the surrounding area. As a result, boundaries with sharp brightness changes (that is, contours) elicit a stronger response, while uniformly lit backgrounds are suppressed.
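To make the contrast mechanism more concrete, here is a minimal toy model in Python. It is not the team's device physics. It assumes, hypothetically, that each pixel's response equals a gate-dependent gain times the absolute difference between the pixel and the mean of its neighbours. The linear gain `1 + k * v_gate`, the threshold, and all function names are illustrative inventions, and the absolute-brightness term the article mentions is left out for simplicity.

```python
# Toy model (hypothetical, not the published device model): a pixel's output grows
# with its brightness difference from its neighbours, scaled by a gate-voltage gain.

def local_mean(img, r, c):
    """Mean brightness of the up-to-8 neighbours of pixel (r, c), clipped at the border."""
    rows, cols = len(img), len(img[0])
    vals = [img[i][j]
            for i in range(max(0, r - 1), min(rows, r + 2))
            for j in range(max(0, c - 1), min(cols, c + 2))
            if (i, j) != (r, c)]
    return sum(vals) / len(vals)


def gate_gain(v_gate, k=0.5):
    """Hypothetical linear gain: a higher gate voltage raises sensitivity (the 'dopamine' role)."""
    return 1.0 + k * v_gate


def contour_mask(img, v_gate, threshold):
    """Return 1 where the gain-scaled local contrast reaches the threshold, else 0."""
    g = gate_gain(v_gate)
    return [[1 if g * abs(img[r][c] - local_mean(img, r, c)) >= threshold else 0
             for c in range(len(img[0]))]
            for r in range(len(img))]


def transmitted_fraction(mask):
    """Fraction of pixels that would be passed on to the processor."""
    return sum(map(sum, mask)) / (len(mask) * len(mask[0]))


if __name__ == "__main__":
    # A dim 6x6 scene: brightness 2 on the left half, 4 on the right half.
    dark = [[2, 2, 2, 4, 4, 4] for _ in range(6)]

    low = contour_mask(dark, v_gate=0.0, threshold=1.0)   # gain 1: faint edge missed
    high = contour_mask(dark, v_gate=2.0, threshold=1.0)  # gain 2: edge detected

    print(transmitted_fraction(low))   # 0.0
    print(high[0])                     # [0, 0, 1, 1, 0, 0]
    print(transmitted_fraction(high))  # 0.3333333333333333
```

In this toy, raising `v_gate` from 0 to 2 lifts the faint edge in a dim scene above the threshold, while the uniform regions on either side stay at zero and are never transmitted. This mirrors, in spirit only, the gate-tuned sensitivity and background suppression described above.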

Dr. Choi Changsoon of KIST stated, "This technology can be widely applied to various vision-based systems such as robots, autonomous vehicles, drones, and IoT devices. It can simultaneously enhance data processing speed and energy efficiency, making it a core solution for next-generation AI vision technology."

This research was supported by the National Research Foundation of Korea’s Excellent Young Researcher Program, the KIST Future Semiconductor Technology Development Project, and the Institute for Basic Science.

The research results were published online in Science Advances on May 2.

© The Asia Business Daily(www.asiae.co.kr). All rights reserved.