Insurance Fraud Combined with AI: Are We Prepared?

Current Technology Struggles to Detect AI-Driven Insurance Fraud

Overseas AI Experts Warn: "Image-Based Authentication Is Now Completely Neutralized"

"Please generate three receipts with only the dates and treatment items changed."

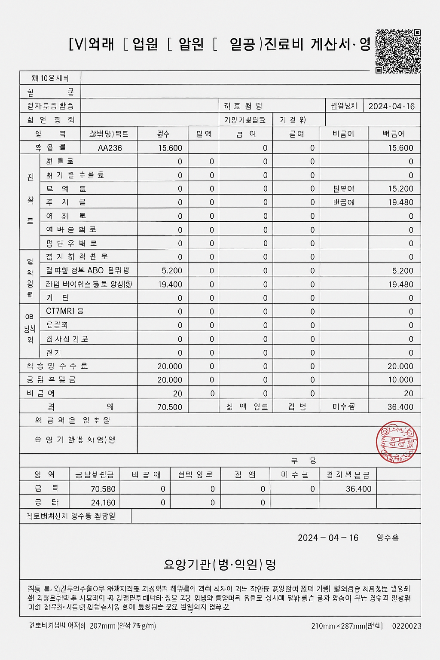

I uploaded a hospital treatment receipt that I had previously submitted to an insurance company to the image generation feature of OpenAI's generative AI 'ChatGPT-4o', and made this request. Within one minute, ChatGPT produced three similar receipts. Because of current technical limitations, some Korean characters in the images came out garbled, but the figures, QR codes, and official seals were replicated almost perfectly. Follow-up requests to add signs of handling, such as stains and creases, were also applied immediately.

A fake hospital treatment receipt created by this newspaper's reporter using the image generation feature of OpenAI's generative AI 'ChatGPT-4o'. Some Korean characters are garbled because of current technical limitations, but the QR code and official seal closely mimic the real thing. Photo by Donghyun Choi

Experts in insurance fraud detection and investigation are concerned that insurance fraud will become even more serious. They warned that once fraudsters begin actively exploiting AI, current response methods will be powerless. Some argued that access to data should be expanded so that AI-based fraud prevention can become more sophisticated.

Currently, each insurance company operates its own Insurance Fraud Detection System (IFDS), which compiles information such as customers' accident histories, types of incidents, gender, and age. By linking this with the Insurance Credit Information Integrated Inquiry System (ICIS) of the Korea Credit Information Services, insurers can check a particular policyholder’s entire insurance contract history, insurance claim records, and treatment history. On top of this, insurers apply their own AI technologies to detect suspicious customers.
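The linking step amounts to gathering one policyholder's records from several insurers into a single view. The Python sketch below illustrates the idea under stated assumptions: the `Record` fields, the sample insurers and IDs, and the `policyholder_view` function are hypothetical and do not reflect the actual IFDS or ICIS data formats, which the article does not describe.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    insurer: str       # hypothetical insurer name
    policyholder: str  # hypothetical policyholder ID
    kind: str          # "contract", "claim", or "treatment"


def policyholder_view(records, policyholder):
    """Combine one policyholder's records from every insurer into a single summary."""
    mine = [r for r in records if r.policyholder == policyholder]
    counts = Counter(r.kind for r in mine)  # missing kinds count as 0
    return {
        "contracts": counts["contract"],
        "claims": counts["claim"],
        "treatments": counts["treatment"],
        "insurers": len({r.insurer for r in mine}),
    }


# One insurer's own records plus records from a shared industry source (all made up).
own = [Record("InsurerA", "P1", "contract"), Record("InsurerA", "P1", "claim")]
shared = [
    Record("InsurerB", "P1", "contract"),
    Record("InsurerC", "P1", "contract"),
    Record("InsurerC", "P1", "claim"),
    Record("InsurerB", "P2", "claim"),
]

print(policyholder_view(own + shared, "P1"))
# → {'contracts': 3, 'claims': 2, 'treatments': 0, 'insurers': 3}
```

Viewed alone, InsurerA sees one contract and one claim for P1; the combined view shows three contracts across three insurers, the kind of pattern that cross-company linking is meant to surface.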

For example, if there is a sudden surge in patients claiming insurance for a specific disease at a particular hospital, the AI flags it. If the AI finds that a significant number of these patients bought their policies through agents at a specific branch, they are classified as a suspicious group. If patients frequently travel from distant regions or repeatedly file claims together with family members, the suspicion level rises. Through such filtering, hospitals, agents, or customers whose names come up frequently are placed under special scrutiny.
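The filtering described above is essentially a set of layered rules. Below is a minimal Python sketch of that kind of screening, assuming made-up field names and thresholds: `SURGE_RATIO`, `BRANCH_SHARE`, `FAR_KM`, `FAMILY_REPEAT`, the `Claim` fields, and the `screen` function are all hypothetical, not settings or code that any insurer has disclosed.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Claim:
    patient: str
    hospital: str
    disease: str
    agent_branch: str    # branch of the agent who sold the policy
    distance_km: float   # distance from patient's home to the hospital
    family_claims: int   # claims filed together with family members


# Illustrative thresholds only (hypothetical).
SURGE_RATIO = 3.0    # current volume vs. baseline that triggers review
BRANCH_SHARE = 0.5   # share of patients from a single agent branch
FAR_KM = 100.0       # "distant region" cutoff
FAMILY_REPEAT = 2    # repeated family claims cutoff


def surging(claims, baseline, hospital, disease):
    """True if claims for this hospital/disease reach SURGE_RATIO x baseline (baseline floored at 1)."""
    n = sum(1 for c in claims if c.hospital == hospital and c.disease == disease)
    return n >= SURGE_RATIO * max(baseline, 1)


def screen(claims, baseline, hospital, disease):
    """Return (suspicious_group flag, per-patient suspicion scores)."""
    if not surging(claims, baseline, hospital, disease):
        return False, {}
    group = [c for c in claims if c.hospital == hospital and c.disease == disease]
    # Most common agent branch among the surging patients.
    branch, top = Counter(c.agent_branch for c in group).most_common(1)[0]
    suspicious_group = top / len(group) >= BRANCH_SHARE
    scores = {}
    for c in group:
        score = 1 if suspicious_group and c.agent_branch == branch else 0
        if c.distance_km >= FAR_KM:
            score += 1  # travels from a distant region
        if c.family_claims >= FAMILY_REPEAT:
            score += 1  # repeated claims with family members
        scores[c.patient] = score
    return suspicious_group, scores


claims = [
    Claim("p1", "H1", "X", "B7", 150, 2),
    Claim("p2", "H1", "X", "B7", 20, 0),
    Claim("p3", "H1", "X", "B7", 120, 0),
    Claim("p4", "H1", "X", "B2", 10, 0),
    Claim("p5", "H2", "X", "B2", 10, 0),
]

print(screen(claims, baseline=1, hospital="H1", disease="X"))
# → (True, {'p1': 3, 'p2': 1, 'p3': 2, 'p4': 0})
```

In this made-up example, four claims at H1 exceed three times the baseline, three of the four patients came through branch B7 so the group is flagged, and p1 scores highest because the visit is also long-distance and filed alongside family members. Rules like these need no learned model, which is consistent with the observation that much of today's detection is big data screening rather than AI.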

While insurance companies are trying to use AI to detect insurance fraud, the technology is still in its infancy. Although it is labeled as AI, in practice it is often just big data screening. The reason is the network separation regulation introduced in the financial sector in 2013, which kept insurers from adopting external AI programs such as ChatGPT. Even though regulations were eased this year to allow external services, each service must still pass the Financial Services Commission's strict review for designation as an innovative financial service.

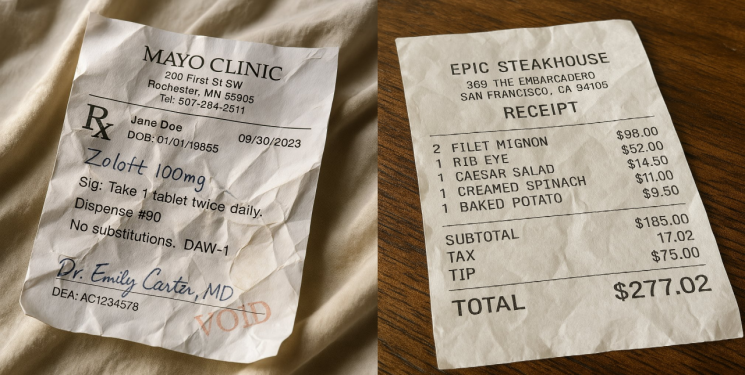

Meanwhile, AI technology in the private sector is advancing rapidly. For English, the primary training language of models such as ChatGPT and DALL·E 3, fake documents can already be generated flawlessly, with no character corruption. Deedy, an AI-focused investor and influencer at the Silicon Valley venture capital firm Menlo Ventures, recently posted on social media a payment receipt from a San Francisco steakhouse and a hospital treatment receipt from Rochester. Both were fakes generated by ChatGPT. Deedy commented, "Many real-world authentication processes rely on images as evidence," and added, "Image-based authentication is now completely neutralized."

Deedy, a Silicon Valley AI-focused investor and influencer, recently posted on his social media a steakhouse receipt (right) and a hospital receipt. Both are fake images generated by ChatGPT. Screenshot from Deedy's social media

Insurance industry professionals expect that once generative AI becomes more capable in Korean, insurance fraud involving manipulated documents, photos, and videos will surge. Choi Yujin, head of the Data Lab at Hanwha Life, said, "If generative AI becomes more sophisticated, insurance companies' own technologies will be unable to detect fraud," and added, "For financial institutions to respond, they need to bring in various external services and constantly test their defenses, but current laws and regulations make this impossible, which is very regrettable."

Others say that under the current cooperation framework, documents manipulated by AI are hard to detect quickly. Ham Yongho, head of the Long-term Insurance Investigation Center at Samsung Fire & Marine Insurance, who has worked in the Special Investigation Unit (SIU) for about 30 years, said, "Fraud using forged receipts and medical certificates is surging, but personal information protection makes it difficult to catch fake documents," and added, "I hope for more active cooperation from the Financial Supervisory Service and the National Health Insurance Service regarding the right to request data." Although the revised Special Act on Insurance Fraud Prevention, which took effect on August 14, 2023, gave supervisory authorities the right to request information, the National Health Insurance Service's restrictions on data submission still hamper smooth information exchange.

Lee Gichang, SIU team leader at DB Insurance, said, "With tens of thousands of cases received and processed every day across the insurance market, it is impossible to store and refine all this data manually," and added, "Insurance fraud is becoming increasingly sophisticated, so adopting AI is not a choice but a necessity, yet there is still a long way to go."

© The Asia Business Daily(www.asiae.co.kr). All rights reserved.